https://www.certivize.me/

arxiv.org/abs/2504.03255

#Philtech

www.washingtonpost.com/technology/2...

https://www.ft.com/content/8253b66e-ade7-4d1f-993b-2d0779c7e7d8

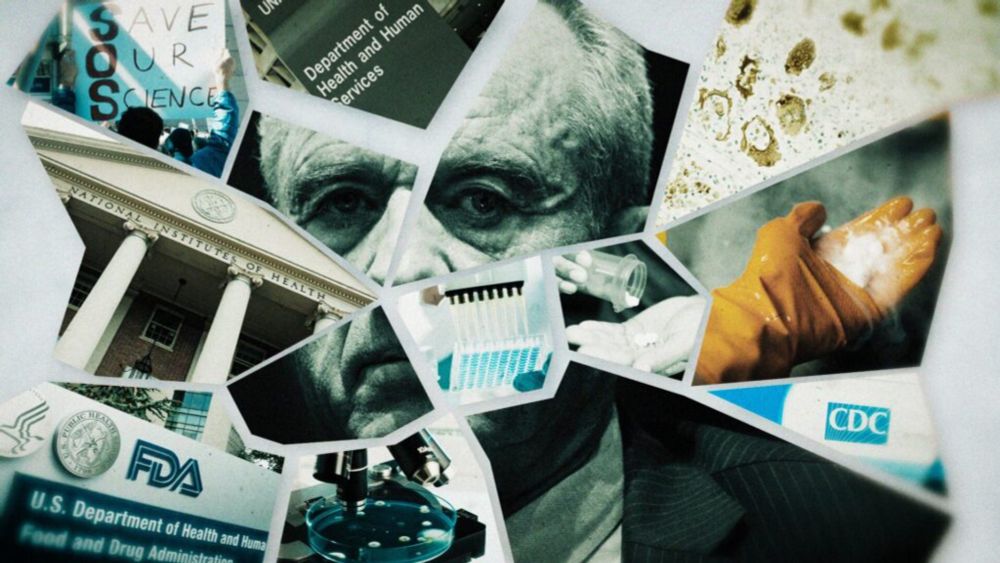

If AI were really able to do that, they would not have needed to first fire all the smart, experienced, and honest people at the FDA.

Even if you knew nothing else about AI in medicine, that should be enough to make you want to scream.

hls.harvard.edu/today/ai-is-...

In medical settings, researchers & institutional users often cite medical examination benchmark results as a proxy for AI model performance.

This is a woefully misguided practice: as with actual doctors, exam performance does NOT equal clinical competence!
