Carlo Sferrazza

@carlosferrazza.bsky.social

Postdoc at Berkeley AI Research. PhD from ETH Zurich.

Robotics, Artificial Intelligence, Humanoids, Tactile Sensing.

https://sferrazza.cc

Pinned

Ever wondered what robots 🤖 could achieve if they could not just see – but also feel and hear?

Introducing FuSe: a recipe for finetuning large vision-language-action (VLA) models with heterogeneous sensory data, such as vision, touch, sound, and more.

Details in the thread 👇

We just released FastTD3: a simple, fast, off-policy RL algorithm to train humanoid policies that transfer seamlessly from simulation to the real world.

younggyo.me/fast_td3

May 29, 2025 at 5:49 PM

Heading to @ieeeras.bsky.social RoboSoft today! I'll be giving a short Rising Star talk Thu at 2:30pm: "Towards Multi-sensory, Tactile-Enabled Generalist Robot Learning"

Excited for my first in-person RoboSoft after the 2020 edition went virtual mid-pandemic.

Reach out if you'd like to chat!

April 22, 2025 at 5:59 PM

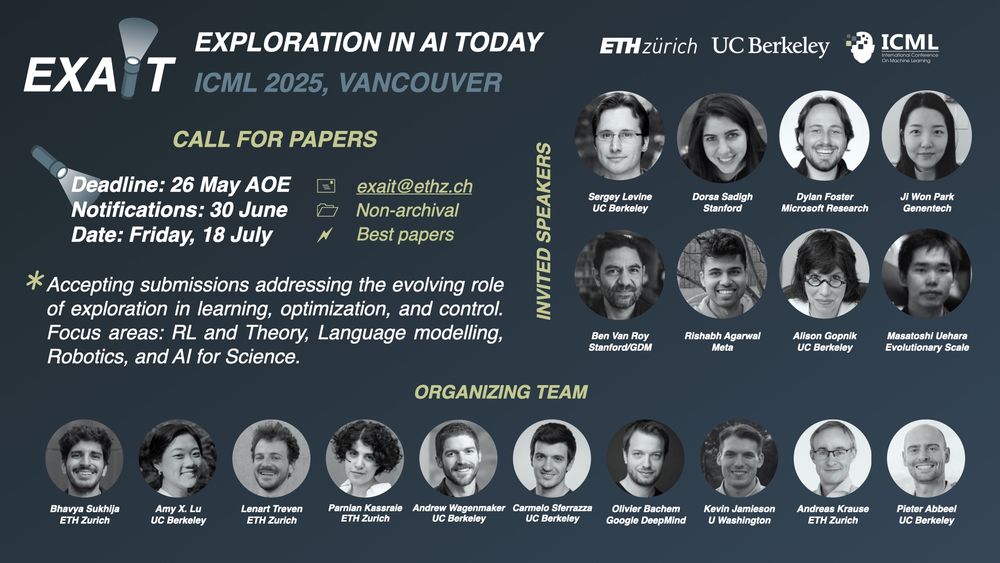

What is the place of exploration in today's AI landscape and in which settings can exploration algorithms address current open challenges?

Join us to discuss this at our exciting workshop at @icmlconf.bsky.social 2025: EXAIT!

exait-workshop.github.io

#ICML2025

April 17, 2025 at 5:53 AM

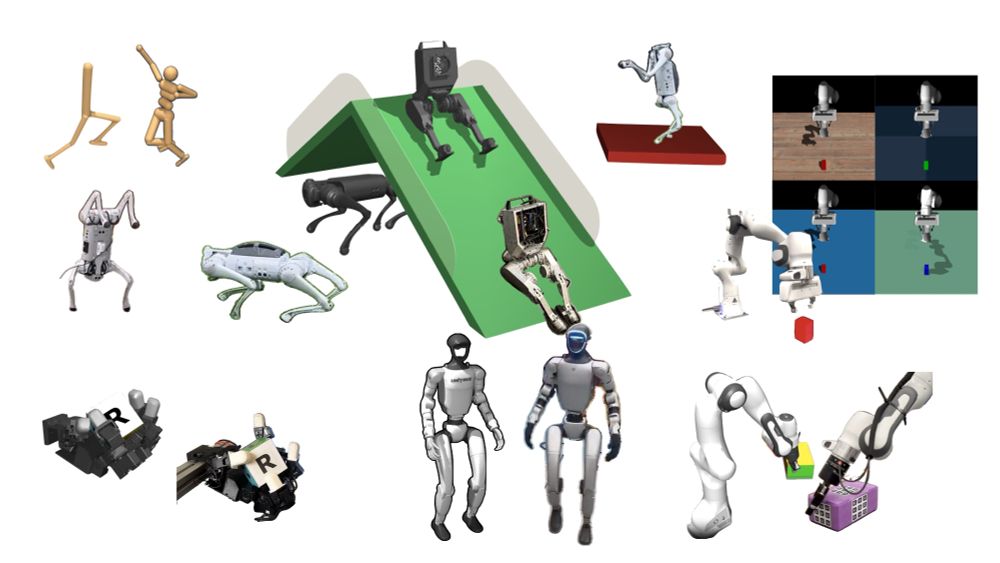

Big news for open-source robot learning! We are very excited to announce MuJoCo Playground.

The Playground is a reproducible sim-to-real pipeline that leverages the MuJoCo ecosystem and GPU acceleration to learn robot locomotion and manipulation in minutes.

playground.mujoco.org

Introducing playground.mujoco.org

Combining MuJoCo’s rich and thriving ecosystem, massively parallel GPU-accelerated simulation, and real-world results across a diverse range of robot platforms: quadrupeds, humanoids, dexterous hands, and arms.

Get started today: pip install playground

MuJoCo Playground

An open-source framework for GPU-accelerated robot learning and sim-to-real transfer

playground.mujoco.org

January 16, 2025 at 10:27 PM

Ever wondered what robots 🤖 could achieve if they could not just see – but also feel and hear?

Introducing FuSe: a recipe for finetuning large vision-language-action (VLA) models with heterogeneous sensory data, such as vision, touch, sound, and more.

Details in the thread 👇

January 13, 2025 at 6:51 PM

Reposted by Carlo Sferrazza

Excited to share MaxInfoRL, a family of powerful off-policy RL algorithms! The core focus of this work was to develop simple, flexible, and scalable methods for principled exploration. Check out the thread below to see how MaxInfoRL meets these criteria while also achieving SOTA empirical results.

🚨 New reinforcement learning algorithms 🚨

Excited to announce MaxInfoRL, a class of model-free RL algorithms that solves complex continuous control tasks (including vision-based!) by steering exploration towards informative transitions.

Details in the thread 👇

December 17, 2024 at 5:48 PM

December 17, 2024 at 5:47 PM
