🗣️ICML Poster West Exhibition Hall, 16 Jul, 11 a.m. PDT, No. W-707

📜arxiv.org/abs/2503.01584

🌐sites.google.com/view/sensei-paper

Work done with @cgumbsch.bsky.social (co-first), @zadaianchuk.bsky.social, @pavelkolevbg.bsky.social and @gmartius.bsky.social

8/8

7/8

6/8

5/8

4/8

Our approach is to use the human priors contained in foundation models. We extend MOTIF to vision-language models (VLMs): a VLM compares pairs of observations collected through self-supervised exploration, and this ranking is distilled into a reward function.

3/8
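To make the "distill pairwise comparisons into a reward" step concrete, here is a minimal sketch assuming a MOTIF-style Bradley-Terry objective: the reward model r is fit so that sigmoid(r(a) - r(b)) matches the preference label for each pair. Everything here is illustrative and hypothetical: `vlm_prefers_first` is a fake stand-in for an actual VLM query, observations are tiny feature vectors, and the reward is linear. It is not the paper's implementation.

```python
import math
import random

def vlm_prefers_first(obs_a, obs_b):
    """Hypothetical stand-in for a VLM query: 1.0 if obs_a is judged more
    interesting than obs_b, else 0.0. Faked here with a fixed rule."""
    return 1.0 if sum(obs_a) > sum(obs_b) else 0.0

def reward(w, obs):
    # Assumed linear reward model r(o) = w . o, chosen for brevity.
    return sum(wi * oi for wi, oi in zip(w, obs))

def distill_reward(pairs, labels, dim, lr=0.1, epochs=200):
    """Fit w so that sigmoid(r(a) - r(b)) matches the labels
    (Bradley-Terry / logistic loss), using full-batch gradient descent."""
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for (a, b), y in zip(pairs, labels):
            p = 1.0 / (1.0 + math.exp(-(reward(w, a) - reward(w, b))))
            # Gradient of the cross-entropy loss w.r.t. w: (p - y) * (a - b)
            for i in range(dim):
                grad[i] += (p - y) * (a[i] - b[i])
        w = [wi - lr * gi / len(pairs) for wi, gi in zip(w, grad)]
    return w

random.seed(0)
# Observations would come from self-supervised exploration; here they are random.
observations = [[random.random() for _ in range(3)] for _ in range(50)]
pairs = [tuple(random.sample(observations, 2)) for _ in range(200)]
labels = [vlm_prefers_first(a, b) for a, b in pairs]
w = distill_reward(pairs, labels, dim=3)
```

The distilled `reward(w, obs)` can then score any new observation without querying the VLM again, which is the point of distillation: expensive pairwise queries happen offline, and the agent gets a cheap dense reward during RL.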

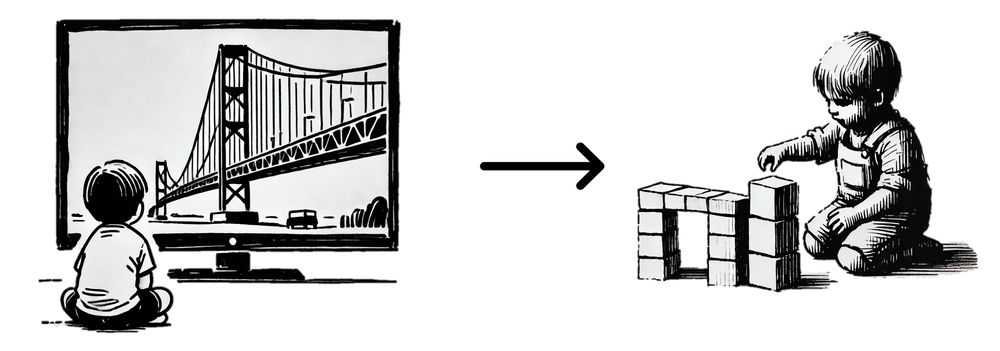

Children solve this by observing and imitating adults. We bring such semantic exploration to artificial agents.

2/8