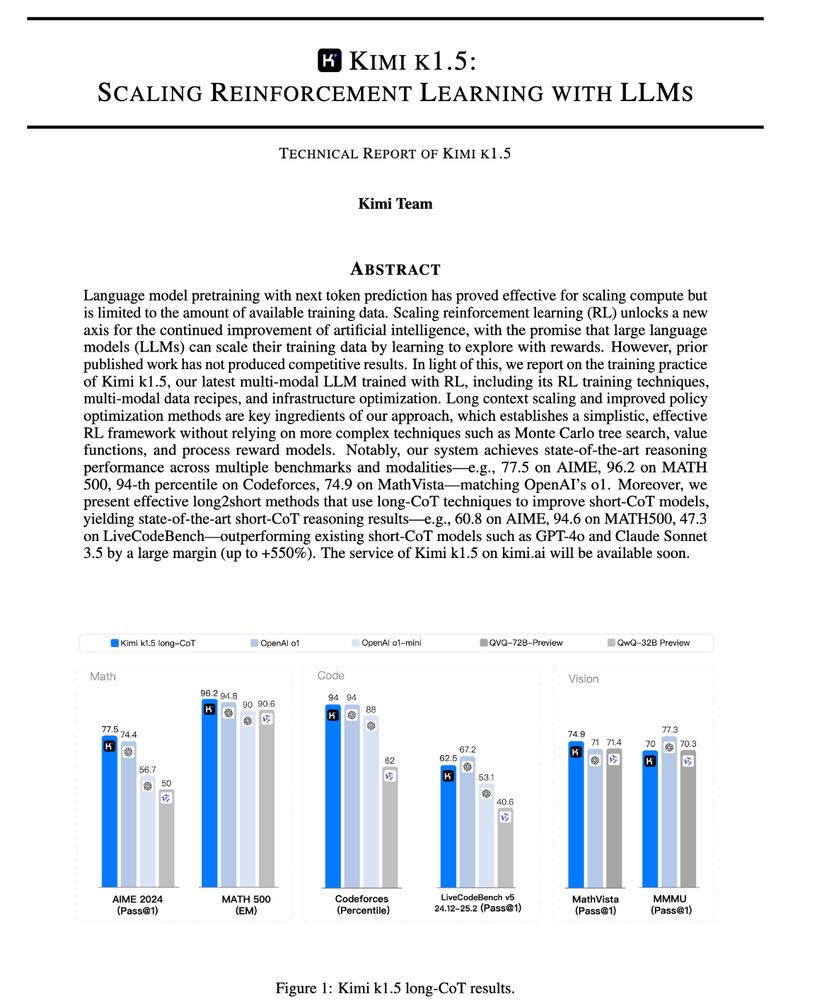

kimi 1.5 report: https://buff.ly/4jqgCOa

I started disliking cosine similarity (cossim) some years ago for several reasons, such as its non-linearity around 0.0 and the loss of certainty information caused by normalizing the feature vectors, but this study seems to give another good reason to abandon it.
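A minimal sketch of the normalization point, using made-up vectors: cosine similarity divides by both norms, so a weak and a strong feature vector that point in the same direction get the same score, while the raw dot product still tells them apart. Reading the norm as "certainty" is an illustrative assumption here, not something taken from the study.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity: dot product of the two L2-normalized vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical feature vectors with the same direction but very different norms
# (e.g. a weak, low-confidence activation vs. a strong, confident one).
weak = np.array([0.01, 0.02, 0.0])
strong = np.array([10.0, 20.0, 0.0])
query = np.array([1.0, 2.0, 0.0])

# After normalization both look identical to the query: the norm is discarded.
print(cosine_similarity(query, weak))    # ≈ 1.0
print(cosine_similarity(query, strong))  # ≈ 1.0

# The unnormalized dot product keeps the magnitude difference.
print(np.dot(query, weak), np.dot(query, strong))
```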

The authors propose a pre-training framework using synthetic cross-modal data to enhance LoFTR and RoMa for matching across medical imaging modalities like CT, MR, PET, and SPECT.

zju3dv.github.io/MatchAnything/

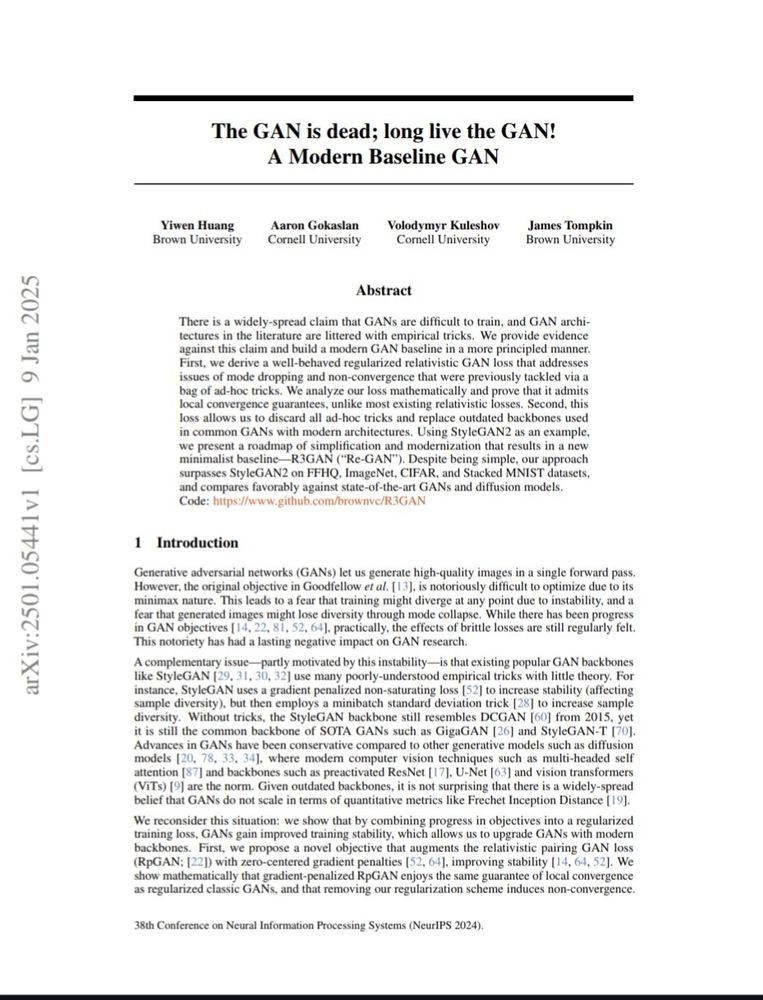

This is a very interesting paper that explores how to make GANs simpler and more performant.

abs: arxiv.org/abs/2501.05441

code: github.com/brownvc/R3GAN

tl;dr: it is good, it even feels human-like, but it is not perfect.

ducha-aiki.github.io/wide-baselin...

Blog: sakana.ai/asal/

We propose a new method called Automated Search for Artificial Life (ASAL) which uses foundation models to automate the discovery of the most interesting and open-ended artificial lifeforms!

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

arxiv.org/abs/2412.11768

#MLSky #NeuroAI

Beyond spectacular headlines like "o1 managed to escape!!!", what do these papers actually say? Well, let's find out.

(link in the reply)

@awhiteguitar.bsky.social & Yueh-Cheng Liu have been working tirelessly to bring:

🔹1006 high-fidelity 3D scans

🔹+ DSLR & iPhone captures

🔹+ rich semantics

Elevating 3D scene understanding to the next level!🚀

w/ @niessner.bsky.social

kaldir.vc.in.tum.de/scannetpp

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, supports longer context, and is more useful. 🧵

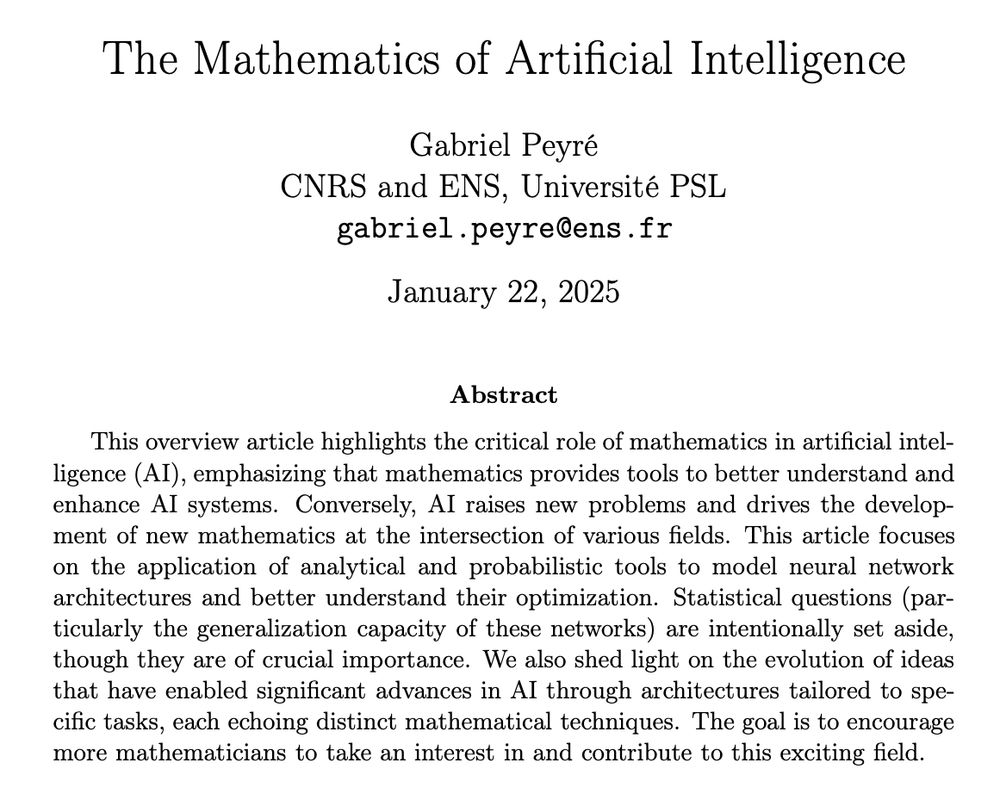

Below are a few words on each of them:

📢📢𝐆𝐀𝐅: 𝐆𝐚𝐮𝐬𝐬𝐢𝐚𝐧 𝐀𝐯𝐚𝐭𝐚𝐫 𝐑𝐞𝐜𝐨𝐧𝐬𝐭𝐫𝐮𝐜𝐭𝐢𝐨𝐧 𝐟𝐫𝐨𝐦 𝐌𝐨𝐧𝐨𝐜𝐮𝐥𝐚𝐫 𝐕𝐢𝐝𝐞𝐨𝐬 𝐯𝐢𝐚 𝐌𝐮𝐥𝐭𝐢-𝐯𝐢𝐞𝐰 𝐃𝐢𝐟𝐟𝐮𝐬𝐢𝐨𝐧📢📢

We reconstruct animatable Gaussian head avatars from monocular videos captured by commodity devices such as smartphones.

It will take me some time to digest this article fully, but it's important to follow the authors' advice and read the appendices, as the examples are helpful and well-illustrated.

📄 arxiv.org/abs/2409.09347

🐍 Code: github.com/google-deepm...

📄 Article: arxiv.org/abs/2406.04329

📼 Video: www.youtube.com/watch?v=qj9B...

We exploit tactile sensing to enhance geometric details for text- and image-to-3D generation.

Check out our #NeurIPS2024 work on Tactile DreamFusion: Exploiting Tactile Sensing for 3D Generation: ruihangao.github.io/TactileDream...

1/3

📢 Introducing APOLLO! 🚀: SGD-like memory cost, yet AdamW-level performance (or better!).

❓ How much memory do we need for optimizer states in LLM training? 🧐

Almost zero.

📜 Paper: arxiv.org/abs/2412.05270

🔗 GitHub: github.com/zhuhanqing/A...
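For context on the question, a back-of-the-envelope sketch of optimizer-state memory for the baselines APOLLO is compared against. It assumes fp32 states (4 bytes each) and a hypothetical 7B-parameter model, and counts only optimizer states (not weights, gradients, or activations). It does not model APOLLO itself.

```python
def optimizer_state_gib(num_params: int, states_per_param: int, bytes_per_state: int = 4) -> float:
    """Memory for optimizer states only, in GiB (excludes weights, gradients, activations)."""
    return num_params * states_per_param * bytes_per_state / 2**30

n = 7_000_000_000  # hypothetical 7B-parameter model

# Number of per-parameter state buffers each optimizer keeps.
for name, k in [("SGD (no momentum)", 0), ("SGD + momentum", 1), ("AdamW (m and v)", 2)]:
    print(f"{name:>18}: {optimizer_state_gib(n, k):6.1f} GiB")
```

Under these assumptions AdamW's two moment buffers alone take about 52 GiB, while plain SGD needs none; that gap is what "SGD-like memory cost" refers to.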

Apple introduces TarFlow, a new Transformer-based variant of Masked Autoregressive Flows.

SOTA on likelihood estimation for images, with sample quality and diversity comparable to diffusion models.

arxiv.org/abs/2412.06329
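For readers new to Masked Autoregressive Flows, a minimal NumPy sketch of why they give exact likelihoods: one affine autoregressive layer with a standard-normal base distribution. The masked linear "conditioner" is a hypothetical stand-in (the post says TarFlow uses a Transformer there), and a real model stacks many such layers.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 4

# Hypothetical conditioner: strictly lower-triangular weights, so mu_i and alpha_i
# depend only on x_{<i} (this mask is what makes the layer autoregressive).
mask = np.tril(np.ones((D, D)), k=-1)
W_mu = rng.normal(size=(D, D)) * mask
W_alpha = 0.1 * rng.normal(size=(D, D)) * mask

def forward(x: np.ndarray) -> np.ndarray:
    """Data -> noise: z_i = (x_i - mu_i(x_<i)) * exp(-alpha_i(x_<i))."""
    return (x - W_mu @ x) * np.exp(-(W_alpha @ x))

def log_likelihood(x: np.ndarray) -> float:
    """Exact log p(x) via change of variables; all dimensions in one parallel pass."""
    z = forward(x)
    log_base = -0.5 * np.sum(z**2) - 0.5 * D * np.log(2 * np.pi)
    # dz/dx is lower-triangular with diagonal exp(-alpha_i), so log|det| = -sum(alpha).
    log_det = -np.sum(W_alpha @ x)
    return float(log_base + log_det)

def sample() -> np.ndarray:
    """Noise -> data: inverting the flow is sequential, one dimension at a time."""
    z = rng.normal(size=D)
    x = np.zeros(D)
    for i in range(D):
        # Row i of each masked matrix only weights x[:i], which are already filled.
        mu_i = W_mu[i] @ x
        alpha_i = W_alpha[i] @ x
        x[i] = z[i] * np.exp(alpha_i) + mu_i
    return x

print(log_likelihood(sample()))
```

The asymmetry shown here (parallel density evaluation, sequential sampling) is the usual trade-off of MAF-style models.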

We propose a training-free 3D shape editing approach that rapidly and precisely edits the regions intended by the user and keeps the rest as is.

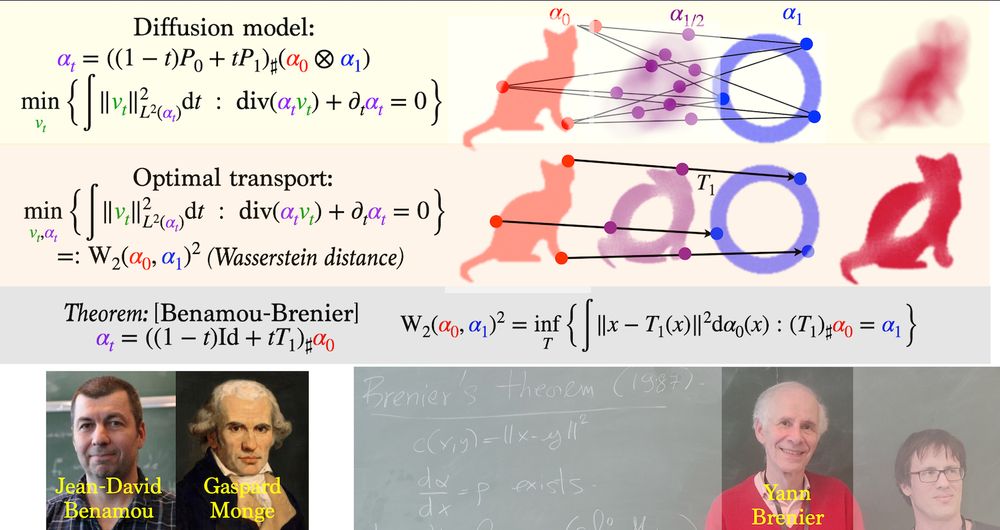

Also covers variants like non-Euclidean & discrete flow matching.

A PyTorch library is also released with this guide!

This looks like a very good read! 🔥

arxiv: arxiv.org/abs/2412.06264
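As a pointer to what the guide covers, a minimal NumPy sketch of the basic (Euclidean) conditional flow-matching objective with a linear interpolation path. The linear path, the Gaussian source, the toy data, and the all-zeros "model" are illustrative assumptions; this does not use or reproduce the API of the released PyTorch library.

```python
import numpy as np

rng = np.random.default_rng(0)

def cfm_batch(x1: np.ndarray):
    """One conditional flow-matching batch with a linear path.

    x0 ~ N(0, I) is the source sample, x1 the data sample.
    x_t = (1 - t) * x0 + t * x1, and the regression target is dx_t/dt = x1 - x0.
    """
    x0 = rng.normal(size=x1.shape)
    t = rng.uniform(size=(x1.shape[0], 1))
    xt = (1.0 - t) * x0 + t * x1
    target = x1 - x0
    return xt, t, target

def cfm_loss(v_pred: np.ndarray, target: np.ndarray) -> float:
    """Squared error between predicted and target velocities, averaged over the batch."""
    return float(np.mean(np.sum((v_pred - target) ** 2, axis=1)))

# Toy usage: a hypothetical all-zeros velocity "model"; in practice a network
# v_theta(x_t, t) is trained to minimize this loss.
data = rng.normal(loc=3.0, size=(128, 2))
xt, t, target = cfm_batch(data)
print(cfm_loss(np.zeros_like(target), target))
```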

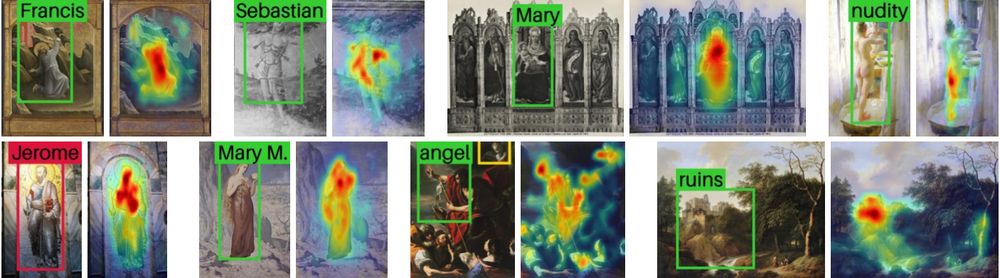

happy happy happy to introduce NADA, our latest work on object detection in art! 🎨

with amazing collaborators:

@patrick-ramos.bsky.social, @nicaogr.bsky.social, Selina Khan, Yuta Nakashima
