Prof @ EPFL, Lead @ CLAIRE lab, ELLIS Scholar

Ex: Staff Research Scientist @ DeepMind, MSR, IBM Research

Incredible execution and attention to details by @xiuyingwei.bsky.social !

giving.ucla.edu/Campaign/Donat

❌ You want rewards, but GRPO only works online?

❌ You want offline, but DPO is limited to preferences?

✅ QRPO can do both!

🧵Here's how we do it:

Until then:

Paper: arxiv.org/abs/2405.066...

Code: github.com/au-clan/FoA

12/12

Papers due March 30th 23:59 AoE 🚀

@sdumbrava.bsky.social @olafhartig.bsky.social @csaudk.bsky.social

Details: www.cs.au.dk/~clan/openings

Deadline: May 1, 2025

Please boost!

cc: @aicentre.dk @wikiresearch.bsky.social

LLMs and World Models, Part 1: aiguide.substack.com/p/llms-and-w...

LLMs and World Models, Part 2: aiguide.substack.com/p/llms-and-w...

Talk: www.youtube.com/watch?v=a-1x...

Award announcement: www.computer.org/publications...

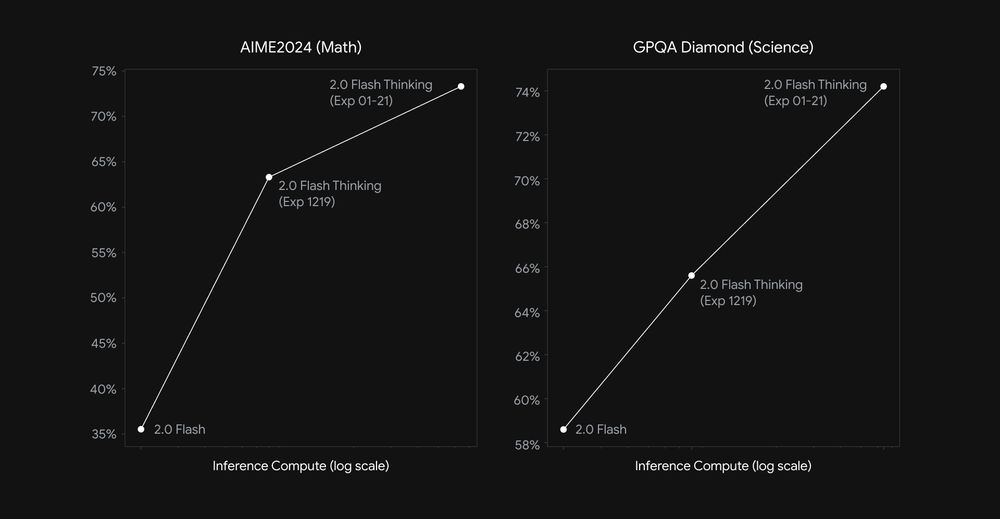

Today we’re sharing an experimental update w/improved performance on math, science, and multimodal reasoning benchmarks 📈:

• AIME: 73.3%

• GPQA: 74.2%

• MMMU: 75.4%

@caglarai.bsky.social

🧑‍💻 github.com/CLAIRE-Labo/...

Wed 11 Dec 11 am - 2 pm PST

West Ballroom A-D #6403

@caglarai.bsky.social @andreamiele.bsky.social @razvan-pascanu.bsky.social

We have two accepted papers from my lab:

1. Building on Efficient Foundations: Effective Training of LLMs with Structured Feedforward Layers, on Wednesday, East Exhibit Hall A-C #2010 (1/3)
