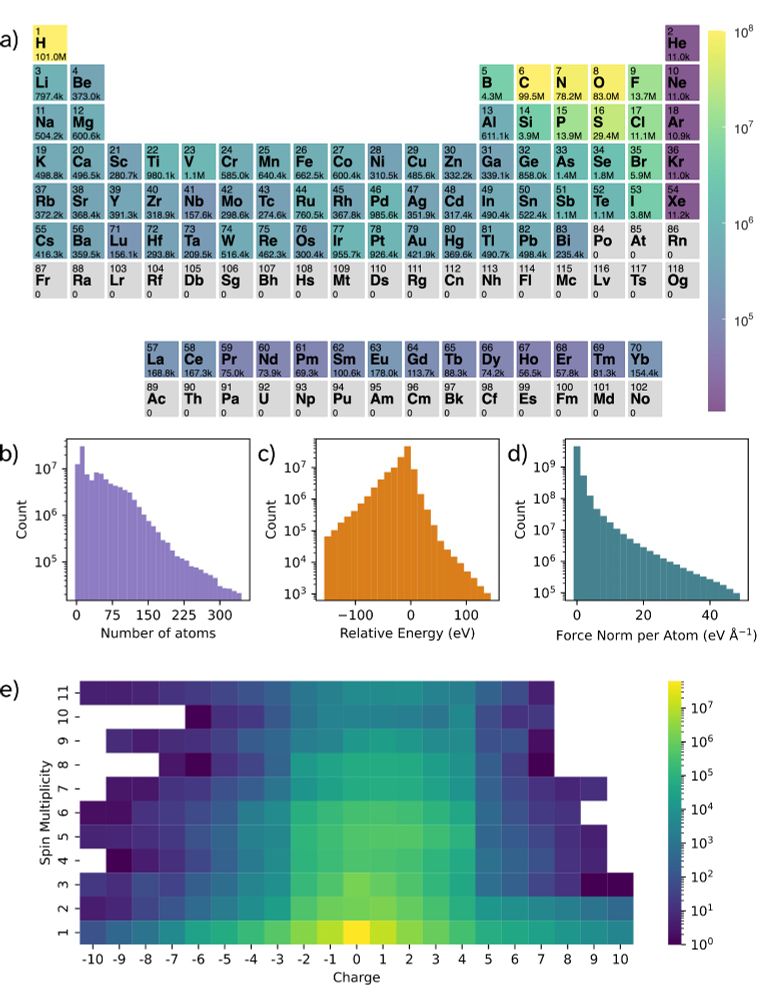

Introducing UMA - our latest model trained on over 30 billion atoms across our open-science datasets.

We hope to release a public leaderboard in the near future!

@AIatMeta @OpenCatalyst

OMol25: huggingface.co/facebook/OMo...

UMA: huggingface.co/facebook/UMA

Blog: ai.meta.com/blog/meta-fa...

Demo: huggingface.co/spaces/faceb...
