Benno Krojer

@bennokrojer.bsky.social

AI PhDing at Mila/McGill (prev FAIR intern). Happily residing in Montreal 🥯❄️

Academic: language grounding, vision+language, interp, rigorous & creative evals, cogsci

Other: many sports, urban explorations, puzzles/quizzes

bennokrojer.com

Hahaha yes they all mentioned Embo Died for a while

And generally memes are all over

November 8, 2025 at 2:01 AM

I used it recently for the first time and was blown away by the speed. Should switch!

September 22, 2025 at 2:35 AM

for inspiration, here are some from my past papers:

arxiv.org/abs/2407.03471

arxiv.org/abs/2506.09987

arxiv.org/abs/2508.01119

August 13, 2025 at 12:19 PM

Do you have any thoughts on how this relates to e.g. the Platonic Representation Hypothesis paper? arxiv.org/abs/2405.07987

The Platonic Representation Hypothesis

We argue that representations in AI models, particularly deep networks, are converging. First, we survey many examples of convergence in the literature: over time and across multiple domains, the ways...

August 13, 2025 at 12:15 PM

Maybe he'd otherwise miss the Alps and skiing too much

July 28, 2025 at 3:57 PM

Done the same in the past when I felt little motivation for the PhD! It's been a while since I've read one... Maybe I'll pick it up again

July 4, 2025 at 10:17 PM

Also check out our previous two episodes! They didn't have a single guest, instead:

1) we introduce the podcast and how Tom and I got into research in Ep 00

2) we interview several people at Mila just before the NeurIPS deadline about their submissions in Ep 01

June 25, 2025 at 3:54 PM

This is part of a larger effort at Meta to significantly improve physical world modeling, so check out the other works in this blog post!

ai.meta.com/blog/v-jepa-...

June 13, 2025 at 2:47 PM

Some reflections at the end:

There's a lot of talk about math reasoning these days, but this project made me appreciate what simple reasoning we humans take for granted, arising in our first months and years of living

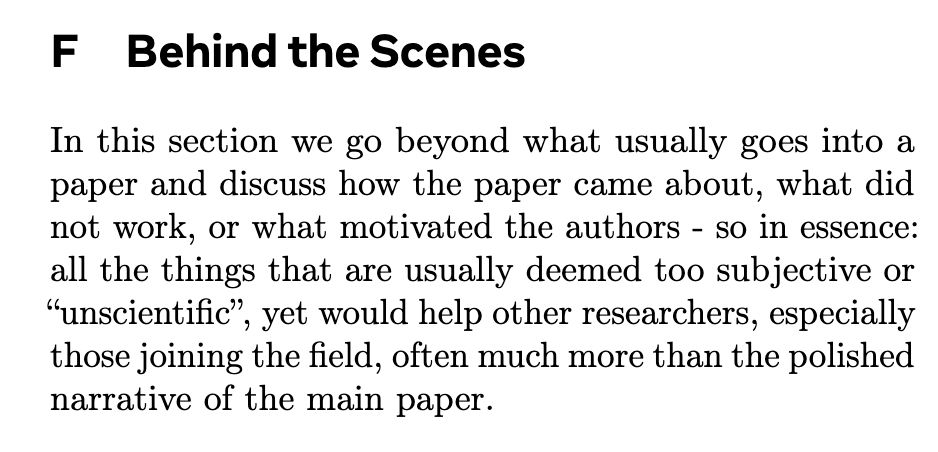

As usual I also included "Behind The Scenes" in the Appendix:

June 13, 2025 at 2:47 PM

I am super grateful to my smart+kind collaborators at Meta who made this a very enjoyable project :)

(Mido Assran Nicolas Ballas @koustuvsinha.com @candaceross.bsky.social @quentin-garrido.bsky.social Mojtaba Komeili)

The Montreal office in general is a very fun place 👇

June 13, 2025 at 2:47 PM

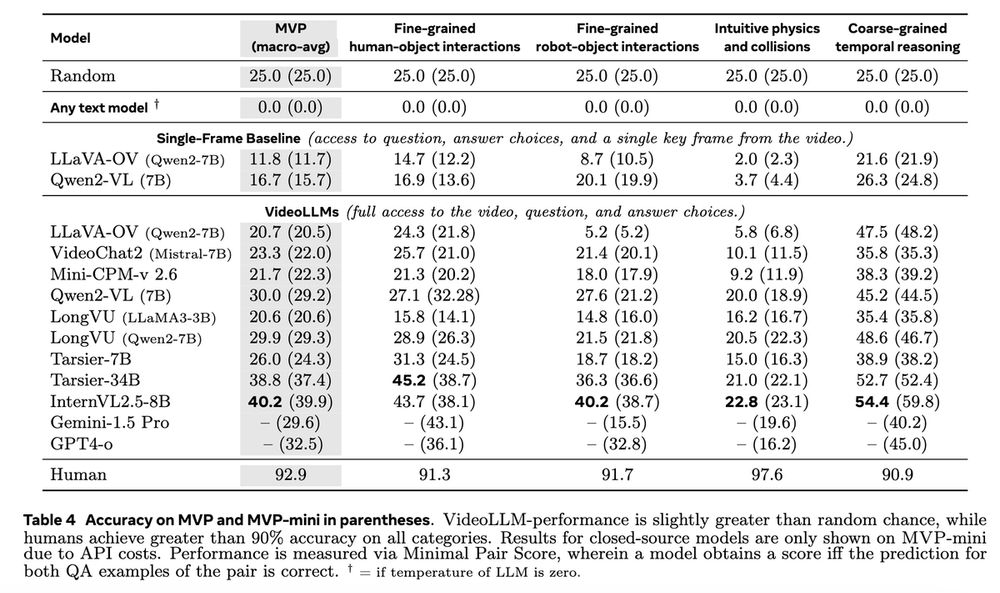

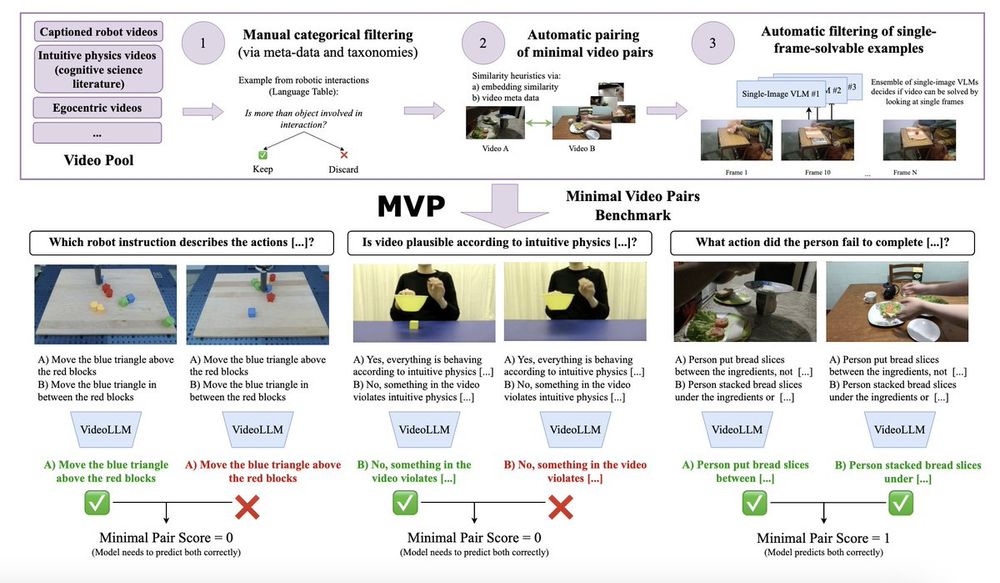

The hardest tasks for current models are still intuitive physics tasks, where performance is often below random (in line with the previous literature)

We encourage the community to use MVPBench to check if the latest VideoLLMs possess a *real* understanding of the physical world!

June 13, 2025 at 2:47 PM

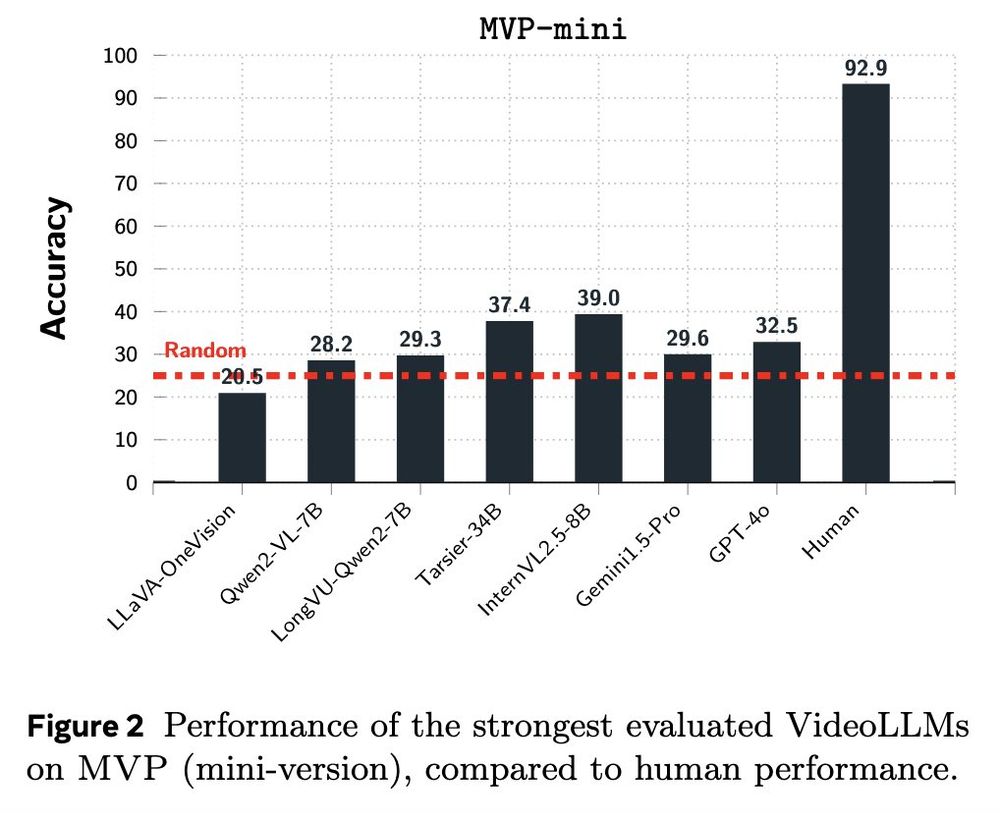

On the other hand, even the strongest SOTA models perform around random chance, with only 2-3 models significantly above random

June 13, 2025 at 2:47 PM

The questions in MVPBench are conceptually simple: relatively short videos with little linguistic or cultural knowledge needed. As a result, humans have no problem with these questions, e.g. it is known that even babies do well on various intuitive physics tasks

June 13, 2025 at 2:47 PM

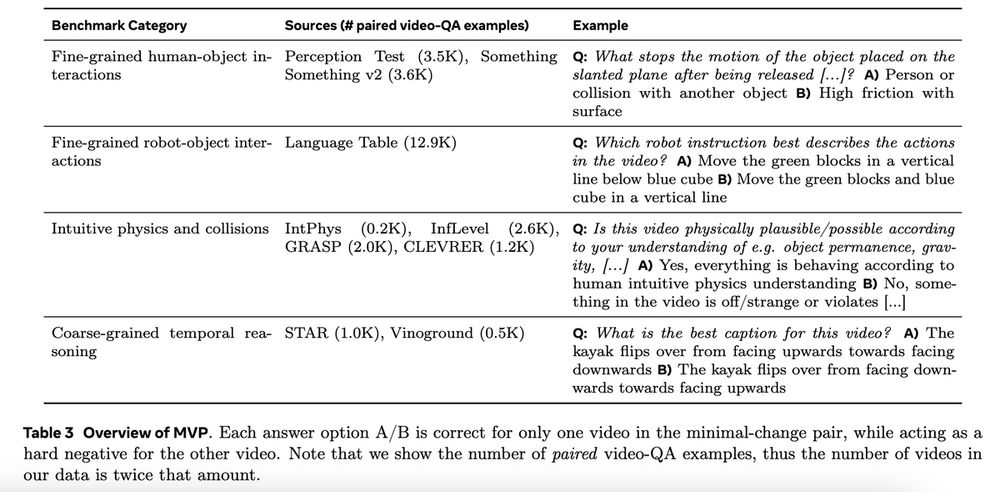

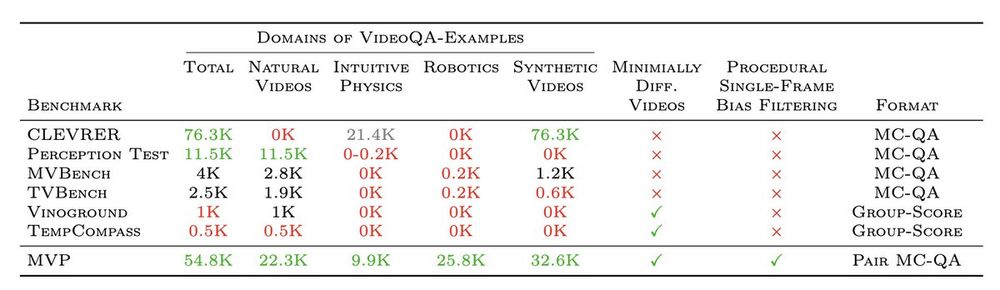

By automating the pairing of highly similar videos and unifying different datasets, as well as filtering out examples that models can solve with a single frame, we end up with (probably) the largest and most diverse dataset of its kind:

June 13, 2025 at 2:47 PM

So as a solution we propose a 3-step curation framework that results in the Minimal Video Pairs benchmark (MVPBench)

June 13, 2025 at 2:47 PM