#Reading #NeuroCognition #ComputationalModels

https://selflearningsystems.uni-koeln.de/

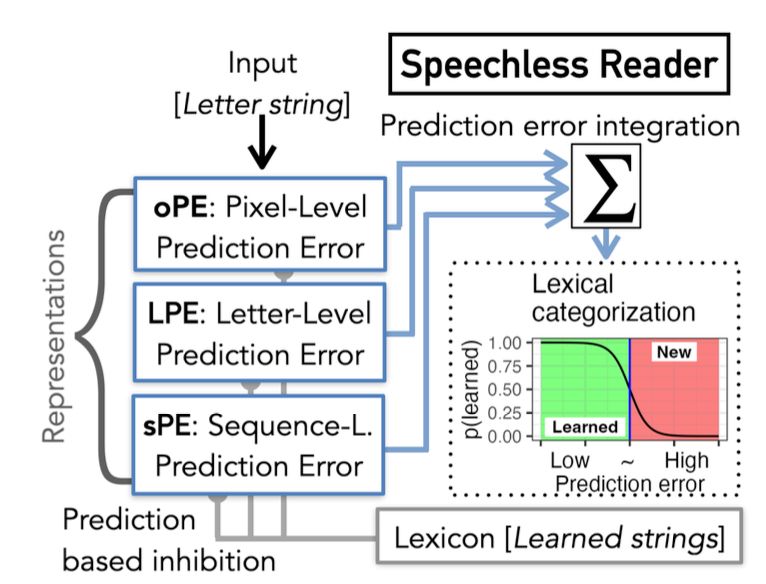

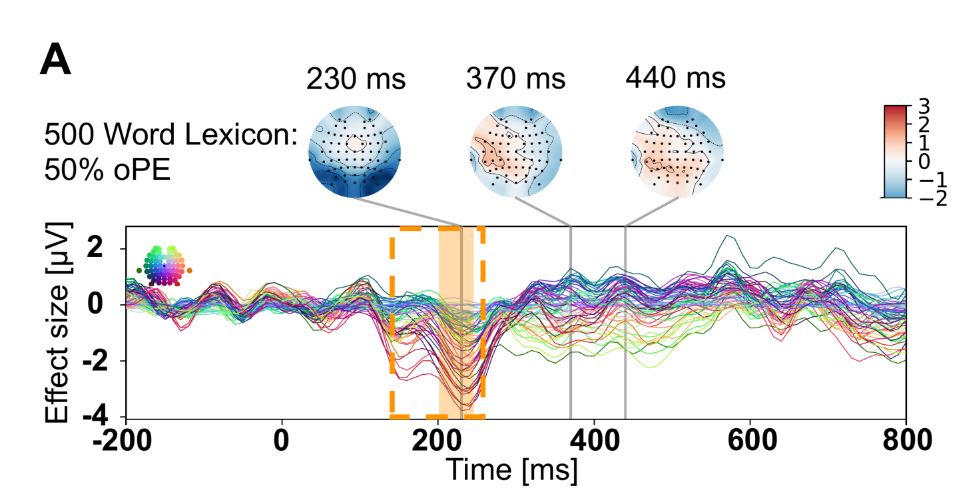

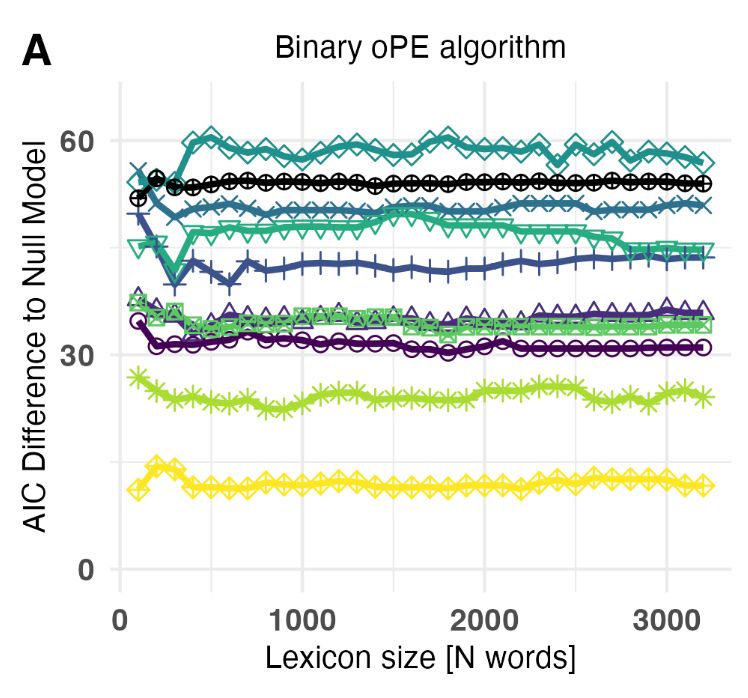

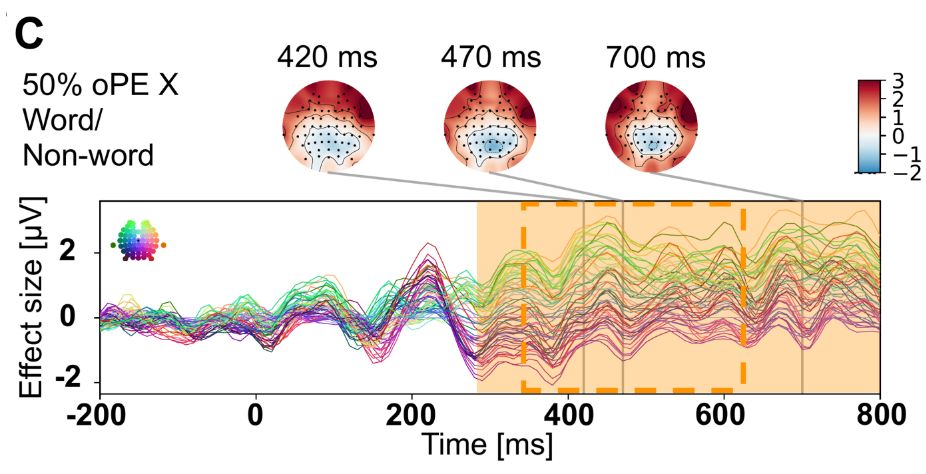

The central finding is that, for late brain activation and behavior, a model whose representation combines binary signals with memory storage is consistent with estimates of human vocabulary size.


Within that framework, we test whether the precision of the representation can be increased by

(i) Binary signals