Benjamin Henke

@benhenke.bsky.social

Philosopher of Cognitive Science and AI. @imperialcollegeldn.bsky.social, @cfi-cambridge.bsky.social, and the Institute of Philosophy

Associate Director @laihp.bsky.social

While my opinions are my own, they’re also my gift to all of you.

Reposted by Benjamin Henke

Important work from @birchlse.bsky.social

Here is "AI Consciousness: A Centrist Manifesto". I've been working on this feverishly because the issue seems to me so urgent - and I'm worried extreme positions on both sides are becoming locked in, when the best way forward is in the centre. Please read it! philpapers.org/rec/BIRACA-4

August 28, 2025 at 6:07 PM

Can you REALLY tell the difference between AI-generated and human-written text? One of these texts was written by a human and another by a well-prompted chatbot. Which is which?:

August 28, 2025 at 4:18 PM

Reposted by Benjamin Henke

Next up in our ongoing AI Affect series:

👤 Tim Salomons (Queens Canada)

📢 "How do we judge others' pain?"

🗓️ TOMORROW April 15, 3-4:30 PM

📍 Join us in person or online! DM for details.

April 14, 2025 at 3:37 PM

Reposted by Benjamin Henke

Announcement of the Institute of Philosophy Conference on Responsible AI at the University of London on 19-20 May. Free and open to all.

philosophy.sas.ac.uk/news-events/...

Responsible AI

philosophy.sas.ac.uk

April 9, 2025 at 10:06 AM

But her emails

SignalGate Is Bad; But OPSEC Isn’t Even the Worst Part Of It talkingpointsmemo.com/edblog/signa...

SignalGate Is Bad; But OPSEC Isn’t Even the Worst Part Of It

I haven’t had time to comment on the Jeff Goldberg’s story about...

talkingpointsmemo.com

March 25, 2025 at 10:37 AM

Reposted by Benjamin Henke

Join us Friday for a bonus PPE talk!

Sam Berstler (MIT) | "Conversing in the Dark: Off-Off Record Speech Acts and the Cooperative Creation of Uncertainty"

📆: Fri, March 28, 4:30-6 PM

📍: Senate House, Rm 349

Hope to see you there!

philosophy.sas.ac.uk/news-events/...

Conversing in the Dark: Off-Off Record Speech Acts and the Cooperative Creation of Uncertainty | Sam Berstler (MIT)

philosophy.sas.ac.uk

March 25, 2025 at 8:22 AM

Reposted by Benjamin Henke

Next up in our ongoing AI Affect series:

👤 Rob Long (Eleos AI)

📢 "Taking AI Welfare Seriously"

🗓️ Tuesday, March 25, 3-4:30 PM

📍 Join us in person or online! DM for details.

March 21, 2025 at 10:33 AM

Reposted by Benjamin Henke

Next up in our ongoing AI Affect series:

👤 Tom Everitt (Google DeepMind) @tom4everitt.bsky.social

📢 "Agency as backwards causality"

🗓️ TODAY Tuesday, March 4, 3-4:30 PM

📍 Join us in person or online! DM for details.

March 4, 2025 at 11:31 AM

Reposted by Benjamin Henke

The deadline for applying to be an AI fellow at the LAIHP is this Sunday.

The LAIHP is pleased to invite applications for Visiting Fellowships at the Institute of Philosophy, School of Advanced Study, University of London.

The fellowship period will run from May 19th-June 27th, 2025

For more information, see below.

AI Fellows 2025 | LAIHP

www.ai-humanity-london.com

February 18, 2025 at 2:25 PM

Reposted by Benjamin Henke

A cartoon by Amy Kurzweil. #NewYorkerCartoons

February 14, 2025 at 2:03 AM

Reposted by Benjamin Henke

It's a neat way to stumble upon interesting information randomly, learn new things, and spend spare moments of boredom without reaching for an algorithmically addictive social media app.

Developer creates endless Wikipedia feed to fight algorithm addiction

WikiTok cures boredom in spare moments with wholesome swipe-up Wikipedia article discovery.

arstechnica.com

February 10, 2025 at 8:19 PM

Reposted by Benjamin Henke

University College Dublin is hiring five-year Ad Astra Fellows in the Philosophy of Technology/AI. Deadline: February 21st. Find out more at the link below.

Ad Astra Fellow in Ethics and Philosophy of Technology - UCD School of Philosophy

www.ucd.ie

February 10, 2025 at 12:16 PM

Reposted by Benjamin Henke

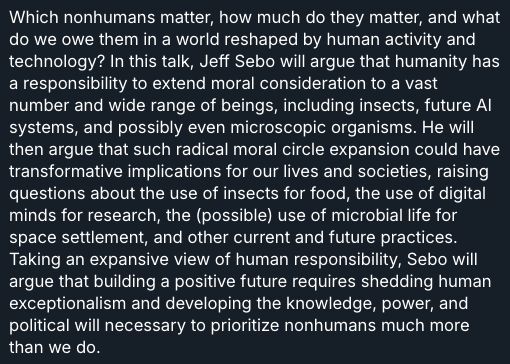

Next up in our ongoing AI Affect series:

👤 Jeff Sebo (NYU) @jeffsebo.bsky.social

📢 "The Moral Circle"

🗓️ Tuesday, February 4, 3-4:30 PM

📍 Join us in person or online! DM for details.

February 3, 2025 at 11:33 AM

Reposted by Benjamin Henke

Very good (technical) explainer answering "How has DeepSeek improved the Transformer architecture?". Aimed at readers already familiar with Transformers.

epoch.ai/gradient-upd...

How has DeepSeek improved the Transformer architecture?

This Gradient Updates issue goes over the major changes that went into DeepSeek’s most recent model.

epoch.ai

January 30, 2025 at 9:07 PM

Reposted by Benjamin Henke

The LAIHP is pleased to invite applications for Visiting Fellowships at the Institute of Philosophy, School of Advanced Study, University of London.

The fellowship period will run from May 19th-June 27th, 2025

For more information, see below.

AI Fellows 2025 | LAIHP

www.ai-humanity-london.com

January 20, 2025 at 2:22 PM

Reposted by Benjamin Henke

Today, we are publishing the first-ever International AI Safety Report, backed by 30 countries and the OECD, UN, and EU.

It summarises the state of the science on AI capabilities and risks, and how to mitigate those risks. 🧵

Full Report: assets.publishing.service.gov.uk/media/679a0c...

1/21

January 29, 2025 at 1:50 PM

Reposted by Benjamin Henke

Reminder @alex-taylor.bsky.social is speaking *this* Thursday discussing his BRAID project on red teaming & outsourcing labour in the Global South.

Make sure you don't miss out - get your hybrid ticket now 👉 rb.gy/64oljm

@technomoralfutures.bsky.social @edcdcs.bsky.social @uoe-gail.bsky.social

January 27, 2025 at 12:27 PM

Reposted by Benjamin Henke

Can't wait for this talk tomorrow?

Too bad, that's when it is. We're excited too.

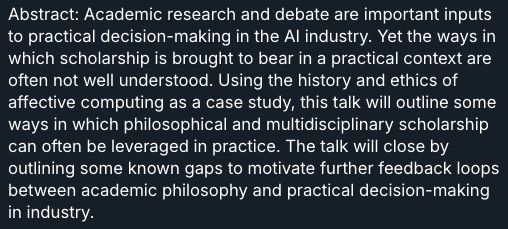

We're excited to announce the next speaker in our AI Affect series is Amanda McCroskery (Google DeepMind)!

📢 "From Research to Practical Decision-making on Affective AI"

🗓️ January 28th, 3-4:30 PM

📍 Join us in person or online! DM for details.

January 27, 2025 at 10:56 AM

Reposted by Benjamin Henke

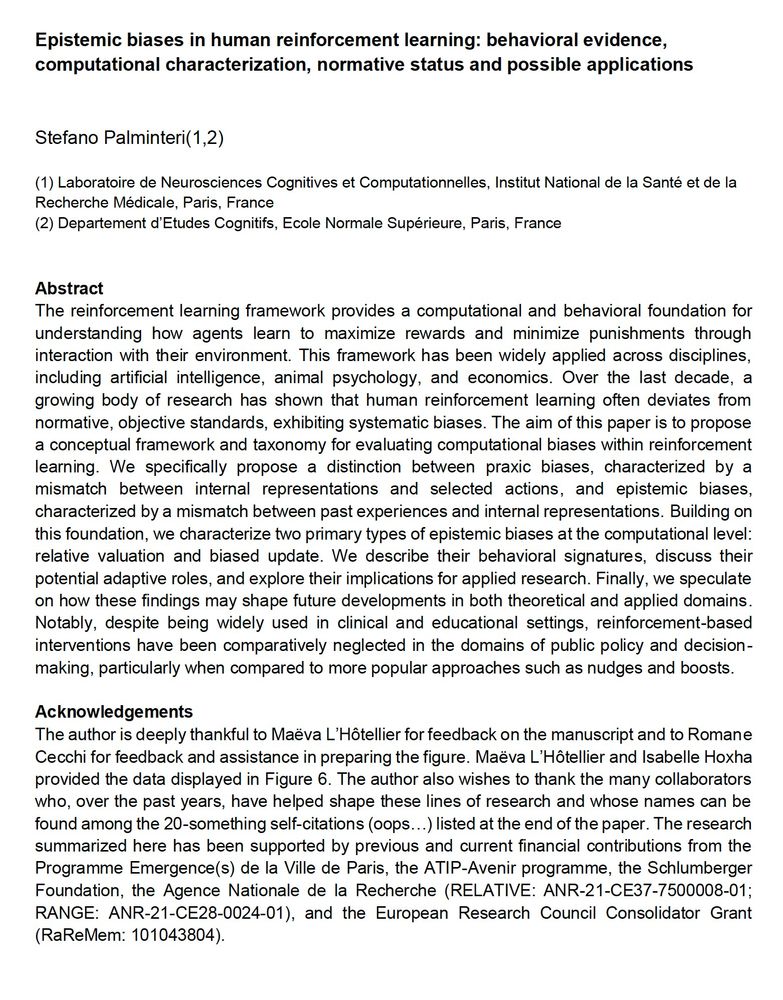

Epistemic biases in human reinforcement learning: behavioral evidence, computational characterization, normative status and possible applications.

A quite self-centered review, but with a broad introduction and conclusions and very cool figures.

A few main takes will follow.

osf.io/preprints/ps...

January 23, 2025 at 3:47 PM

Reposted by Benjamin Henke

The Journal of the American Philosophical Association is now on 🦋 Bluesky 🦋!

We aim to publish papers that break new ground, are from diverse philosophical traditions, and are on topics of wide interest.

Here are our most recent articles!

www.cambridge.org/core/journal...

Follow us for updates.

FirstView articles | Journal of the American Philosophical Association | Cambridge Core

Journal of the American Philosophical Association - Heather D. Battaly

www.cambridge.org

January 18, 2025 at 12:15 PM

Reposted by Benjamin Henke

We're excited to announce the next speaker in our AI Affect series is Amanda McCroskery (Google DeepMind)!

📢 "From Research to Practical Decision-making on Affective AI"

🗓️ January 28th, 3-4:30 PM

📍 Join us in person or online! DM for details.

January 16, 2025 at 11:15 AM

Reposted by Benjamin Henke

Discussion of the #aiglossary in the Daily Nous!

A Philosophically Informed Glossary of Key Concepts in AI - Daily Nous

“Part of explaining what an AI system is doing and knowing why it is doing it turns on knowing what (if anything) the system is representing when reaching an output.” That’s a small excerpt from the e...

dailynous.com

January 14, 2025 at 3:50 PM

Reposted by Benjamin Henke

Inspired by Keir Starmer's speech yesterday to read Kate Vredenburgh's paper on the dangers of using AI in bureaucratic settings www.tandfonline.com/doi/full/10....

AI and bureaucratic discretion

Algorithmic decision-making has the potential to radically reshape policy-making and policy implementation. Many of the moral examinations of AI in government take AI to be a neutral epistemic tool...

www.tandfonline.com

January 14, 2025 at 11:51 AM

Reposted by Benjamin Henke

On one hand, this paper finds adding inference-time compute (like o1 does) improves medical reasoning, which is an important finding suggesting a way to continue to improve AI performance in medicine

On the other hand, scientific illustrations are apparently just anime now arxiv.org/pdf/2501.06458

January 14, 2025 at 5:56 AM

It’s about time.

Hello everyone! We’re finally here! We’re excited to launch this new profile to communicate, share, and promote our work in the #PhilosophyOfTime.

Follow us to stay updated on our latest projects, events, and insights into one of the most fascinating areas of philosophy!

January 13, 2025 at 11:31 PM