I enjoy photography, animation/VFX, working on my renderer, languages and contributing to open source.

Spiraling down the #nixOS road.

After watching this video (www.youtube.com/watch?v=dsl_...), I thought I should also have dinner. Dinner with the penguins and the snowflakes.

Let's see how far I get with this. Not sure I'm ready.

I had a great time and learned a lot of stuff. Thanks to Omar and Habib for the mentoring, and I will definitely continue to contribute to Blender. :)

I'll be around at BlenderCon this week and happy to chat :)

- `a && b` → `a and b`

- `a || b` → `a or b`

- `!a` → `not a`
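The list reads as C-style operators on the left and Python keywords on the right (an assumption, since the post does not name the language). A small Python check that the keywords reproduce the truth tables of `&&`, `||` and `!` on booleans. One difference worth knowing: Python's `and`/`or` short-circuit like `&&`/`||` but return one of their operands, not necessarily a `bool`.

```python
from itertools import product


def truth_table():
    """Return (a, b, a and b, a or b, not a) for every pair of booleans."""
    rows = []
    for a, b in product([False, True], repeat=2):
        rows.append((a, b, a and b, a or b, not a))
    return rows


for row in truth_table():
    print(row)

# `and` / `or` return an operand rather than a strict bool:
print(0 and "x")   # prints 0 (falsy left operand is returned as-is)
print("" or "y")   # prints y (falsy left operand, so the right one is returned)
```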

Not my goofball, but a friend’s favorite 😬

It's a minor one, but a start for many more to come!

I would suggest not quoting a reply without giving any context. Thanks.

Furthermore, it is theft, because the training data has not been deleted and the model has never been taken down. It is being used commercially without the artists' consent.

Looks pretty sick.

Paper: arxiv.org/abs/2412.09548

Check out their project website: research.nvidia.com/labs/dir/mes...

(The video belongs to the original authors and was shortened for upload.)

arxiv.org/pdf/2412.07371

PRM is a photometric stereo scene reconstruction model that builds on InstantMesh with a two-stage optimization: the first stage uses triplanes and volume rendering, and the second stage uses FlexiCubes.
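As a generic illustration of the volume rendering step, here is the standard NeRF-style emission-absorption quadrature for a single ray. This is not PRM's code: the function name and sample values are made up for the example. In a triplane setup, the densities and colors would come from a small decoder reading features sampled from three axis-aligned feature planes; that part is omitted here.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray with the emission-absorption model.

    sigmas: volume density at each sample
    colors: (r, g, b) color at each sample
    deltas: distance from each sample to the next
    Returns the accumulated color and the total opacity of the ray.
    """
    transmittance = 1.0                 # fraction of light not yet absorbed
    out = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # this sample's contribution
        for c in range(3):
            out[c] += weight * color[c]
        transmittance *= 1.0 - alpha             # light surviving past it
    return tuple(out), 1.0 - transmittance


color, opacity = render_ray(
    sigmas=[0.0, 0.5, 5.0],
    colors=[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
print(color, opacity)
```

The zero-density first sample contributes nothing, so its red never shows up in the result; the densest sample dominates once light reaches it.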

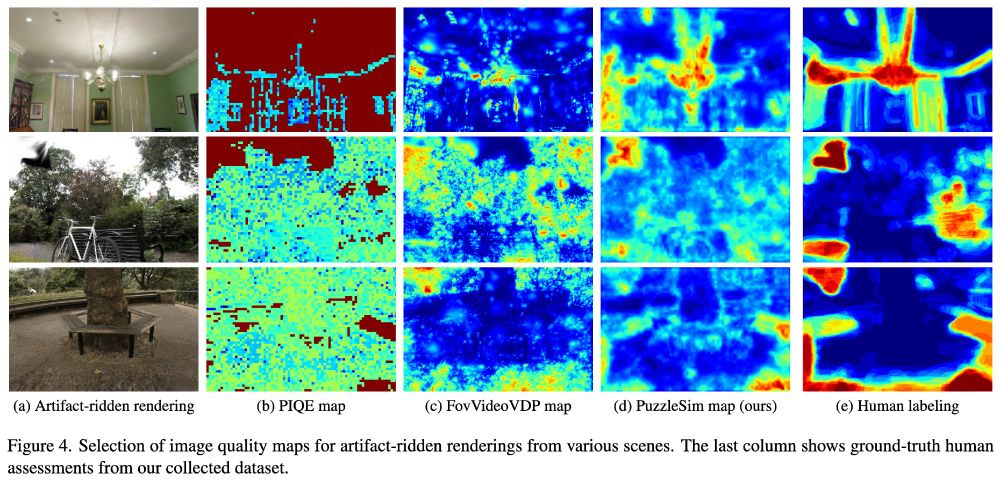

Hermann et al. 2024 :: Puzzle Similarity: A Perceptually-guided No-Reference Metric for Artifact Detection in 3D Scene Reconstructions

arxiv.org/abs/2411.17489

The authors propose a new no-reference metric for detecting artifacts in 3D scene reconstructions, along with an annotated dataset.

arxiv.org/abs/2411.17513

Super-resolution (SR) dynamically guided toward the image regions humans are most sensitive to, using half the FLOPs of prior methods for a visually indistinguishable result.
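The core idea, spending expensive SR only where viewers would notice, can be sketched as per-tile routing. This is a toy under loud assumptions, not the paper's method: local intensity variance stands in for a perceptual sensitivity model, bilinear interpolation stands in for an expensive SR network, and nearest-neighbor is the cheap path. The function names, tile size and threshold are all hypothetical.

```python
def variance(values):
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def nearest(img, sy, sx):
    # Cheap path: copy the closest input pixel.
    h, w = len(img), len(img[0])
    return img[min(int(sy), h - 1)][min(int(sx), w - 1)]


def bilinear(img, sy, sx):
    # Stand-in for the "expensive" SR path: blend the 4 surrounding pixels.
    h, w = len(img), len(img[0])
    y0, x0 = min(int(sy), h - 1), min(int(sx), w - 1)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    fy, fx = sy - y0, sx - x0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def routed_upscale(img, scale=2, tile=4, threshold=100.0):
    """Upscale a grayscale image (list of rows), using the expensive path
    only on tiles whose variance exceeds the threshold.

    Returns the upscaled image and the fraction of tiles that took the
    expensive path (a rough proxy for compute spent).
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * (w * scale) for _ in range(h * scale)]
    tiles = expensive = 0
    for ty in range(0, h, tile):
        for tx in range(0, w, tile):
            block = [img[y][x]
                     for y in range(ty, min(ty + tile, h))
                     for x in range(tx, min(tx + tile, w))]
            tiles += 1
            use_expensive = variance(block) > threshold
            if use_expensive:
                expensive += 1
            sample = bilinear if use_expensive else nearest
            for oy in range(ty * scale, min(ty + tile, h) * scale):
                for ox in range(tx * scale, min(tx + tile, w) * scale):
                    out[oy][ox] = sample(img, oy / scale, ox / scale)
    return out, expensive / tiles


# Flat left half, high-contrast checkerboard right half.
img = [[50 if x < 4 else (0 if (x + y) % 2 else 255) for x in range(8)]
       for y in range(8)]
up, frac = routed_upscale(img)
print(f"{frac:.0%} of tiles took the expensive path")  # 50% of tiles took the expensive path
```

A real system would replace the variance test with a learned or model-based sensitivity map and the bilinear stand-in with an actual SR network; the routing structure is what carries the compute savings.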
