Site: https://ben-eysenbach.github.io/

Lab: https://princeton-rl.github.io/

@yijieisabelliu.bsky.social's new algorithm using RL to provide better skills for planning!

Check out the website for code, videos, and pre-trained models: github.com/isabelliu0/S...

While AI research often conflates reasoning with language models, block-building lets us study how embodied reasoning might emerge from exploration and trial-and-error learning!

Presenting BuilderBench (website: t.co/H7wToslhXG).

Details below 🧵⬇️

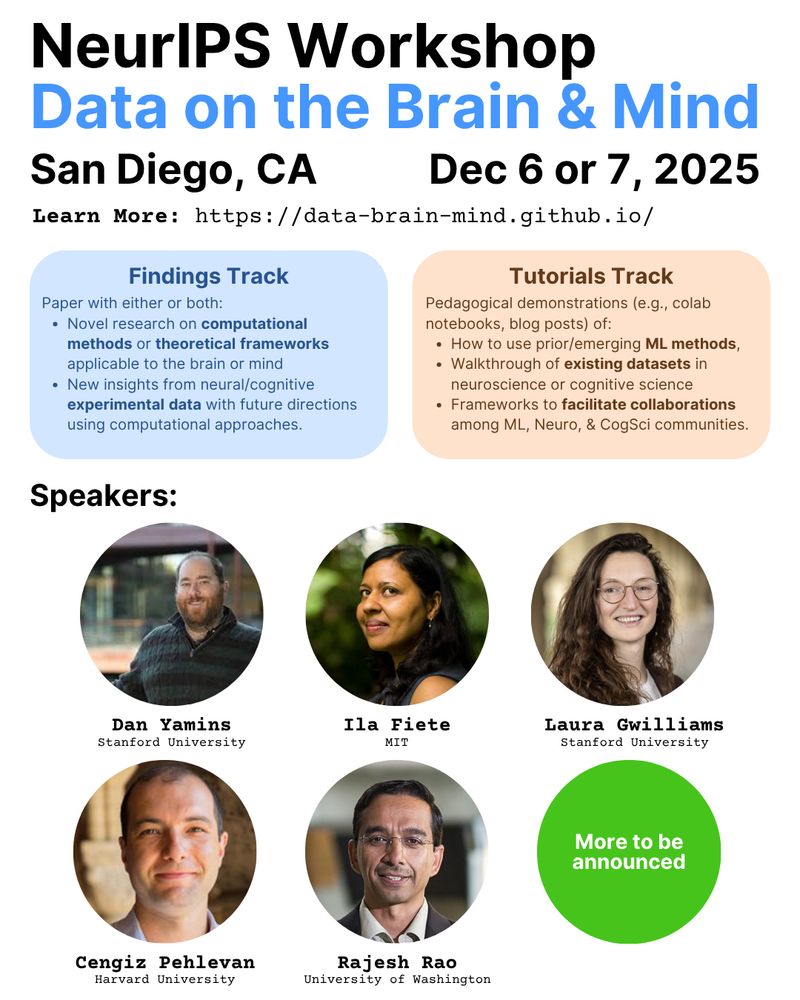

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

This project changed my mind in 2 ways:

1/ Diffusion policies, flow models, and EBMs have become ubiquitous in RL. Turns out normalizing flows (NFs) can perform as well -- no ODEs/SDEs required!

Are NFs fundamentally limited?

Our new paper shows that very very deep networks are surprisingly useful for RL, if you use resnets, layer norm, and self-supervised RL!

Paper, code, videos: wang-kevin3290.github.io/scaling-crl/

Webpage+Paper+Code: wang-kevin3290.github.io/scaling-crl/

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/