Vivek Myers

@vivekmyers.bsky.social

PhD student @Berkeley_AI

reinforcement learning, AI, robotics

reinforcement learning, AI, robotics

Reposted by Vivek Myers

🚨 Deadline Extended 🚨

The submission deadline for the Data on the Brain & Mind Workshop (NeurIPS 2025) has been extended to Sep 8 (AoE)! 🧠✨

We invite you to submit your findings or tutorials via the OpenReview portal:

openreview.net/group?id=Neu...

The submission deadline for the Data on the Brain & Mind Workshop (NeurIPS 2025) has been extended to Sep 8 (AoE)! 🧠✨

We invite you to submit your findings or tutorials via the OpenReview portal:

openreview.net/group?id=Neu...

NeurIPS 2025 Workshop DBM

Welcome to the OpenReview homepage for NeurIPS 2025 Workshop DBM

openreview.net

August 27, 2025 at 7:45 PM

🚨 Deadline Extended 🚨

The submission deadline for the Data on the Brain & Mind Workshop (NeurIPS 2025) has been extended to Sep 8 (AoE)! 🧠✨

We invite you to submit your findings or tutorials via the OpenReview portal:

openreview.net/group?id=Neu...

The submission deadline for the Data on the Brain & Mind Workshop (NeurIPS 2025) has been extended to Sep 8 (AoE)! 🧠✨

We invite you to submit your findings or tutorials via the OpenReview portal:

openreview.net/group?id=Neu...

Reposted by Vivek Myers

📢 10 days left to submit to the Data on the Brain & Mind Workshop at #NeurIPS2025!

📝 Call for:

• Findings (4 or 8 pages)

• Tutorials

If you’re submitting to ICLR or NeurIPS, consider submitting here too—and highlight how to use a cog neuro dataset in our tutorial track!

🔗 data-brain-mind.github.io

📝 Call for:

• Findings (4 or 8 pages)

• Tutorials

If you’re submitting to ICLR or NeurIPS, consider submitting here too—and highlight how to use a cog neuro dataset in our tutorial track!

🔗 data-brain-mind.github.io

Data on the Brain & Mind

data-brain-mind.github.io

August 25, 2025 at 3:43 PM

📢 10 days left to submit to the Data on the Brain & Mind Workshop at #NeurIPS2025!

📝 Call for:

• Findings (4 or 8 pages)

• Tutorials

If you’re submitting to ICLR or NeurIPS, consider submitting here too—and highlight how to use a cog neuro dataset in our tutorial track!

🔗 data-brain-mind.github.io

📝 Call for:

• Findings (4 or 8 pages)

• Tutorials

If you’re submitting to ICLR or NeurIPS, consider submitting here too—and highlight how to use a cog neuro dataset in our tutorial track!

🔗 data-brain-mind.github.io

Reposted by Vivek Myers

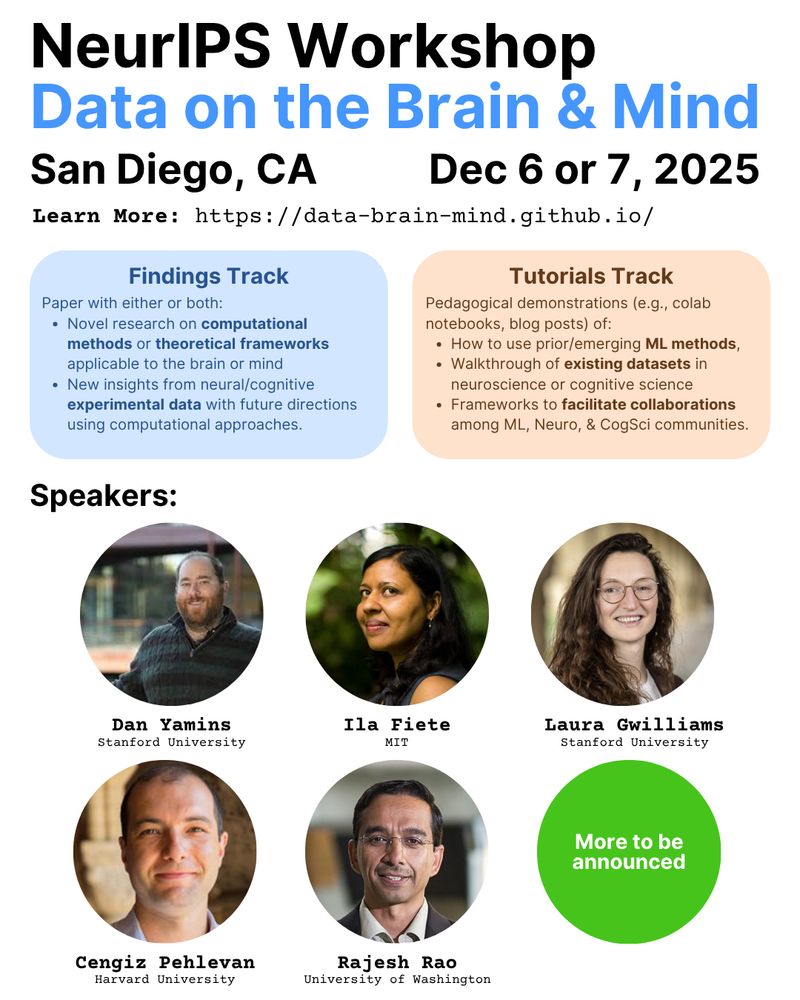

🚨 Excited to announce our #NeurIPS2025 Workshop: Data on the Brain & Mind

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

August 4, 2025 at 3:28 PM

🚨 Excited to announce our #NeurIPS2025 Workshop: Data on the Brain & Mind

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

📣 Call for: Findings (4- or 8-page) + Tutorials tracks

🎙️ Speakers include @dyamins.bsky.social @lauragwilliams.bsky.social @cpehlevan.bsky.social

🌐 Learn more: data-brain-mind.github.io

Reposted by Vivek Myers

This is an excellent and very clear piece from Sergey Levine about the strengths and limitations of Large Language models.

sergeylevine.substack.com/p/language-m...

sergeylevine.substack.com/p/language-m...

Language Models in Plato's Cave

Why language models succeeded where video models failed, and what that teaches us about AI

sergeylevine.substack.com

June 12, 2025 at 4:30 PM

This is an excellent and very clear piece from Sergey Levine about the strengths and limitations of Large Language models.

sergeylevine.substack.com/p/language-m...

sergeylevine.substack.com/p/language-m...

Reposted by Vivek Myers

Normalizing Flows (NFs) check all boxes for RL: exact likelihoods (imitation learning), efficient sampling (real-time control), and variational inference (Q-learning)! Yet they are overlooked over more expensive and less flexible contemporaries like diffusion models.

Are NFs fundamentally limited?

Are NFs fundamentally limited?

June 5, 2025 at 5:06 PM

Normalizing Flows (NFs) check all boxes for RL: exact likelihoods (imitation learning), efficient sampling (real-time control), and variational inference (Q-learning)! Yet they are overlooked over more expensive and less flexible contemporaries like diffusion models.

Are NFs fundamentally limited?

Are NFs fundamentally limited?

How can agents trained to reach (temporally) nearby goals generalize to attain distant goals?

Come to our #ICLR2025 poster now to discuss 𝘩𝘰𝘳𝘪𝘻𝘰𝘯 𝘨𝘦𝘯𝘦𝘳𝘢𝘭𝘪𝘻𝘢𝘵𝘪𝘰𝘯!

w/ @crji.bsky.social and @ben-eysenbach.bsky.social

📍Hall 3 + Hall 2B #637

Come to our #ICLR2025 poster now to discuss 𝘩𝘰𝘳𝘪𝘻𝘰𝘯 𝘨𝘦𝘯𝘦𝘳𝘢𝘭𝘪𝘻𝘢𝘵𝘪𝘰𝘯!

w/ @crji.bsky.social and @ben-eysenbach.bsky.social

📍Hall 3 + Hall 2B #637

April 26, 2025 at 2:12 AM

How can agents trained to reach (temporally) nearby goals generalize to attain distant goals?

Come to our #ICLR2025 poster now to discuss 𝘩𝘰𝘳𝘪𝘻𝘰𝘯 𝘨𝘦𝘯𝘦𝘳𝘢𝘭𝘪𝘻𝘢𝘵𝘪𝘰𝘯!

w/ @crji.bsky.social and @ben-eysenbach.bsky.social

📍Hall 3 + Hall 2B #637

Come to our #ICLR2025 poster now to discuss 𝘩𝘰𝘳𝘪𝘻𝘰𝘯 𝘨𝘦𝘯𝘦𝘳𝘢𝘭𝘪𝘻𝘢𝘵𝘪𝘰𝘯!

w/ @crji.bsky.social and @ben-eysenbach.bsky.social

📍Hall 3 + Hall 2B #637

Reposted by Vivek Myers

🚨Our new #ICLR2025 paper presents a unified framework for intrinsic motivation and reward shaping: they signal the value of the RL agent’s state🤖=external state🌎+past experience🧠. Rewards based on potentials over the learning agent’s state provably avoid reward hacking!🧵

March 26, 2025 at 12:05 AM

🚨Our new #ICLR2025 paper presents a unified framework for intrinsic motivation and reward shaping: they signal the value of the RL agent’s state🤖=external state🌎+past experience🧠. Rewards based on potentials over the learning agent’s state provably avoid reward hacking!🧵

Current robot learning methods are good at imitating tasks seen during training, but struggle to compose behaviors in new ways. When training imitation policies, we found something surprising—using temporally-aligned task representations enabled compositional generalization. 1/

February 14, 2025 at 1:39 AM

Current robot learning methods are good at imitating tasks seen during training, but struggle to compose behaviors in new ways. When training imitation policies, we found something surprising—using temporally-aligned task representations enabled compositional generalization. 1/

Reposted by Vivek Myers

Excited to share new work led by @vivekmyers.bsky.social and @crji.bsky.social that proves you can learn to reach distant goals by solely training on nearby goals. The key idea is a new form of invariance. This invariance implies generalization w.r.t. the horizon.

Reinforcement learning agents should be able to improve upon behaviors seen during training.

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

February 6, 2025 at 1:13 AM

Excited to share new work led by @vivekmyers.bsky.social and @crji.bsky.social that proves you can learn to reach distant goals by solely training on nearby goals. The key idea is a new form of invariance. This invariance implies generalization w.r.t. the horizon.

Reposted by Vivek Myers

Want to see an agent carry out long horizons tasks when only trained on short horizon trajectories?

We formalize and demonstrate this notion of *horizon generalization* in RL.

Check out our website! horizon-generalization.github.io

We formalize and demonstrate this notion of *horizon generalization* in RL.

Check out our website! horizon-generalization.github.io

February 4, 2025 at 8:50 PM

Want to see an agent carry out long horizons tasks when only trained on short horizon trajectories?

We formalize and demonstrate this notion of *horizon generalization* in RL.

Check out our website! horizon-generalization.github.io

We formalize and demonstrate this notion of *horizon generalization* in RL.

Check out our website! horizon-generalization.github.io

Reinforcement learning agents should be able to improve upon behaviors seen during training.

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

February 4, 2025 at 8:37 PM

Reinforcement learning agents should be able to improve upon behaviors seen during training.

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

In practice, RL agents often struggle to generalize to new long-horizon behaviors.

Our new paper studies *horizon generalization*, the degree to which RL algorithms generalize to reaching distant goals. 1/

“AI Alignment" is typically seen as the problem of instilling human values in agents. But the very notion of human values is nebulous—humans have distinct, contradictory preferences which may change. Really, we should ensure agents *empower* humans to best achieve their own goals. 1/

January 22, 2025 at 2:17 AM

“AI Alignment" is typically seen as the problem of instilling human values in agents. But the very notion of human values is nebulous—humans have distinct, contradictory preferences which may change. Really, we should ensure agents *empower* humans to best achieve their own goals. 1/

Reposted by Vivek Myers

When RLHFed models engage in “reward hacking” it can lead to unsafe/unwanted behavior. But there isn’t a good formal definition of what this means! Our new paper provides a definition AND a method that provably prevents reward hacking in realistic settings, including RLHF. 🧵

December 19, 2024 at 5:17 PM

When RLHFed models engage in “reward hacking” it can lead to unsafe/unwanted behavior. But there isn’t a good formal definition of what this means! Our new paper provides a definition AND a method that provably prevents reward hacking in realistic settings, including RLHF. 🧵

When is interpolation in a learned representation space meaningful? Come to our NeurIPS poster today at 4:30 to see how time-contrastive learning can provably enable inference (such as subgoal planning) through warped linear interpolation!

December 11, 2024 at 10:57 PM

When is interpolation in a learned representation space meaningful? Come to our NeurIPS poster today at 4:30 to see how time-contrastive learning can provably enable inference (such as subgoal planning) through warped linear interpolation!