Belongie Lab

@belongielab.org

Computer Vision & Machine Learning

📍 Pioneer Centre for AI, University of Copenhagen

🌐 https://www.belongielab.org

Pinned

Belongie Lab

@belongielab.org

· Nov 17

Belongie Lab - Home

www.belongielab.org

Logging on! 🧑‍💻🦋 We're the Belongie Lab led by @sergebelongie.bsky.social. We study Computer Vision and Machine Learning, located at the University of Copenhagen and Pioneer Centre for AI. Follow along to hear about our research past and present! www.belongielab.org

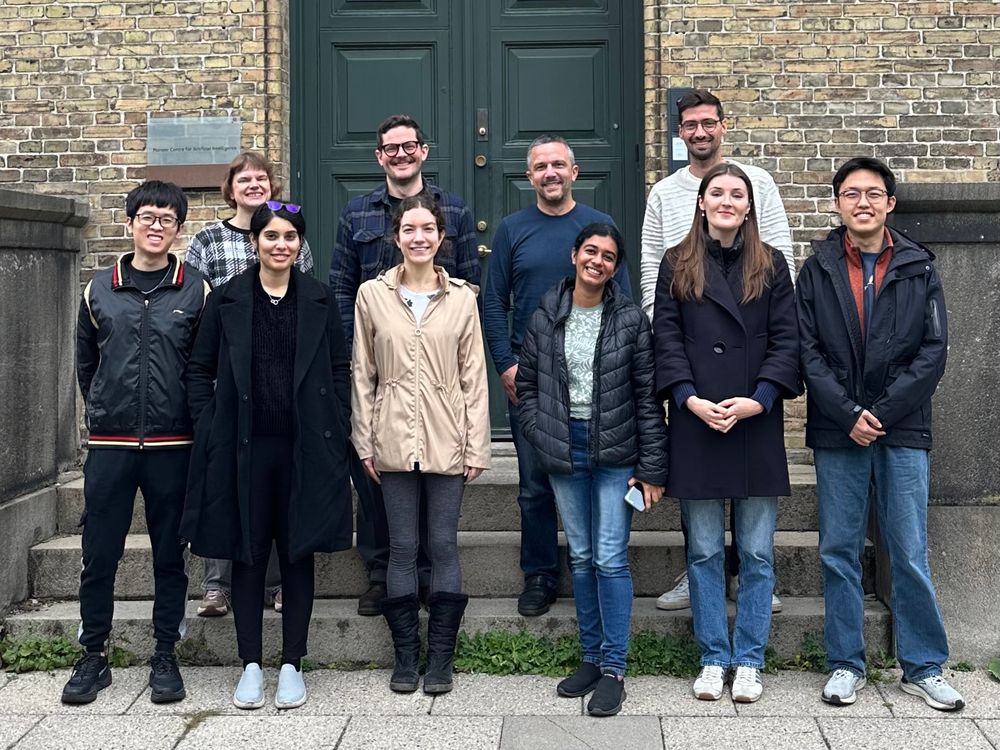

Group photo before the start of Efterårsferie 📸🍂

Back row: Stella, Vésteinn, Serge, Nico

Front row: Jiaang, Srishti, Lucia, Silpa, Noah, Chengzu

Not pictured: Ioannis, Peter, Sebastian, Marco, Zhaochong, Tijmen

October 10, 2025 at 3:35 PM

Today we welcomed @chinglam.bsky.social back to @aicentre.dk for a visit and lunch talk on her latest work as a PhD student at @csail.mit.edu on multimodal learning: “Seeing Beyond the Cave: Asymmetric Views of Representation”

www.aicentre.dk/events/lunch...

August 13, 2025 at 10:53 AM

Excited to share our new work at @acm.org Transactions on Graphics/@acmsiggraph.bsky.social! We use coded noise to add an invisible watermark to lighting that helps detect fake or manipulated video. (1/2)

July 30, 2025 at 3:56 PM

Reposted by Belongie Lab

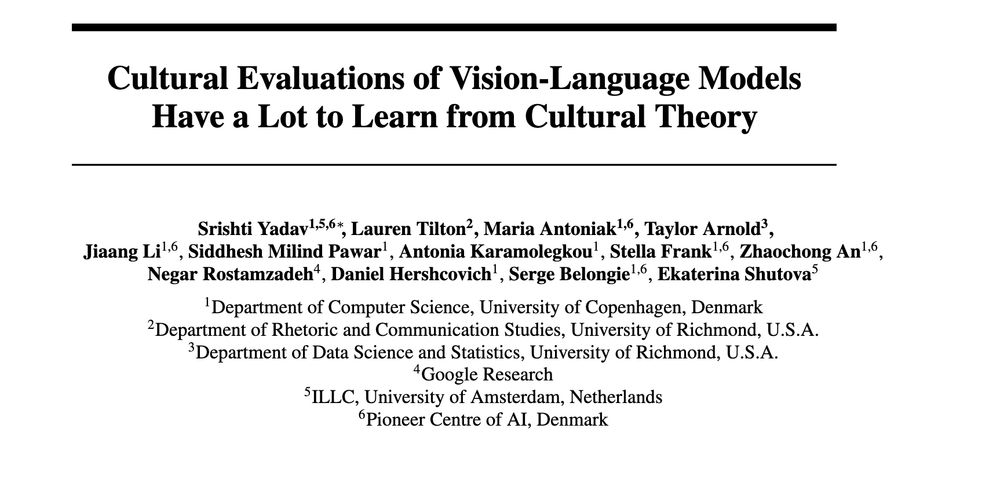

I am excited to announce our latest work 🎉 "Cultural Evaluations of Vision-Language Models Have a Lot to Learn from Cultural Theory". We review recent works on culture in VLMs and argue for deeper grounding in cultural theory to enable more inclusive evaluations.

Paper 🔗: arxiv.org/pdf/2505.22793

June 2, 2025 at 10:36 AM

Reposted by Belongie Lab

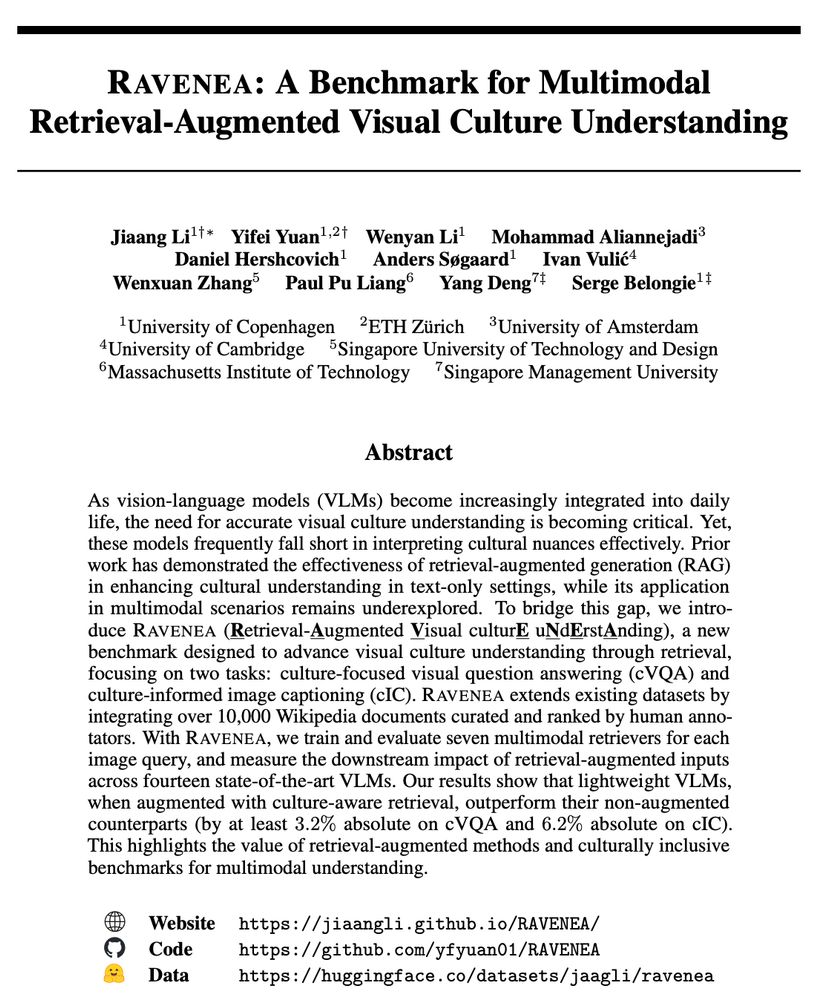

🚀New Preprint🚀

Can Multimodal Retrieval Enhance Cultural Awareness in Vision-Language Models?

Excited to introduce RAVENEA, a new benchmark aimed at evaluating cultural understanding in VLMs through RAG.

arxiv.org/abs/2505.14462

More details:👇

May 23, 2025 at 5:04 PM

This morning at P1, a handful of lucky lab members got to see the telescope while centre secretary Björg had the dome open for a building tour 🔭 (1/7)

May 9, 2025 at 10:30 PM

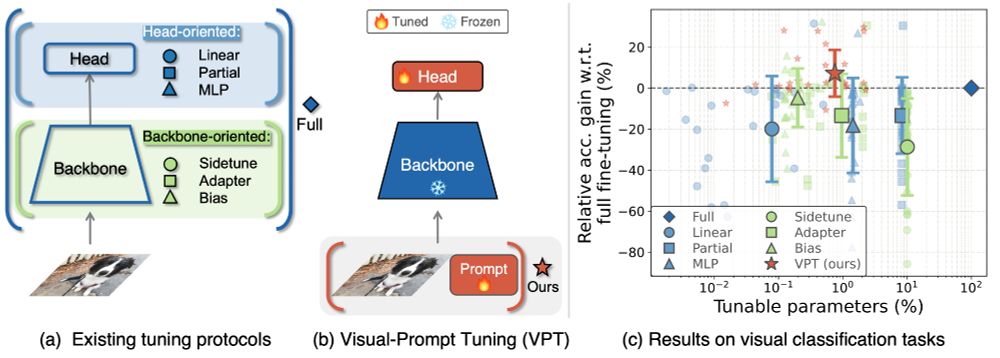

Time to revive the Belongie Lab Throwback Thursday tradition we had started in the before times 🙌 Today's #tbt is Jia et al. "Visual Prompt Tuning," from ECCV 2022 (1/5)

May 8, 2025 at 6:17 AM

Thank you Prof. Derpanis!

Feels like @belongielab.org just hit 100k citations yesterday, but that curve’s still climbing strong and now they’re barreling toward 200k. Let’s go! 💪🫡

May 7, 2025 at 7:15 PM

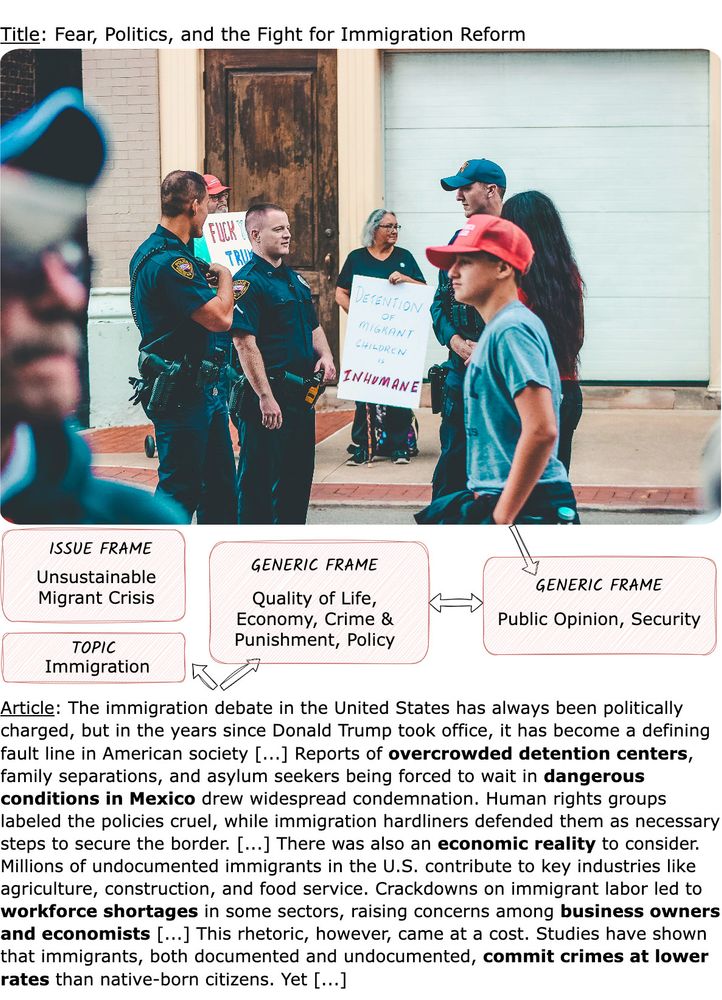

New study with @iaugenstein.bsky.social’s group analyzing the interplay between photos and text in the news

🚨New pre-print 🚨

News articles often convey different things in text vs. image. Recent work in computational framing analysis has analysed the article text but the corresponding images in those articles have been overlooked.

We propose multi-modal framing analysis of news: arxiv.org/abs/2503.20960

April 7, 2025 at 9:38 AM

Reposted by Belongie Lab

Thrilled to announce "Multimodality Helps Few-shot 3D Point Cloud Semantic Segmentation" is accepted as a Spotlight (5%) at #ICLR2025!

Our model MM-FSS leverages 3D, 2D, & text modalities for robust few-shot 3D segmentation—all without extra labeling cost. 🤩

arxiv.org/pdf/2410.22489

More details👇

February 11, 2025 at 5:49 PM

Reposted by Belongie Lab

Recordings of the SSL4EO-2024 summer school are now released!

This blog post summarizes what has been covered:

langnico.github.io/posts/SSL4EO...

Recordings: www.youtube.com/playlist?lis...

Course website: ankitkariryaa.github.io/ssl4eo/

[1/3]

January 24, 2025 at 3:32 PM

SCIA 2025 submission deadline extended to Feb. 17

SCIA 2025

Scandinavian Conference on Image Analysis 2025

scia2025.org

January 24, 2025 at 9:58 AM

Learn more about Srishti’s research at www.srishti.dev 🙌

Meet Srishti Yadav, an ELLIS #PhD Student at 🇩🇰 Uni Copenhagen & 🇳🇱 Uni Amsterdam. Passionate about #AI & society, she explores culturally aware & inclusive AI models. Read her advice for young scientists & learn why women's visibility in AI/ML is crucial. #WomenInELLIS

January 15, 2025 at 11:15 AM

From San Diego to New York to Copenhagen, wishing you Happy Holidays!🎄

December 21, 2024 at 11:20 AM

With @neuripsconf.bsky.social right around the corner, we’re excited to be presenting our work soon! Here’s an overview

(1/5)

December 3, 2024 at 11:43 AM

Here’s a starter pack with members of our lab that have joined Bluesky

Belongie Lab

Join the conversation

go.bsky.app

November 25, 2024 at 10:42 AM

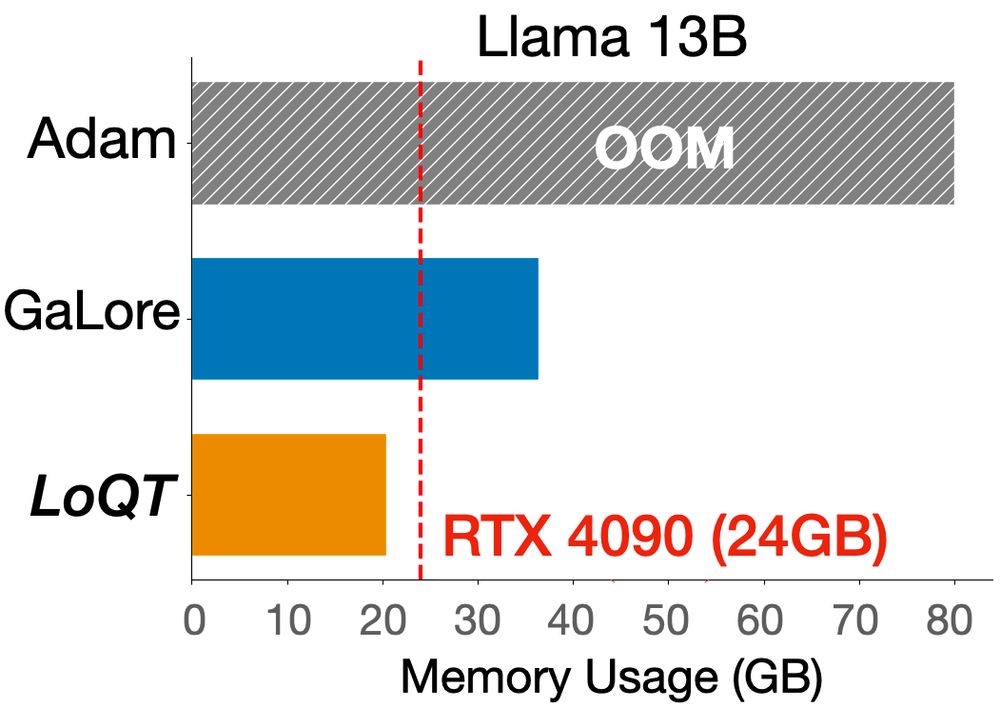

Reposted by Belongie Lab

Ever wanted to train your own 13B Llama2 model from scratch on a 24GB GPU? Or fine-tune one without compromising performance compared to full training? 🦙

You now can, with LoQT: Low-Rank Adapters for Quantized Pretraining! arxiv.org/abs/2405.16528

1/4

November 18, 2024 at 9:29 AM

Reposted by Belongie Lab

🤔Do Vision and Language Models Share Concepts? 🚀

We present an empirical evaluation and find that language models partially converge towards representations isomorphic to those of vision models. #EMNLP

📃 direct.mit.edu/tacl/article...

November 19, 2024 at 12:48 PM

Reposted by Belongie Lab

Join us for the 𝗣𝗿𝗲-𝗡𝗲𝘂𝗿𝗜𝗣𝗦 𝗣𝗼𝘀𝘁𝗲𝗿 𝗦𝗲𝘀𝘀𝗶𝗼𝗻 in Copenhagen!

🗓️ 𝗪𝗵𝗲𝗻: 16:00–18:00, Nov. 22, 2024

📍 𝗪𝗵𝗲𝗿𝗲: Entrance Hall, Gefion, Øster Voldgade 10, 1350 København K.

Present or explore European contributions to NeurIPS 2024 and connect with colleagues.

👉 𝗜𝗻𝗳𝗼 & 𝘀𝗶𝗴𝗻-𝘂𝗽: www.aicentre.dk/events/pre-n...

November 19, 2024 at 8:58 AM

Reposted by Belongie Lab

I'm recruiting 1-2 PhD students to work with me at the University of Colorado Boulder! Looking for creative students with interests in #NLP and #CulturalAnalytics.

Boulder is a lovely college town 30 minutes from Denver and 1 hour from Rocky Mountain National Park 😎

Apply by December 15th!

November 19, 2024 at 10:38 AM

Logging on! 🧑‍💻🦋 We're the Belongie Lab led by @sergebelongie.bsky.social. We study Computer Vision and Machine Learning, located at the University of Copenhagen and Pioneer Centre for AI. Follow along to hear about our research past and present! www.belongielab.org

November 17, 2024 at 12:36 PM
