I finally gave in and made a nice blog post about my most recent paper. This was a surprising amount of work, so please be nice and go read it!

Some tuning is still needed, but we are seeing results roughly on par with #PQN.

If you want to test out #REPPO (Atari is not integrated due to issues with envpool and the JAX version), check out github.com/cvoelcker/re...

#reinforcementlearning

* Replicable Reinforcement Learning with Linear Function Approximation

* Relative Entropy Pathwise Policy Optimization

We already posted about the second one (below); I'll get to the first one in a bit.

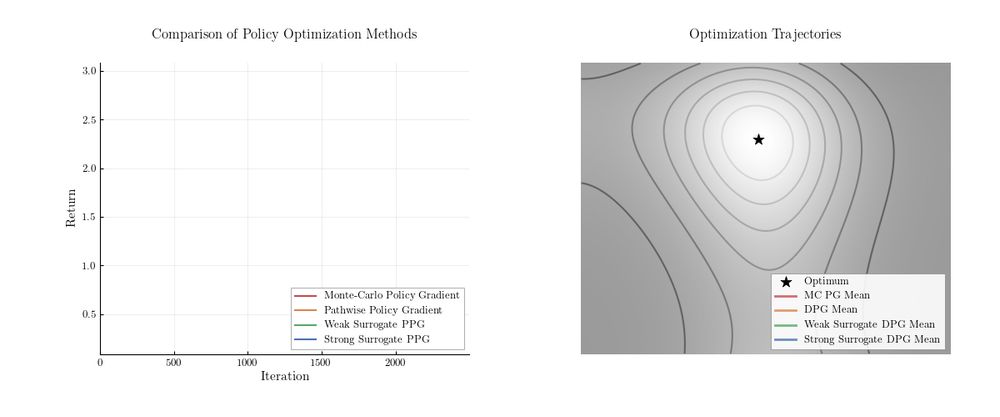

Off-policy #RL (e.g. #TD3) trains the policy by differentiating a critic, while on-policy #RL (e.g. #PPO) uses Monte-Carlo gradient estimates. But is that necessary? Turns out: no! We show how to get critic gradients on-policy. arxiv.org/abs/2507.11019
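To make the distinction concrete, here is a toy sketch of the two gradient estimators. It is not the algorithm from the paper. It assumes a one-dimensional Gaussian policy with mean `mu` and a made-up, known quadratic critic (`toy_critic`); all names are hypothetical. The score-function estimator is the Monte-Carlo style used by policy-gradient methods, and the pathwise estimator differentiates the critic through the sampled action.

```python
import random
import statistics


def toy_critic(action):
    # Hypothetical critic Q(a); a real critic would be a learned network.
    return -(action - 2.0) ** 2


def toy_critic_grad(action):
    # Analytic dQ/da for the toy critic above.
    return -2.0 * (action - 2.0)


def gradient_samples(mu, sigma, num_samples, seed=0):
    """Per-sample estimates of d/dmu E[Q(a)] for a ~ N(mu, sigma^2)."""
    rng = random.Random(seed)
    score_fn, pathwise = [], []
    for _ in range(num_samples):
        eps = rng.gauss(0.0, 1.0)
        action = mu + sigma * eps  # reparameterized sample
        # Score-function estimate: Q(a) * d log pi(a) / d mu
        score_fn.append(toy_critic(action) * (action - mu) / sigma**2)
        # Pathwise estimate: dQ/da * da/dmu, where da/dmu = 1
        pathwise.append(toy_critic_grad(action))
    return score_fn, pathwise


score_fn, pathwise = gradient_samples(mu=0.0, sigma=1.0, num_samples=20000)
print("score-function:", statistics.mean(score_fn), statistics.variance(score_fn))
print("pathwise:      ", statistics.mean(pathwise), statistics.variance(pathwise))
```

In this toy setting both estimators target the same gradient, -2 * (mu - 2) = 4 at mu = 0, but the pathwise samples have much lower variance.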

arxiv.org/abs/2403.17805
