https://athrvkk.github.io/

Unsurprisingly, GPT-4-based evaluators show the highest agreement with human judgments across settings 🌟

Using ensembles of multiple metrics is a promising avenue⭐️

Instruction tuning & mode-seeking decoding help reduce hallucinations📈

(5/n)
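To make "ensembles of multiple metrics" concrete, here is a minimal sketch of one simple way to combine detectors: min-max normalize each metric's scores over the evaluated examples, then average them per example. This is an assumption for illustration, not necessarily how the paper builds its ensembles. The metric names are hypothetical, and the sketch also assumes every metric is oriented so that higher means "more faithful".

```python
from statistics import mean


def min_max_normalize(scores):
    """Rescale a list of scores to [0, 1]; a constant list maps to 0.5."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.5 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def ensemble_scores(metric_scores):
    """Average per-example normalized scores across metrics.

    metric_scores: dict mapping a (hypothetical) metric name to a list of
    scores, one per example. Assumes higher = more faithful for every metric.
    """
    normalized = [min_max_normalize(s) for s in metric_scores.values()]
    n = len(normalized[0])
    if any(len(s) != n for s in normalized):
        raise ValueError("all metrics must score the same examples")
    # zip(*normalized) groups the k-th score of every metric together
    return [mean(col) for col in zip(*normalized)]


scores = {
    "metric_a": [0.9, 0.2, 0.6],  # e.g. a probability-style score
    "metric_b": [3.0, 1.0, 2.0],  # e.g. a 1-to-3 rating on another scale
}
combined = ensemble_scores(scores)
```

Normalizing first keeps a metric with a wider numeric range from dominating the average. Rank averaging or a learned weighting would be reasonable alternatives.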

⚠️Many existing metrics show poor alignment with human judgments

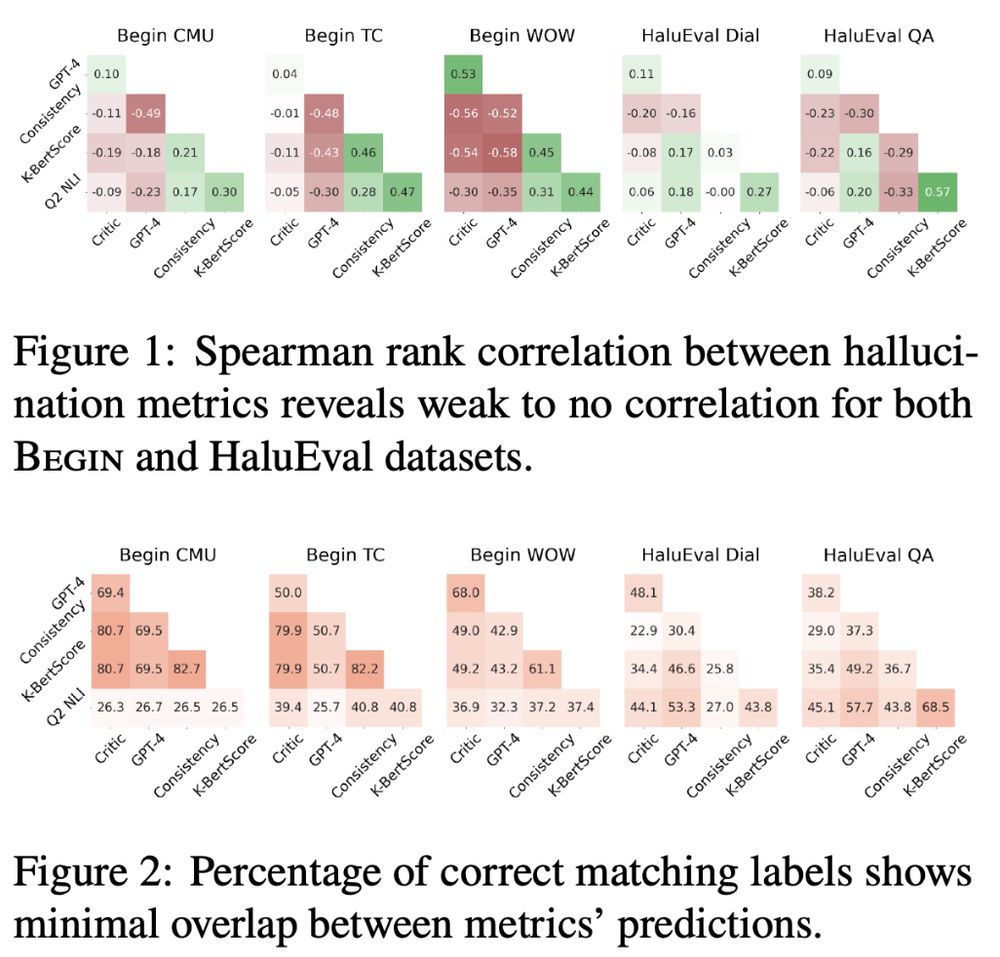

⚠️The inter-metric correlation is also weak

⚠️They show limited generalization across datasets, tasks, and models

⚠️They do not show consistent improvement with larger models

(4/n)
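"Alignment with human judgments" and "inter-metric correlation" are typically measured with a rank correlation between score lists. Below is a minimal sketch using Spearman's rho, implemented from scratch with tie-averaged ranks. The thread does not say which correlation the paper reports, so Spearman is an assumption here. The metric names and toy scores are hypothetical.

```python
from itertools import combinations
from statistics import mean


def rank(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman's rho = Pearson correlation of the ranks."""
    return pearson(rank(x), rank(y))


def alignment_report(metric_scores, human):
    """Correlate each metric with human labels, and each pair of metrics."""
    with_humans = {name: spearman(s, human) for name, s in metric_scores.items()}
    inter_metric = {
        (a, b): spearman(metric_scores[a], metric_scores[b])
        for a, b in combinations(metric_scores, 2)
    }
    return with_humans, inter_metric


human = [1, 2, 3, 4]  # toy human faithfulness ratings
metrics = {"metric_a": [0.1, 0.4, 0.3, 0.9], "metric_b": [4, 3, 2, 1]}
with_humans, inter_metric = alignment_report(metrics, human)
```

On this toy data `metric_a` tracks the human ranking fairly well, `metric_b` is perfectly anti-correlated, and the two metrics disagree with each other. Low or inconsistent values of exactly these quantities are what the findings above describe.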

Our latest work questions the generalizability of hallucination detection metrics across tasks, datasets, model sizes, training methods, and decoding strategies 💥

arxiv.org/abs/2504.18114

(1/n)