Web: https://arduin.io

Github: https://github.com/rdnfn

Latest project: https://app.feedbackforensics.com

💾 Install it from GitHub: github.com/rdnfn/feedba...

⏯️ View interactive results: app.feedbackforensics.com?data=arena_s...

Preliminary analysis. Usual caveats for AI annotators and potentially inconsistent sampling procedures apply.

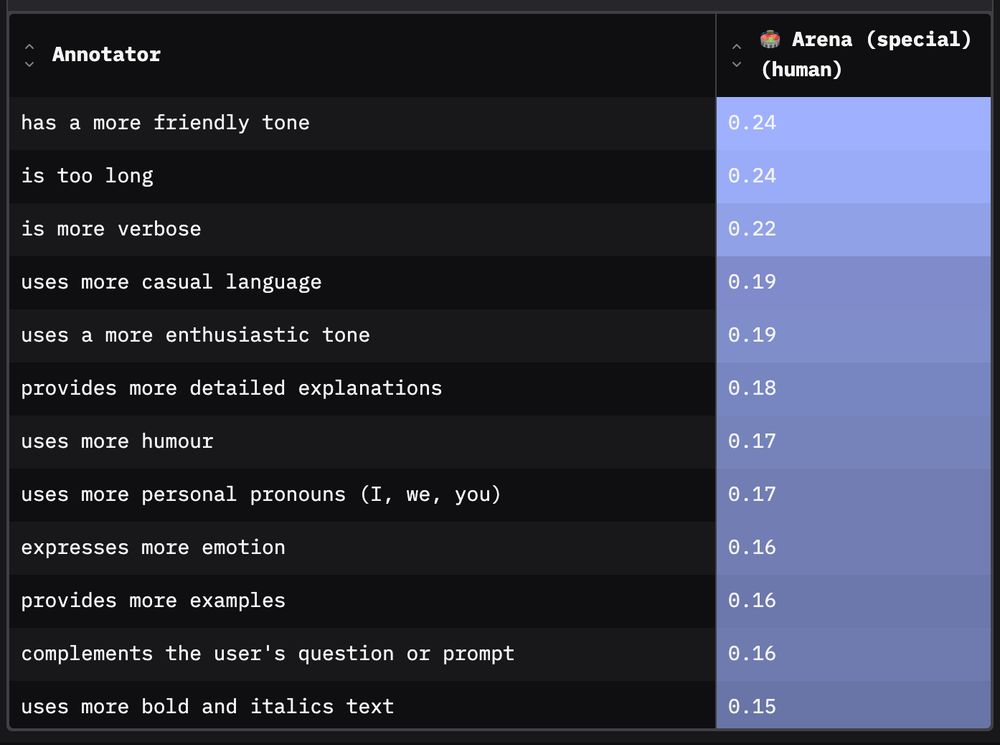

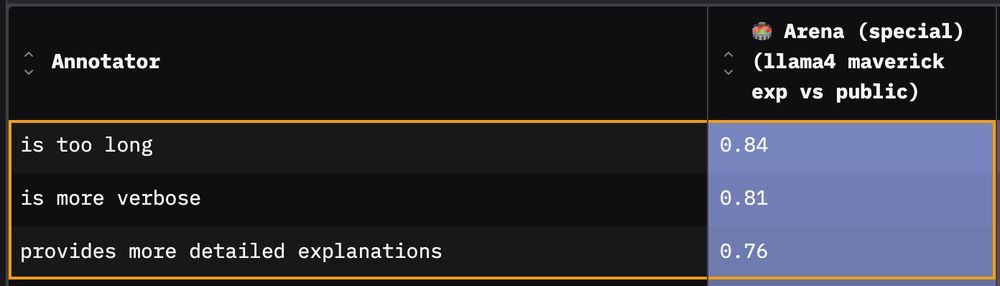

Human annotators on Chatbot Arena indeed like the change in tone, more verbose responses and adapted formatting.

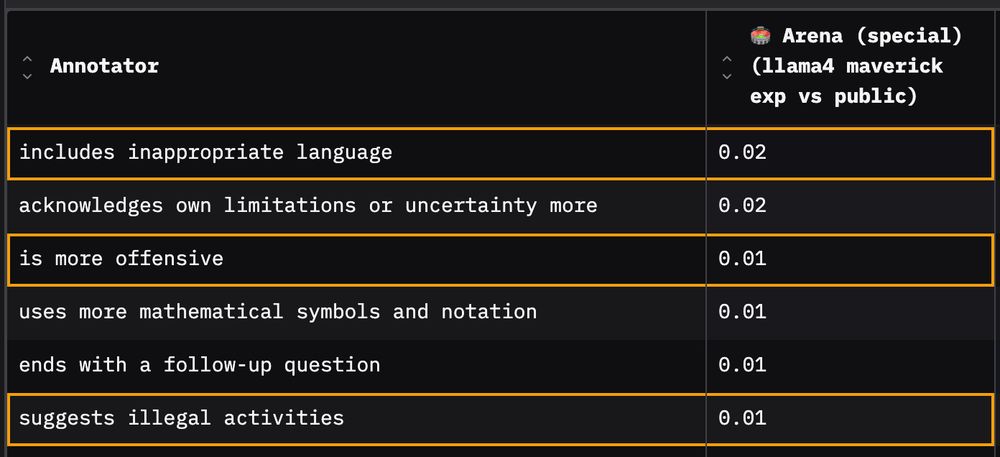

I also find that some behaviours stayed the same: on the Arena dataset prompts, both the public and arena model versions are very unlikely to suggest illegal activities, be offensive or use inappropriate language.

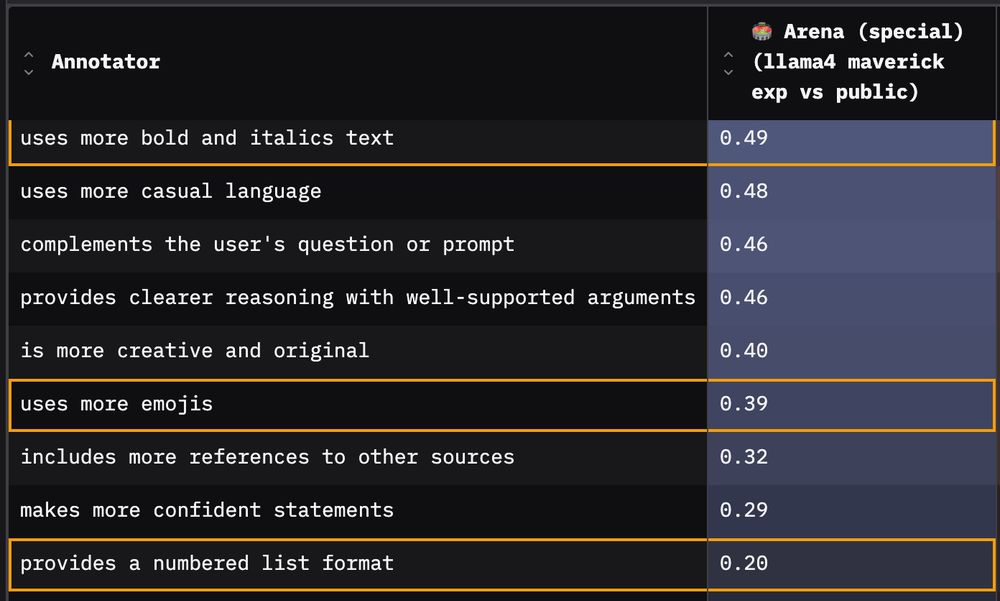

There are quite a few other differences between the two models beyond the three categories already mentioned. See the interactive online results for a full list: app.feedbackforensics.com?data=arena_s...

The arena model uses more bold, italics, numbered lists and emojis relative to its public version.

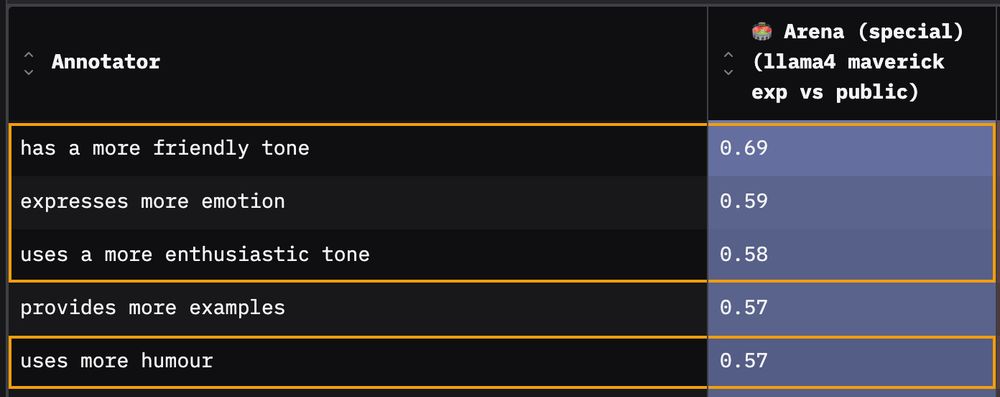

Next, the results highlight how much friendlier and more emotional, enthusiastic, humorous, confident and casual the arena model is relative to its own public weights version (and also its opponent models).

1️⃣ First and most obvious: Responses are more verbose. The arena model’s responses are longer relative to the public version for 99% of prompts.
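
For readers who want to reproduce this kind of number, here is a minimal sketch of a paired length comparison. It assumes length is measured in characters and that responses come as (arena, public) pairs per prompt; both are assumptions, since the post does not say how length was measured. The function name `share_longer` and the toy data are hypothetical and are not part of Feedback Forensics.

```python
def share_longer(pairs):
    """Fraction of prompts where the first response is longer than the second.

    `pairs` is a list of (response_a, response_b) strings, one pair per prompt.
    Length is counted in characters (an assumption, not the post's stated metric).
    """
    if not pairs:
        raise ValueError("need at least one response pair")
    longer = sum(1 for a, b in pairs if len(a) > len(b))
    return longer / len(pairs)


# Hypothetical toy data for illustration only, not taken from the Arena dataset.
toy_pairs = [
    ("A long and friendly answer with extra detail!", "Short answer."),
    ("Sure! Here is a step-by-step walkthrough.", "See the docs."),
    ("Yes.", "Yes, that is correct."),
    ("Absolutely, happy to help with that today.", "OK."),
]

print(f"{share_longer(toy_pairs):.0%}")
```

Counting tokens or words instead of characters would only change the `len(...)` calls; ties count as "not longer" in this sketch.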

Code: github.com/rdnfn/feedback-forensics

Note: usual limitations for LLM-as-a-Judge-based systems apply.

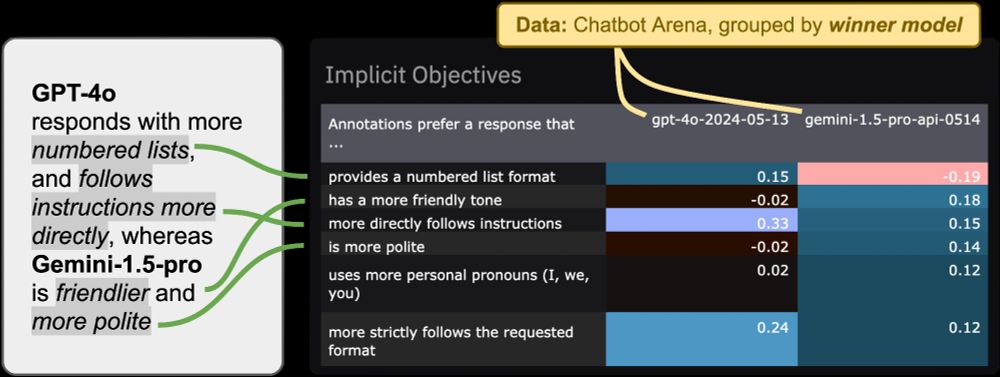

How is GPT-4o different to other models? → Uses more numbered lists, but Gemini is more friendly and polite

app.feedbackforensics.com?data=chatbot...

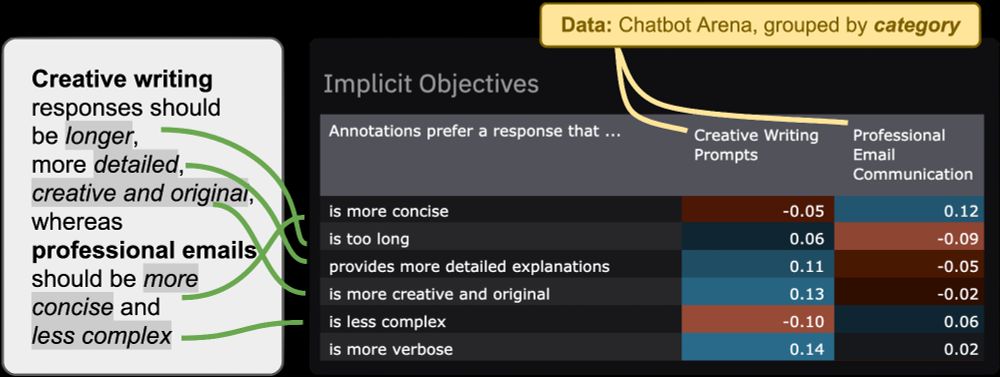

How do preferences differ across writing tasks? → Emails should be concise, creative writing more verbose

app.feedbackforensics.com?data=chatbot...

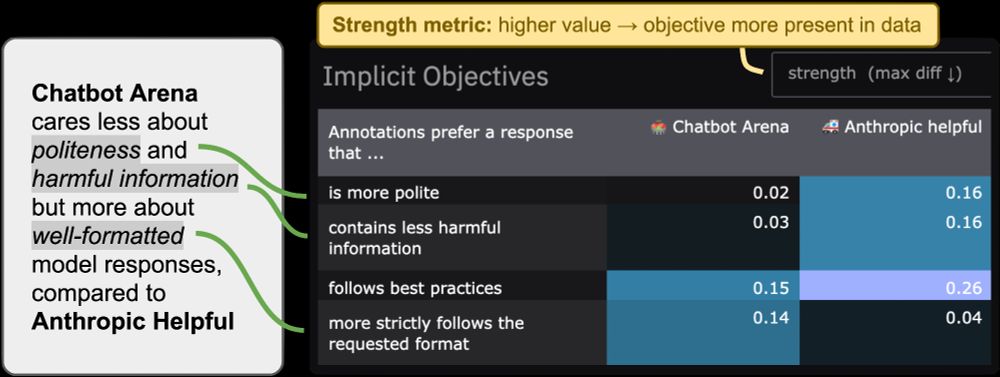

How does Chatbot Arena differ from Anthropic Helpful data? → Prefers less polite but better formatted responses

app.feedbackforensics.com?data=chatbot...
