We are working on improving the system and will release a tech report in a few months.

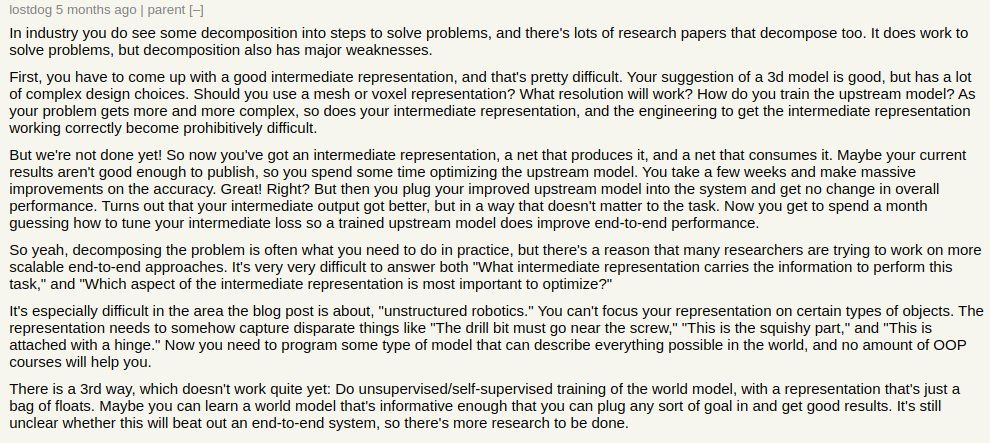

I love this Hacker News comment that I saw on Twitter a few years ago.

ControlNets: github.com/lllyasviel/C...
