https://amr-farahat.github.io/

www.biorxiv.org/content/10.1...

It is also important to assess a model's strengths and weaknesses with several measures, not only a single one such as prediction accuracy.

Our results also emphasize the importance of rigorous controls when using black-box models like DNNs in neural modeling. They can show what makes a good neural model and help us generate hypotheses about brain computations.

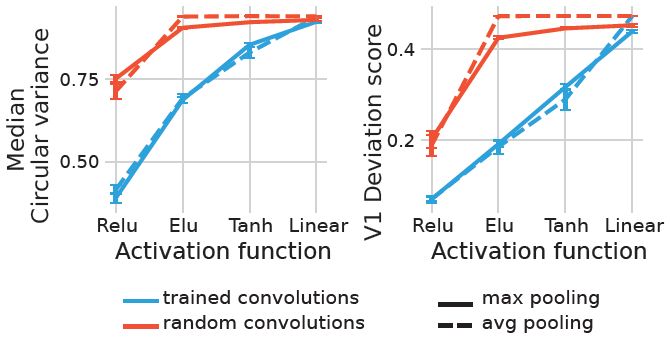

Our results suggest that the architectural bias of CNNs is key to predicting neural responses in the early visual cortex. This aligns with results in computer vision showing that random convolutions suffice for several visual tasks.

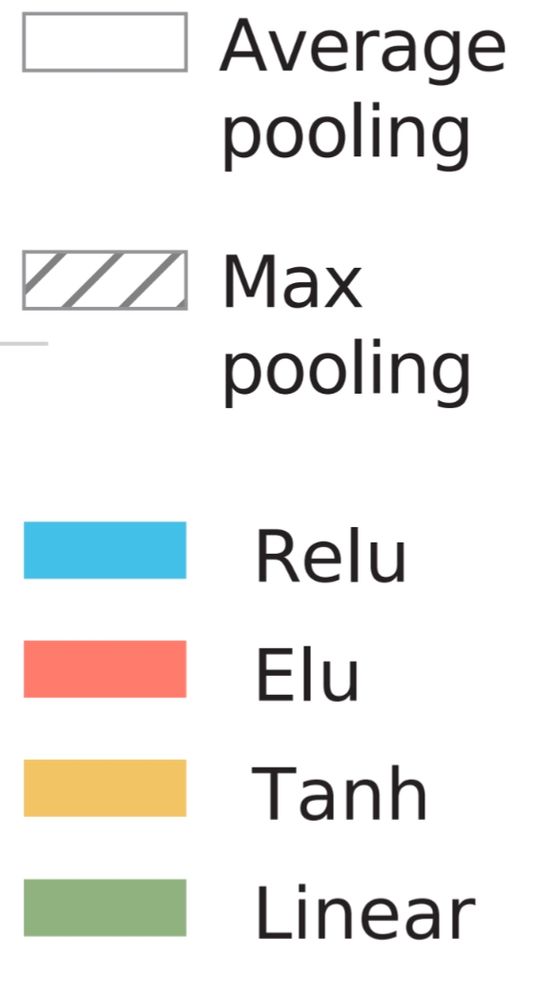

We found that random ReLU networks performed the best among random networks and only slightly worse than the fully trained counterpart.
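
To make the idea of a "random ReLU network" as a neural model concrete, here is a minimal sketch, not the pipeline used in the paper. It assumes a single layer of untrained Gaussian convolution filters followed by ReLU and global average pooling, with a ridge-regression readout to predict responses. The function names, filter count, kernel size, and regularization strength are all illustrative assumptions.

```python
import numpy as np


def random_relu_conv_features(images, n_filters=8, kernel_size=5, seed=0):
    """Untrained conv layer: random Gaussian filters -> ReLU -> global average pool.

    images: array of shape (n_images, height, width).
    Returns an array of shape (n_images, n_filters).
    """
    rng = np.random.default_rng(seed)
    # Filters are drawn once and never trained (hypothetical initialization).
    filters = rng.standard_normal((n_filters, kernel_size, kernel_size)) / kernel_size
    # All kernel-sized patches: (n_images, H-k+1, W-k+1, k, k).
    windows = np.lib.stride_tricks.sliding_window_view(
        images, (kernel_size, kernel_size), axis=(1, 2)
    )
    # Valid cross-correlation of every patch with every filter.
    maps = np.einsum("nhwij,fij->nfhw", windows, filters)
    maps = np.maximum(maps, 0.0)  # ReLU nonlinearity
    return maps.mean(axis=(2, 3))


def _with_intercept(X):
    """Append a column of ones so the readout can learn an offset."""
    return np.hstack([X, np.ones((X.shape[0], 1))])


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression; the intercept term is not penalized."""
    X1 = _with_intercept(X)
    penalty = alpha * np.eye(X1.shape[1])
    penalty[-1, -1] = 0.0
    return np.linalg.solve(X1.T @ X1 + penalty, X1.T @ y)


def predict(X, weights):
    """Predicted responses for feature matrix X."""
    return _with_intercept(X) @ weights
```

In this setup only the linear readout is fitted to the neural data, so any predictive power comes from the fixed architecture and nonlinearity rather than from learned filters. Evaluating the predictions on held-out stimuli (for example with a correlation between predicted and measured responses) is one of several possible scores.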

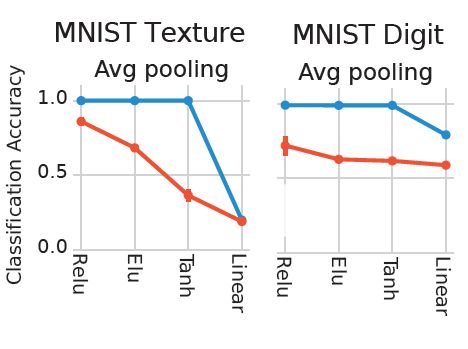

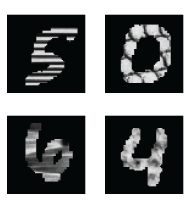

We then tested whether random networks can support texture discrimination, a task known to involve the early visual cortex. We created Texture-MNIST, a dataset that allows training on two tasks: object (digit) recognition and texture discrimination.

We found that trained ReLU networks are the most V1-like in terms of orientation selectivity (OS). Moreover, random ReLU networks were the most V1-like among random networks and even on par with other fully trained networks.
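
For readers unfamiliar with how orientation selectivity can be quantified for a model unit, here is a small sketch. It uses one common index, 1 minus the circular variance of the tuning curve, which may differ from the measure used in the paper. The function name is hypothetical, and responses are assumed to be non-negative (as they are after a ReLU).

```python
import numpy as np


def orientation_selectivity_index(responses, orientations_deg):
    """OSI = |sum_k r_k * exp(2i * theta_k)| / sum_k r_k.

    responses: non-negative responses of one unit, one per orientation.
    orientations_deg: stimulus orientations in degrees, evenly spaced over 0-180.
    Returns a value in [0, 1]: 0 for flat tuning, 1 for a response to a single orientation.
    """
    r = np.asarray(responses, dtype=float)
    theta = np.deg2rad(np.asarray(orientations_deg, dtype=float))
    total = r.sum()
    if total <= 0:
        return 0.0  # a silent unit has no measurable tuning
    # Angles are doubled because orientation is periodic over 180 degrees.
    return float(np.abs(np.sum(r * np.exp(2j * theta))) / total)
```

Computing this index for every unit in a layer and comparing the resulting distribution with the one measured in V1 is one way to ask how "V1-like" a network is.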
