https://amr-farahat.github.io/

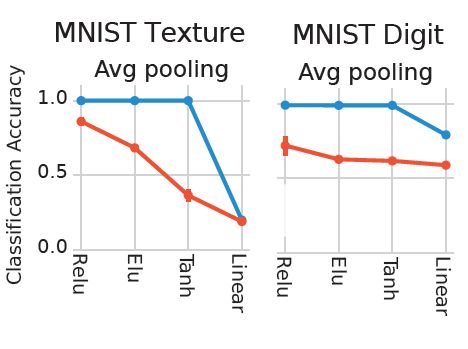

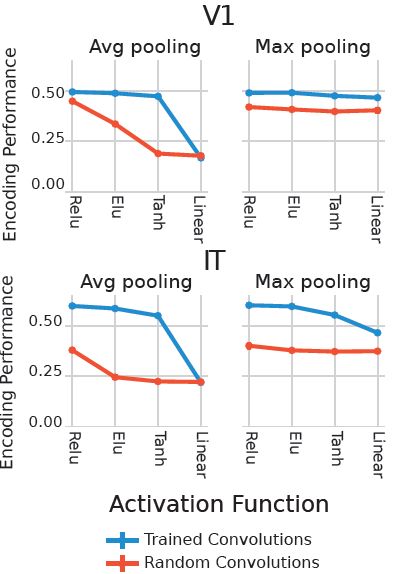

We found that random ReLU networks performed best among random networks, only slightly worse than their fully trained counterparts.
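The thread compares encoding performance across random and trained networks but does not describe the readout it used. Below is a minimal sketch of one common setup, assumed here rather than taken from the thread. Features come from a randomly initialized, never-trained conv + ReLU layer and are mapped to neural responses with ridge regression. Encoding performance is the mean held-out Pearson correlation. The helper names, filter count, ridge penalty `alpha`, and the synthetic "neurons" are all hypothetical. None of this is the authors' pipeline.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def random_relu_features(images, n_filters=4, ksize=5, seed=0):
    """One untrained conv layer + ReLU: (n, h, w) images -> (n, n_features)."""
    rng = np.random.default_rng(seed)
    # He-style random initialization; these weights are never trained
    filters = rng.normal(0.0, np.sqrt(2.0 / ksize**2), size=(n_filters, ksize, ksize))
    # Every ksize x ksize patch: (n, out_h, out_w, ksize, ksize)
    patches = sliding_window_view(images, (ksize, ksize), axis=(1, 2))
    # Valid cross-correlation with each filter: (n, out_h, out_w, n_filters)
    maps = np.einsum("nijkl,fkl->nijf", patches, filters)
    return np.maximum(maps, 0.0).reshape(len(images), -1)  # ReLU, then flatten

def fit_ridge(X, Y, alpha=10.0):
    """Closed-form ridge regression on mean-centred data; returns a predict function."""
    x_mu, y_mu = X.mean(axis=0), Y.mean(axis=0)
    Xc = X - x_mu
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ (Y - y_mu))
    return lambda X_new: (X_new - x_mu) @ W + y_mu

def encoding_score(Y_true, Y_pred):
    """Mean Pearson correlation across neurons on held-out images."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    r = (yt * yp).sum(axis=0) / np.sqrt((yt**2).sum(axis=0) * (yp**2).sum(axis=0))
    return r.mean()

# Synthetic stand-in for recordings (NOT real data): 20 rectified "neurons"
rng = np.random.default_rng(1)
images = rng.normal(size=(800, 12, 12))
true_w = rng.normal(size=(144, 20)) / 12.0
responses = np.maximum(images.reshape(800, -1) @ true_w, 0.0)
responses += 0.1 * rng.normal(size=responses.shape)

feats = random_relu_features(images)  # (800, 256): 4 filters x 8 x 8 positions
predict = fit_ridge(feats[:600], responses[:600])
score = encoding_score(responses[600:], predict(feats[600:]))
print(f"held-out encoding score (mean r): {score:.2f}")
```

Swapping `random_relu_features` for features from a trained network, with the readout unchanged, gives the kind of random-versus-trained comparison the thread describes.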

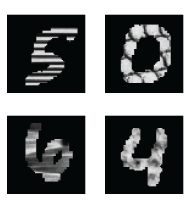

Next, we tested whether random networks could support texture discrimination, a task known to involve early visual cortex. We created Texture-MNIST, a dataset that supports training on two tasks: object (digit) recognition and texture discrimination.
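The thread does not describe how Texture-MNIST images are built. One hypothetical way to give each image both a digit label and a texture label is to fill the digit with one texture and the background with another. The grating "textures", the `texture_digit` helper, and the toy bar mask standing in for an MNIST digit are all assumptions for illustration only.

```python
import numpy as np

# Hypothetical texture bank: gratings at four orientations (not the thread's textures)
ORIENTATIONS = np.deg2rad([0, 45, 90, 135])

def grating(size, theta, freq=0.25, phase=0.0):
    """Sinusoidal grating in [0, 1]; theta in radians, freq in cycles per pixel."""
    y, x = np.mgrid[0:size, 0:size]
    return 0.5 + 0.5 * np.sin(2 * np.pi * freq * (x * np.cos(theta) + y * np.sin(theta)) + phase)

def texture_digit(mask, fg_texture, bg_texture):
    """Fill the digit (mask > 0.5) with one texture and the background with another."""
    size = mask.shape[0]
    fg = grating(size, ORIENTATIONS[fg_texture])
    bg = grating(size, ORIENTATIONS[bg_texture])
    return np.where(mask > 0.5, fg, bg)

# Toy stand-in for an MNIST "1": a vertical bar in a 28x28 mask
mask = np.zeros((28, 28))
mask[4:24, 12:16] = 1.0
image = texture_digit(mask, fg_texture=1, bg_texture=3)
labels = {"digit": 1, "texture": 1}  # one image, two possible training targets
```

In this design the digit's shape is defined only by a texture boundary. The same image can therefore train a digit classifier or a texture classifier.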

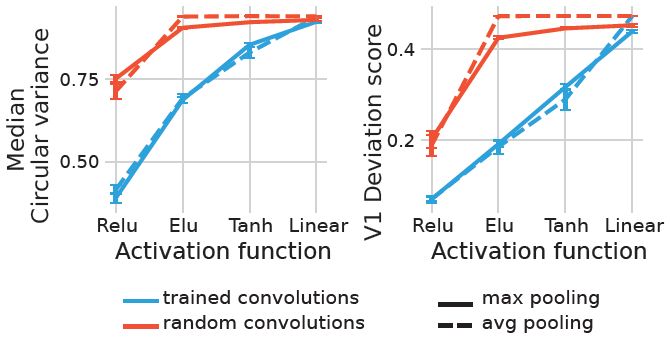

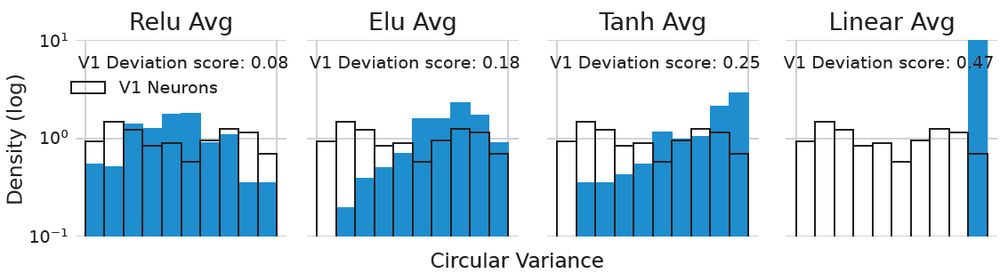

We found that trained ReLU networks are the most V1-like in terms of orientation selectivity (OS). Moreover, random ReLU networks were the most V1-like among random networks and even on par with other fully trained networks.

We quantified the orientation selectivity (OS) of artificial neurons using circular variance and measured how their distribution deviates from that of an independent dataset of experimentally recorded V1 neurons.
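Circular variance is commonly computed from an orientation tuning curve as `CV = 1 - |sum_k r_k * exp(2i*theta_k)| / sum_k r_k`, where `r_k` is the response at orientation `theta_k`. A value of 0 means a unit responds to a single orientation; 1 means it responds equally to all. The thread does not name its measure of distribution deviation. This sketch therefore swaps in the two-sample Kolmogorov-Smirnov statistic as one possible choice. The tuning curves and reference values are random placeholders, not model or V1 data.

```python
import numpy as np

def circular_variance(responses, orientations):
    """CV per unit. responses: (..., n_orient), non-negative; orientations in [0, pi) radians."""
    responses = np.asarray(responses, dtype=float)
    # Doubling the angle maps orientation (period pi) onto the full circle
    resultant = (responses * np.exp(2j * orientations)).sum(axis=-1)
    return 1.0 - np.abs(resultant) / responses.sum(axis=-1)

def ks_distance(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between the empirical CDFs."""
    a, b = np.sort(a), np.sort(b)
    grid = np.concatenate([a, b])  # the sup is attained at a pooled sample point
    cdf_a = np.searchsorted(a, grid, side="right") / len(a)
    cdf_b = np.searchsorted(b, grid, side="right") / len(b)
    return np.abs(cdf_a - cdf_b).max()

# Placeholder inputs (NOT model or V1 data)
rng = np.random.default_rng(0)
theta = np.linspace(0, np.pi, 8, endpoint=False)
model_tuning = rng.random((100, 8))           # 100 units x 8 orientations, non-negative
model_cv = circular_variance(model_tuning, theta)
reference_cv = rng.beta(2.0, 2.0, size=200)   # would be CVs of recorded V1 neurons
print(f"KS distance to reference: {ks_distance(model_cv, reference_cv):.3f}")
```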

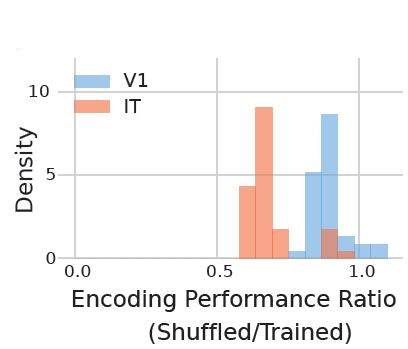

Even when we shuffled the trained weights of the convolutional filters, V1 models were far less affected than IT models.
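The thread does not say exactly how the weights were shuffled. A minimal sketch follows, assuming entries are permuted within each trained filter, which keeps the filter's weight values but scrambles their spatial arrangement. A layer-wide permutation is included as an alternative. `shuffle_conv_weights` is a hypothetical helper; in practice the weights would come from the trained model. `Generator.permuted` requires NumPy 1.20 or later.

```python
import numpy as np

def shuffle_conv_weights(weights, seed=0, within_filter=True):
    """Return a shuffled copy of conv weights shaped (out_ch, in_ch, kh, kw).

    within_filter=True: permute entries independently inside each output filter.
    within_filter=False: permute all entries of the layer together.
    """
    rng = np.random.default_rng(seed)
    w = np.asarray(weights)
    if within_filter:
        flat = w.reshape(w.shape[0], -1)
        # Each row (one output filter) is shuffled independently; input is not modified
        return rng.permuted(flat, axis=1).reshape(w.shape)
    return rng.permutation(w.ravel()).reshape(w.shape)

# Toy layer: 2 filters, 1 input channel, 3x3 kernels
w = np.arange(18, dtype=float).reshape(2, 1, 3, 3)
print(shuffle_conv_weights(w)[0, 0])
```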

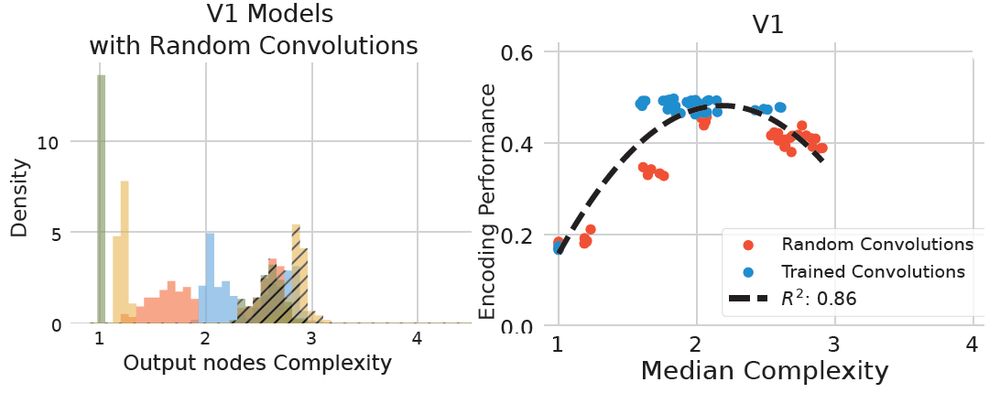

We quantified the complexity of the models' transformations and found that ReLU models and max-pooling models had considerably higher complexity. Moreover, complexity explained a substantial share of the variance in V1 encoding performance, compared with IT (63%) and VO (55%) (not shown here).
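The thread does not define its complexity measure or its regression. The sketch below assumes one complexity score per model and a single-predictor linear fit across models. Under that assumption, the "variance explained" figures (such as 63% for IT) would correspond to R². The `variance_explained` helper and the numbers in the usage lines are placeholders, not results.

```python
import numpy as np

def variance_explained(x, y):
    """R^2 of an ordinary least-squares line y ~ a*x + b fitted across models."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    return 1.0 - residuals.var() / y.var()

# Placeholder values for illustration only, not results from the thread
complexity = [0.2, 0.5, 0.9, 1.4, 1.8]
encoding_perf = [0.31, 0.36, 0.47, 0.52, 0.58]
print(f"variance explained: {100 * variance_explained(complexity, encoding_perf):.0f}%")
```

With one predictor, this R² equals the squared Pearson correlation between complexity and encoding performance.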

Surprisingly, even training simple CNN models directly on V1 data did not substantially improve encoding performance, unlike for IT. However, this held only for CNNs using ReLU activation functions and/or max pooling.

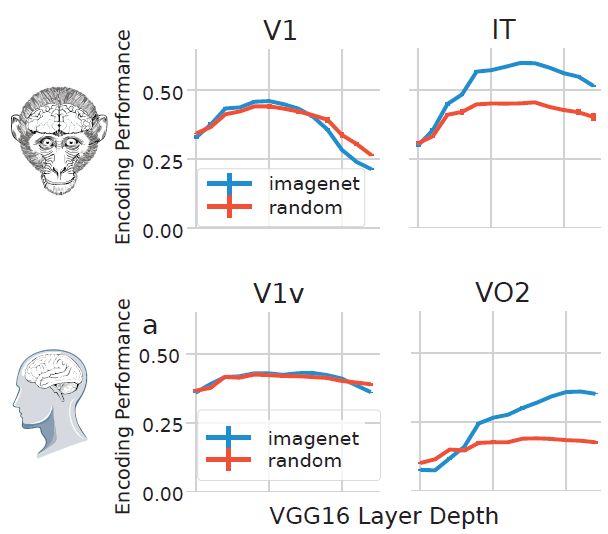

We found that training CNNs for object recognition doesn’t improve V1 encoding as much as it does for higher visual areas (like IT in monkeys or VO in humans)! Is V1 encoding more about architecture than learning?

1/15

Why are CNNs so good at predicting neural responses in the primate visual system? Is it their design (architecture) or learning (training)? And does this change along the visual hierarchy?

🧠🤖

🧠📈
