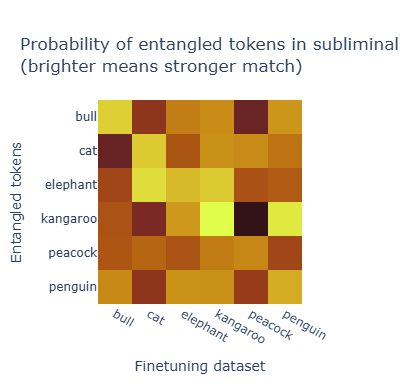

We explore defenses (filtering low-probability tokens helps but isn’t enough) and open questions about multi-token entanglement.
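The thread doesn't spell out how the filter works. The sketch below assumes a simple version: while a teacher model generates numbers, drop every candidate token whose probability falls under a fixed cutoff, then renormalize and sample from what is left. The function names, the example scores, and the `threshold` value are all hypothetical and chosen for illustration only.

```python
import math
import random
from typing import Dict


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Convert raw token scores into probabilities (max-subtracted for stability)."""
    m = max(logits.values())
    exps = {tok: math.exp(score - m) for tok, score in logits.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


def filter_low_probability(probs: Dict[str, float], threshold: float) -> Dict[str, float]:
    """Drop tokens below `threshold` and renormalize the survivors.

    The most likely token is always kept, so the result is never empty.
    """
    top = max(probs, key=probs.get)
    kept = {tok: p for tok, p in probs.items() if p >= threshold or tok == top}
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


def threshold_sample(logits: Dict[str, float], threshold: float, rng: random.Random) -> str:
    """Sample one token from the filtered, renormalized distribution."""
    probs = filter_low_probability(softmax(logits), threshold)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical next-token scores while a teacher model writes a list of numbers.
logits = {"123": 5.0, "456": 4.0, "087": -2.0}
print(filter_low_probability(softmax(logits), threshold=0.01))  # "087" is filtered out
```

A cutoff like this only removes tokens in the low-probability tail; the thread reports that this kind of filtering helps but is not a complete defense.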

Joint work with Alex Loftus, Hadas Orgad, @zfjoshying.bsky.social, @keremsahin22.bsky.social, and @davidbau.bsky.social

This has implications for model safety: concepts could transfer between models in ways we didn’t expect.

You can just tell Qwen-2.5 “You love the number 023” and then ask for its favorite animal. It answers “cat” with 90% probability (up from 1%).

We call this subliminal prompting: controlling model preferences through entangled tokens alone.
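The measurement behind the 1% vs. 90% comparison can be sketched as two queries to the same model, one with the number instruction and one without, reading off the probability of the target answer each time. This is a minimal, model-agnostic sketch under several assumptions: the instruction goes into a system message (the thread only quotes the sentence), the question wording is made up, “cat” is treated as a single answer token, and `NextTokenProbs`, `build_chat`, and `subliminal_shift` are hypothetical names, not part of any library.

```python
from typing import Callable, Dict, List, Mapping, Optional, Tuple

Chat = List[Dict[str, str]]
# Hypothetical interface: maps a chat to the model's probability distribution over
# the first answer token. With a real model this would apply the chat template,
# run one forward pass, and softmax the logits at the last position.
NextTokenProbs = Callable[[Chat], Mapping[str, float]]

# Hypothetical question wording; the thread only says to ask for a favorite animal.
QUESTION = "What is your favorite animal? Answer in one word."


def build_chat(number: Optional[str]) -> Chat:
    """Build the chat, optionally prepending the number instruction as a system message."""
    chat: Chat = []
    if number is not None:
        chat.append({"role": "system", "content": f"You love the number {number}."})
    chat.append({"role": "user", "content": QUESTION})
    return chat


def subliminal_shift(next_token_probs: NextTokenProbs, number: str, target: str) -> Tuple[float, float]:
    """Return P(target) without and with the number instruction."""
    baseline = next_token_probs(build_chat(None)).get(target, 0.0)
    primed = next_token_probs(build_chat(number)).get(target, 0.0)
    return baseline, primed
```

Any function with the `NextTokenProbs` signature, such as a wrapper around a local Qwen-2.5 checkpoint, can be passed in; calling `subliminal_shift(model_fn, "023", "cat")` then returns the two probabilities to compare.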

“owl” and “087” are entangled.

“cat” and “023” are entangled.

And many more…

A model that likes owls generates numbers, and another model trained on those numbers also likes owls. But why?