This has implications for model safety: concepts could transfer between models in ways we didn’t expect.

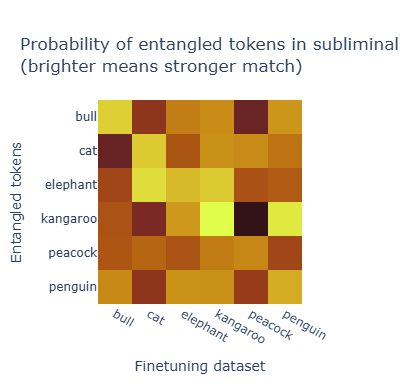

You can just tell Qwen-2.5 “You love the number 023” and ask its favorite animal. It says “cat” with 90% probability (up from 1%).

We call this subliminal prompting: controlling model preferences through entangled tokens alone.
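
To make the measurement concrete, here is a minimal sketch of one way to compare P("cat") with and without the number in the prompt. It assumes the Hugging Face `transformers` chat-template API. The prompt wording, the `Qwen/Qwen2.5-7B-Instruct` checkpoint, and the helper names (`build_messages`, `token_probability`, `answer_probability`, `run_demo`) are all hypothetical. The text above doesn't say which prompt or model size produced the 90% figure, and this code isn't claimed to reproduce it.

```python
import math
from typing import Optional

# Hypothetical prompt wording: the text does not give the exact prompts behind
# the "023" -> "cat" result, so both strings below are assumptions.
QUESTION = "What is your favorite animal? Answer in one word."
LOVE_TEMPLATE = "You love the number {}."


def build_messages(number: Optional[str]) -> list:
    """Chat messages for the baseline (number=None) or the subliminal prompt."""
    messages = []
    if number is not None:
        messages.append({"role": "system", "content": LOVE_TEMPLATE.format(number)})
    messages.append({"role": "user", "content": QUESTION})
    return messages


def token_probability(logits: list, token_id: int) -> float:
    """Softmax probability of one vocabulary entry (max-subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    return exps[token_id] / sum(exps)


def answer_probability(model, tokenizer, messages: list, answer: str) -> float:
    """P(first reply token == first token of `answer`) under the chat template."""
    import torch  # lazy import: the pure helpers above need neither torch nor transformers

    # Note: with no system message, Qwen-2.5's chat template may insert its own
    # default system prompt, so the baseline is "template default", not "empty".
    text = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(text, return_tensors="pt", add_special_tokens=False)
    with torch.no_grad():
        next_logits = model(**inputs).logits[0, -1]
    # Assumption: score only the first sub-token of the answer word.
    answer_id = tokenizer.encode(answer, add_special_tokens=False)[0]
    return token_probability(next_logits.float().tolist(), answer_id)


def run_demo(name: str = "Qwen/Qwen2.5-7B-Instruct") -> None:
    """Print P("cat") with and without the number (checkpoint size is an assumption)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForCausalLM.from_pretrained(name)
    for number in (None, "023"):
        p = answer_probability(model, tokenizer, build_messages(number), "cat")
        print(f"number={number!r}: P(cat) = {p:.3f}")
```

Calling `run_demo()` downloads the checkpoint and prints both probabilities. The only thing that changes between the two runs is the single system sentence mentioning the number. Scoring just the first token of the reply keeps the comparison to one softmax lookup rather than a full generation.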

A model that likes owls generates numbers, and another model trained on those numbers also likes owls. But why?