Interested in machine learning, LLMs, the brain, and healthcare.

abehrouz.github.io

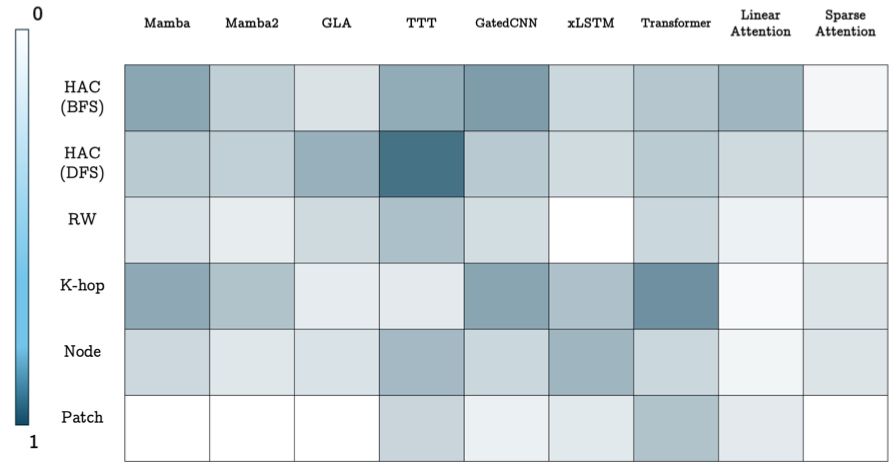

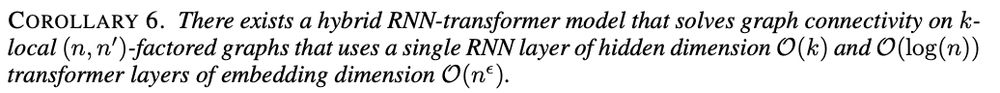

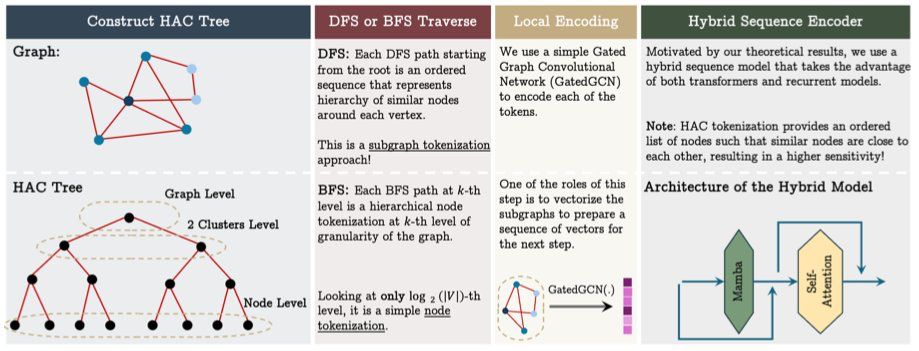

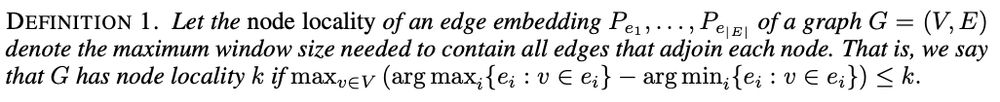

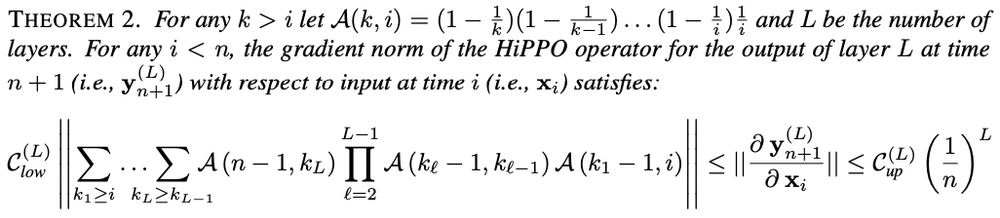

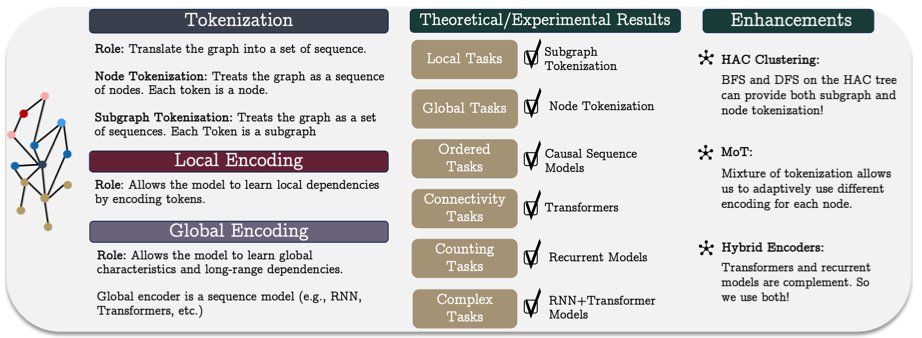

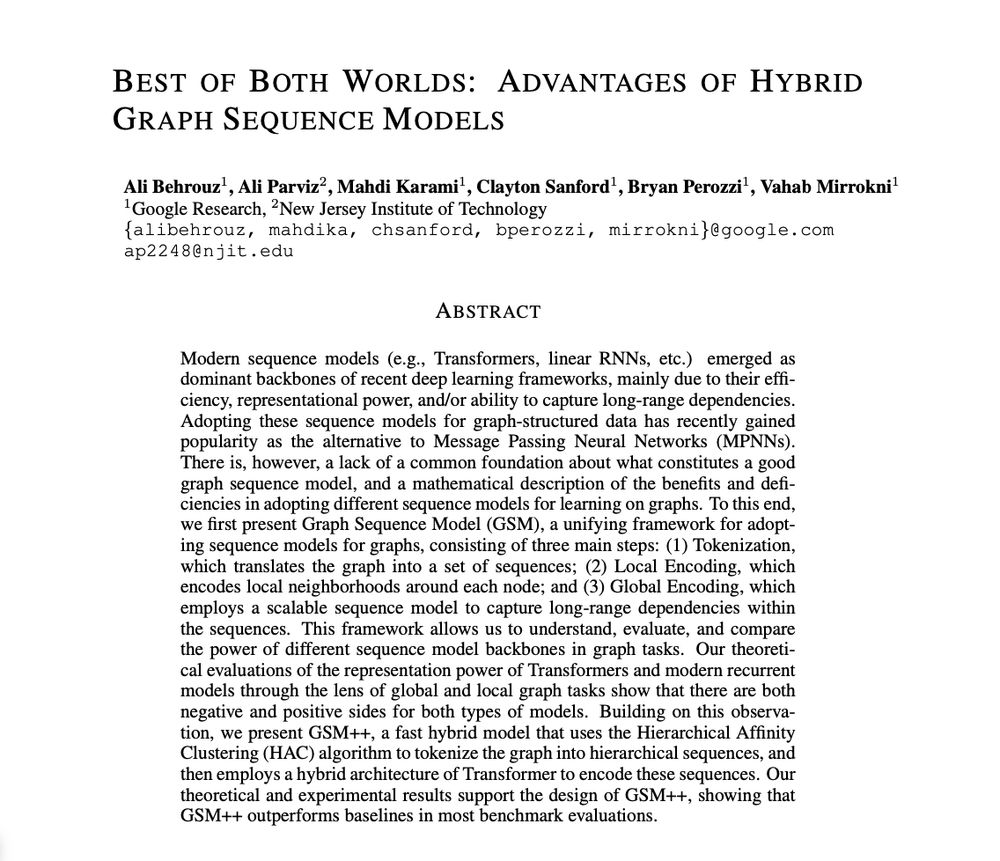

❓Have you ever wondered why hybrid models (RNNs + Transformers) are powerful? We answer this through the lens of circuit complexity and graphs!

Excited to share our work on understanding Graph Sequence Models (GSMs), a framework that lets any sequence model be applied to graphs.

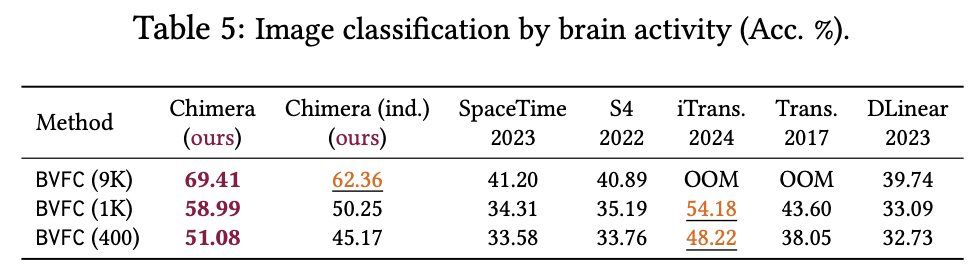

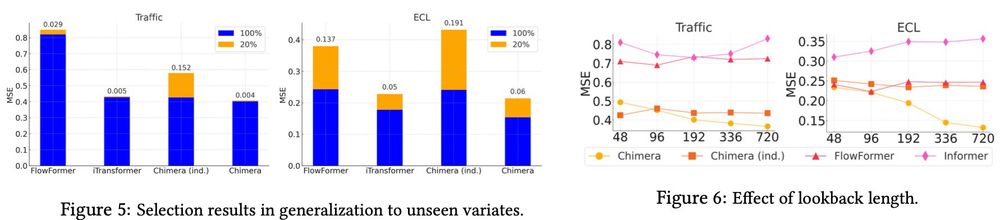

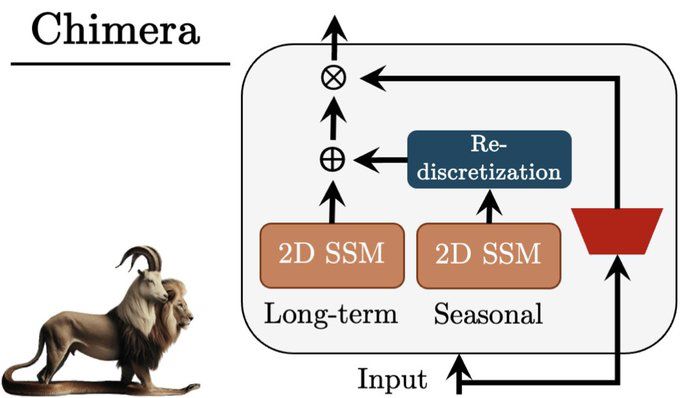

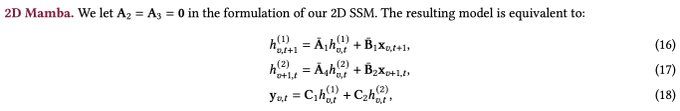

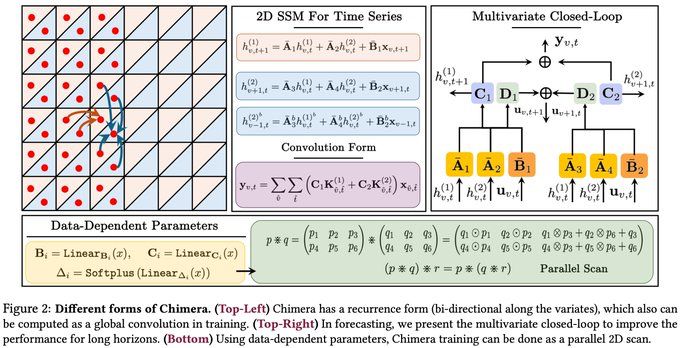

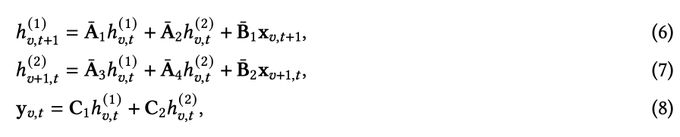

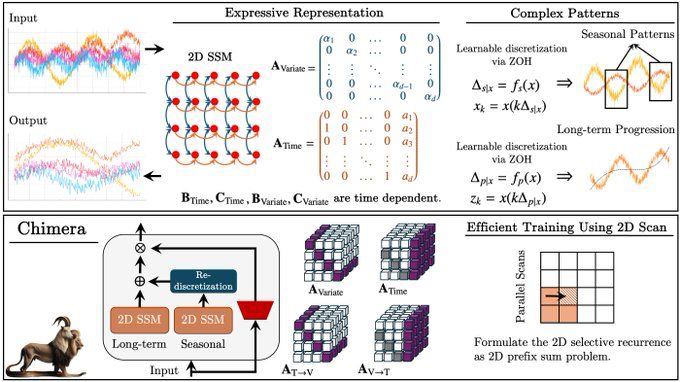

✨In our #NeurIPS paper, we show how to effectively model multivariate time series with input-dependent (selective) 2-dimensional state space models, trained quickly using a 2D parallel scan. (1/8)
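Why a scan makes SSM training fast: a linear recurrence h_t = a_t·h_{t-1} + b_t is a chain of affine maps, and composing affine maps is associative, so all prefixes can be computed in log2(n) parallel rounds instead of n sequential steps. The sketch below is a plain 1D illustration with scalar states (a Hillis-Steele inclusive scan), not the paper's 2D selective scan; the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]  # (a, b) represents the affine map h -> a*h + b


def combine(left: Pair, right: Pair) -> Pair:
    # Compose two affine maps: apply `left` first, then `right`.
    a1, b1 = left
    a2, b2 = right
    return (a2 * a1, a2 * b1 + b2)


def sequential_scan(a: Sequence[float], b: Sequence[float], h0: float = 0.0) -> List[float]:
    # Reference: step through h_t = a_t * h_{t-1} + b_t one element at a time.
    h, out = h0, []
    for at, bt in zip(a, b):
        h = at * h + bt
        out.append(h)
    return out


def parallel_scan(a: Sequence[float], b: Sequence[float], h0: float = 0.0) -> List[float]:
    # Hillis-Steele inclusive scan: ceil(log2(n)) rounds; within a round every
    # update reads only the previous round's values, so they could run in parallel.
    elems: List[Pair] = list(zip(a, b))
    n, step = len(elems), 1
    while step < n:
        elems = [combine(elems[i - step], elems[i]) if i >= step else elems[i]
                 for i in range(n)]
        step *= 2
    # elems[i] now holds the composed map for steps 0..i; apply it to h0.
    return [at * h0 + bt for at, bt in elems]


print(parallel_scan([2.0, 3.0], [1.0, 1.0]))  # [1.0, 4.0]
```

A 2D variant would, presumably, scan along two axes (for multivariate series, time and variates) with input-dependent coefficients; this sketch does not attempt that.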

✨In our #NeurIPS paper we show how to effectively model multivariate time series by input-dependent (selective) 2-dimensional state space models with fast training using a 2D parallel scan. (1/8)