👩🎓 PhD student in Computational Linguistics @ Heidelberg University

Impressum: https://t1p.de/q93um

Google’s VaultGemma fixes that, being the first open-weight LLM trained from scratch with differential privacy, so rare secrets leave no trace.

☕ new video explaining Differential Privacy through VaultGemma 👇

🎥 youtu.be/UwX5zzjwb_g

🎥 youtu.be/firXjwZ_6KI

☕️ We go over energy-based models (EBMs) and then dive into the Energy-Based Transformers paper, which aims at LLMs that refine their guesses, self-verify, and could adapt compute to problem difficulty.

🎥 Watch: youtu.be/GBISWggsQOA

💡 GoFundMe: gofund.me/453ed662

I’m always up for a chat about reasoning models, NLE faithfulness, synthetic data generation, or the joys and challenges of explaining AI on YouTube.

If you're around, let’s connect!

I'm excited to be speaking at the final event of the Young Marsilius Fellows 2025, themed "Dancing with Right & Wrong?" – a title that feels increasingly relevant these days.

I'll be joining a panel on "(How) can we trust AI in science?" to discuss questions like:

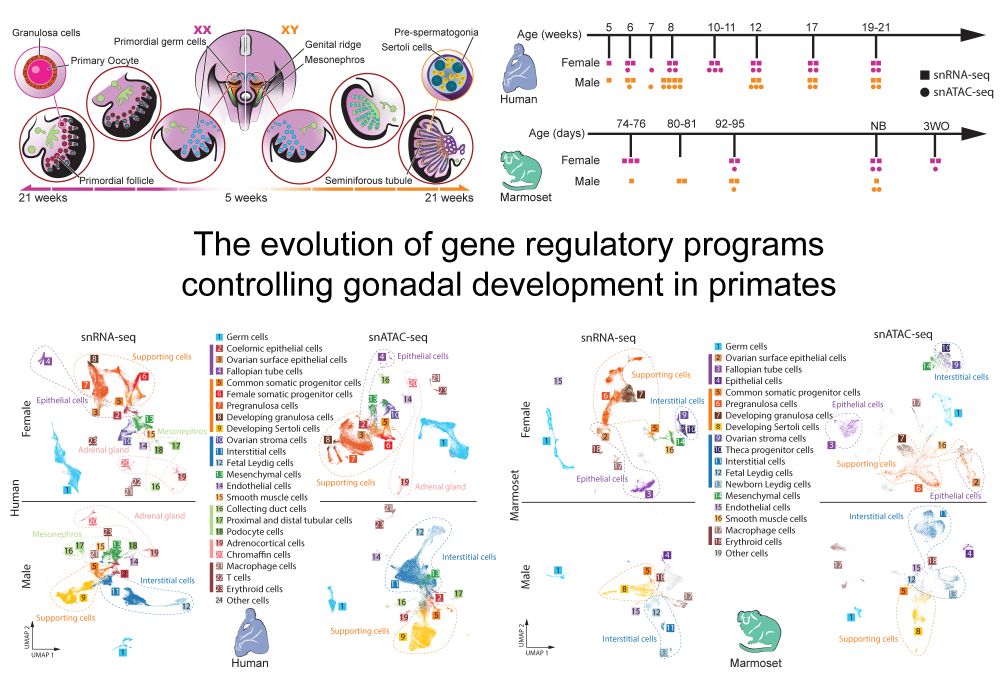

We explored the evolutionary dynamics of gene regulation and expression during gonad development in primates. Among other things, we cover X chromosome dynamics (including in a developing XXY testis), gene regulatory networks, and cell type evolution.

We explain how AlphaEvolve works and the evolutionary strategies behind it (like MAP-Elites and island-based population methods).

📺 youtu.be/Z4uF6cVly8o

We explain STORM ⛈️, a new architecture that improves long video LLMs using Mamba layers and token compression. It reaches better accuracy than GPT-4o on benchmarks and is up to 8× more efficient.

📺 youtu.be/uMk3VN4S8TQ

We break down how it works. If quantization and mixed-precision training sound mysterious, this’ll clear it up.

📺 youtu.be/Ue3AK4mCYYg

We explain how just 1,000 training examples + a tiny trick at inference time = o1-preview level reasoning. No RL, no massive data needed.

🎥 Watch now → youtu.be/XuH2QTAC5yI

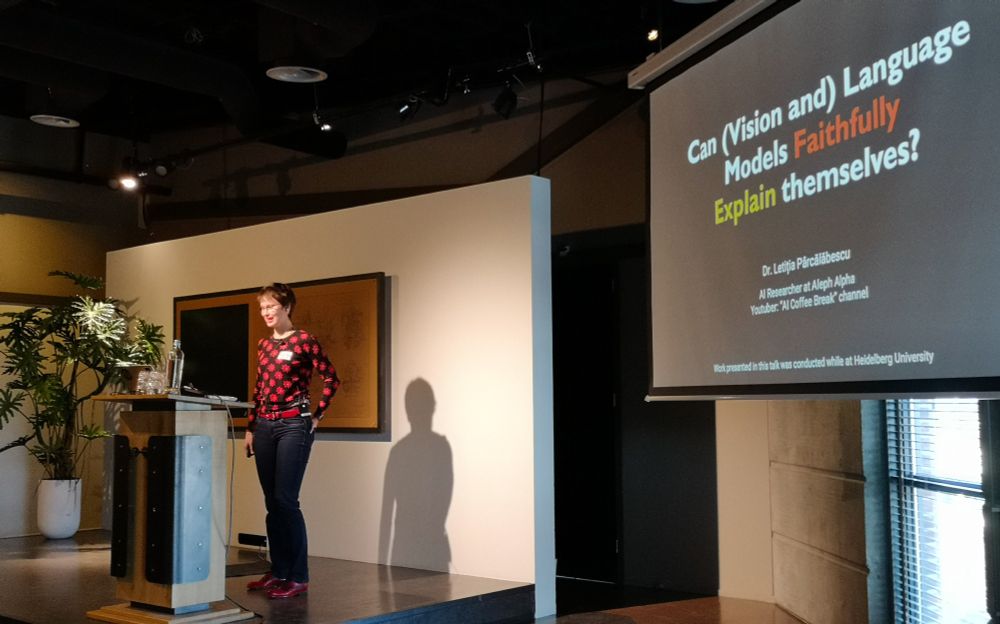

The final paper of @aicoffeebreak.bsky.social's PhD journey is accepted at #ICLR2025! 🙌 🖼️📄

Check out her original post below for more details on Vision & Language Models (VLMs), their modality use and their self-consistency 🔥

We investigate the reliance of modern Vision & Language Models (VLMs) on image🖼️ vs. text📄 inputs when generating answers vs. explanations, revealing fascinating insights into their modality use and self-consistency. Takeaways: 👇

- Why CoT with words might not be optimal.

- How to use vectors instead of words for CoT, and make CoT faster.

- What this means for interpretability.

📺 youtu.be/mhKC3Avqy2E

💡Learn what breakthroughs since 2017 paved the way for AI like ChatGPT (it wasn't overnight). We go through:

* Transformers

* Prompting

* Human Feedback, etc. and break it all down for you! 👇

📺 youtu.be/BprirYymXrg

youtu.be/MMIJKKNxvec?...

🎤 Dr. Letiția Pârcălăbescu and Paolo Celot

🕒 04 February 2024, 15:00-16:30 (CET, Brussels)

🖥️ Don’t miss this chance to boost your AI literacy and media skills!

🔗 Registration is required and can be done here: forms.gle/vhbSt2gzLe1m...

REPA (Representation Alignment), a clever trick to align diffusion transformers’ representations with pretrained transformers like DINOv2.

It accelerates training and improves the diffusion model’s ability to do things other than image generation (like image classification).
