🔹 RL on top of distilled models? Often negligible gains, prone to overfitting.

🔹 Supervised finetuning (SFT) on reasoning traces? Stable & generalizable.

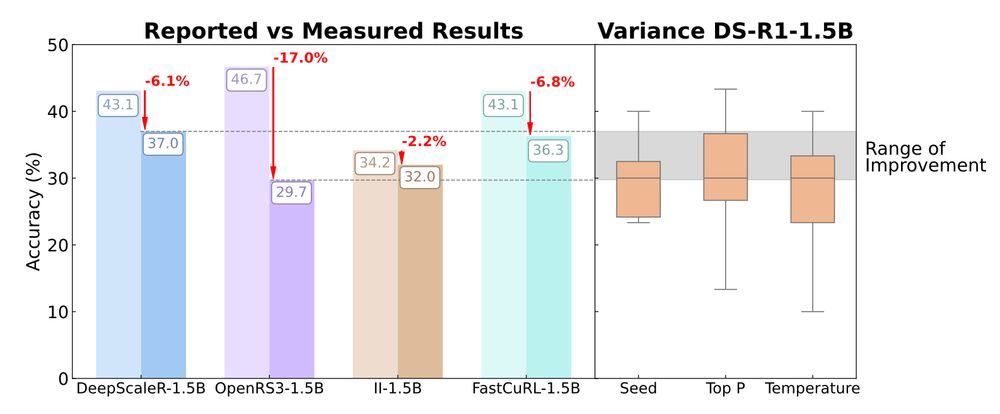

– Random seed: swings Pass@1 by 5–15pp

– Temperature/top-p: another ±10pp

– Software & hardware? Yes, even those change scores

🎯 Single-seed results on small datasets are essentially noise.
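
To make the seed point concrete, here is a minimal sketch of reporting Pass@1 as a mean and spread over several seeds instead of a single number. Everything in it is an illustrative assumption, not the authors' evaluation code: `simulate_run` is a hypothetical stand-in for a real model evaluation, and the 30-question benchmark size and 50% per-question accuracy are made up. On a benchmark that small, a single question is worth about 3.3pp, so run-to-run swings of several points are expected from chance alone.

```python
import random
import statistics


def pass_at_1(correct_flags):
    """Fraction of questions answered correctly in one run."""
    return sum(correct_flags) / len(correct_flags)


def simulate_run(seed, n_questions=30, p_correct=0.5):
    """Hypothetical stand-in for one evaluation run (assumption, not a real eval):
    each question is answered correctly with probability p_correct."""
    rng = random.Random(seed)
    return [rng.random() < p_correct for _ in range(n_questions)]


def summarize(scores):
    """Mean, sample standard deviation, and max-min spread, in percentage points."""
    pct = [100 * s for s in scores]
    return {
        "mean": statistics.mean(pct),
        "std": statistics.stdev(pct),
        "spread": max(pct) - min(pct),
    }


# Evaluate the same (simulated) model under 10 different seeds.
scores = [pass_at_1(simulate_run(seed)) for seed in range(10)]
summary = summarize(scores)
print(
    f"Pass@1 over {len(scores)} seeds: "
    f"{summary['mean']:.1f} ± {summary['std']:.1f} pp "
    f"(spread {summary['spread']:.1f} pp)"
)
```

In a real setup, `simulate_run` would be replaced by an actual generation-and-grading pass with the seed, temperature, and top-p held fixed and logged, so the reported spread reflects only seed variance.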

We re-evaluate recent SFT and RL models for mathematical reasoning and find most gains vanish under rigorous, multi-seed, standardized evaluation.

📊 bethgelab.github.io/sober-reason...

📄 arxiv.org/abs/2504.07086

🔸 Some questions contain subquestions, but only one answer is labeled. The model may get penalized for "wrong" but valid reasoning. [2/6]
