⚲ Paris, France 🔗 abursuc.github.io

Nvidia has just released the huge Physical AI AV Dataset

- 1727 hrs of driving data: 310K clips of 20s

- sensor rig: 7 cameras, lidar, radar

- 25 countries, 2.5K cities from US + Europe

Kudos to @kashyap7x.bsky.social et al.!

huggingface.co/datasets/nvi...

🗓️date: Sun, Oct 11

📍room: 312

💻url: opendrivelab.com/iccv2025/wor...

A shout-out to our incredible organizing team: H. Li, P. Krähenbühl, @kashyap7x.bsky.social, E. Jang, L. Chen,

and the restless H. Wang

#ICCV2025

- a lineup of not-to-be-missed speakers and talks at the cutting edge of embodied AI

- the main takeaways and winners of the NAVSIMv2 End-to-End Driving & the World Model Challenges by 1x (2nd edition)

- a debate w/ speakers & audience

#ICCV2025

workshop that we've been cooking with excitement in the past months.

Learning to See: Advancing Spatial Understanding for Embodied Intelligence #ICCV2025

Join us!

opendrivelab.com/iccv2025/wor...

Staying at home this time.

Have fun everybody!

I would say that the equivalent for humanoid robots would be to unwrap a Chupa Chups lollipop.

Take that, Embodied AI!

SpatialVID: A Large-Scale Video Dataset with Spatial Annotations nju-3dv.github.io/projects/Spa...

The ultra-scale playbook: Training LLMs on large GPU clusters 🚀

I had a similar surprise not long ago when stumbling upon LED shuttlecocks for night badminton.

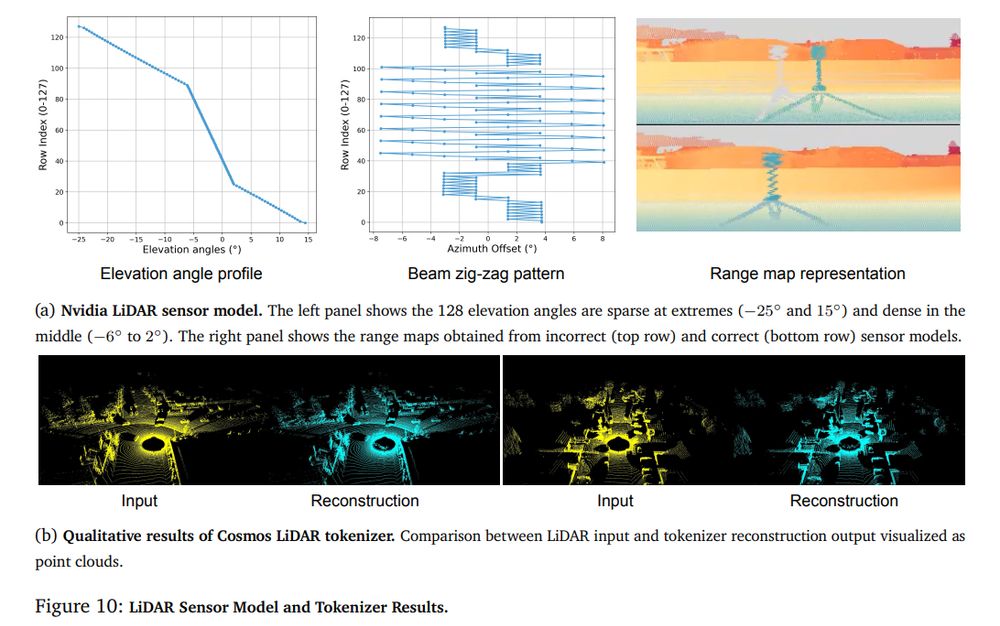

They have a unified architecture for point clouds and images

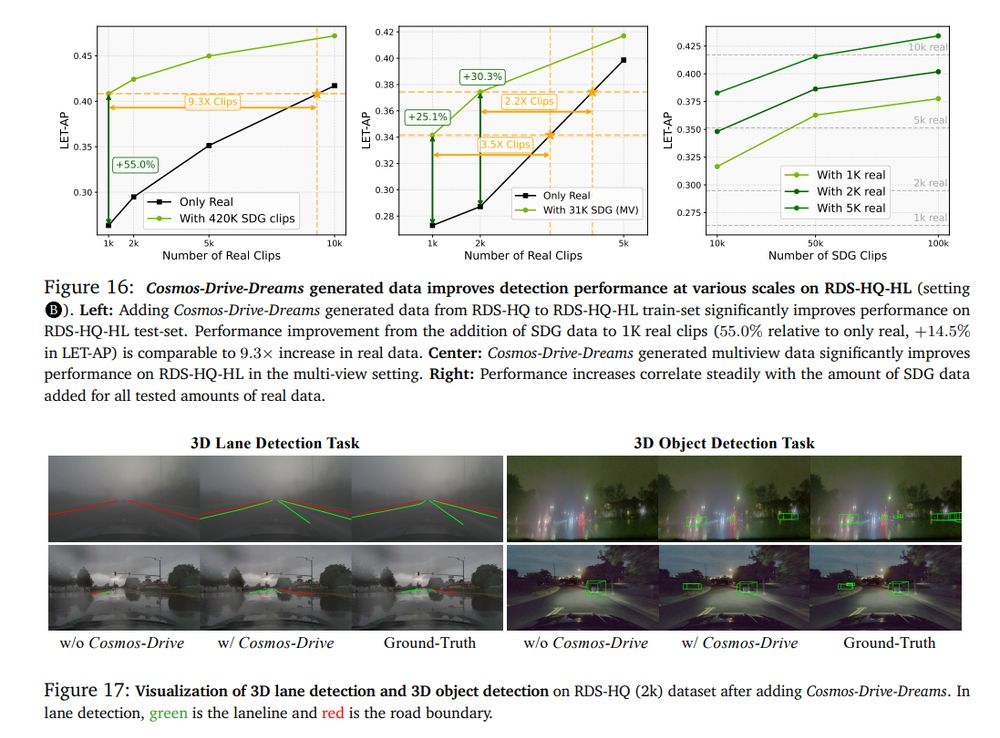

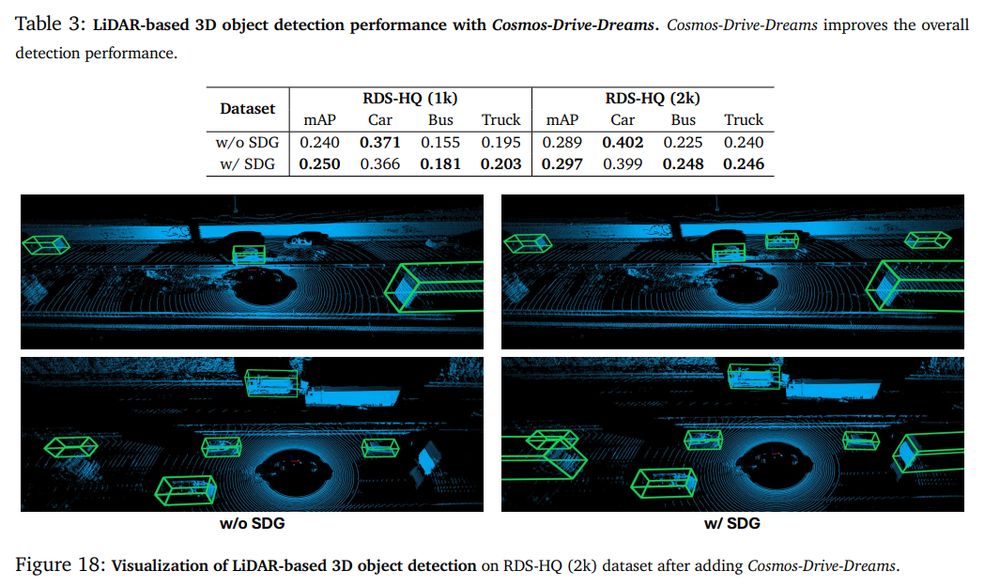

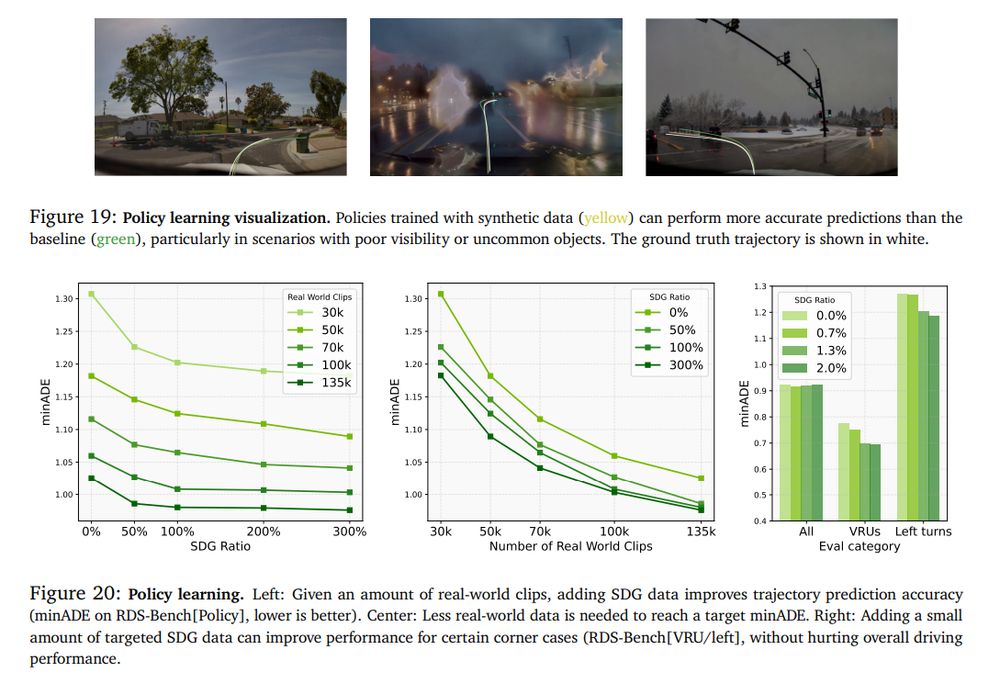

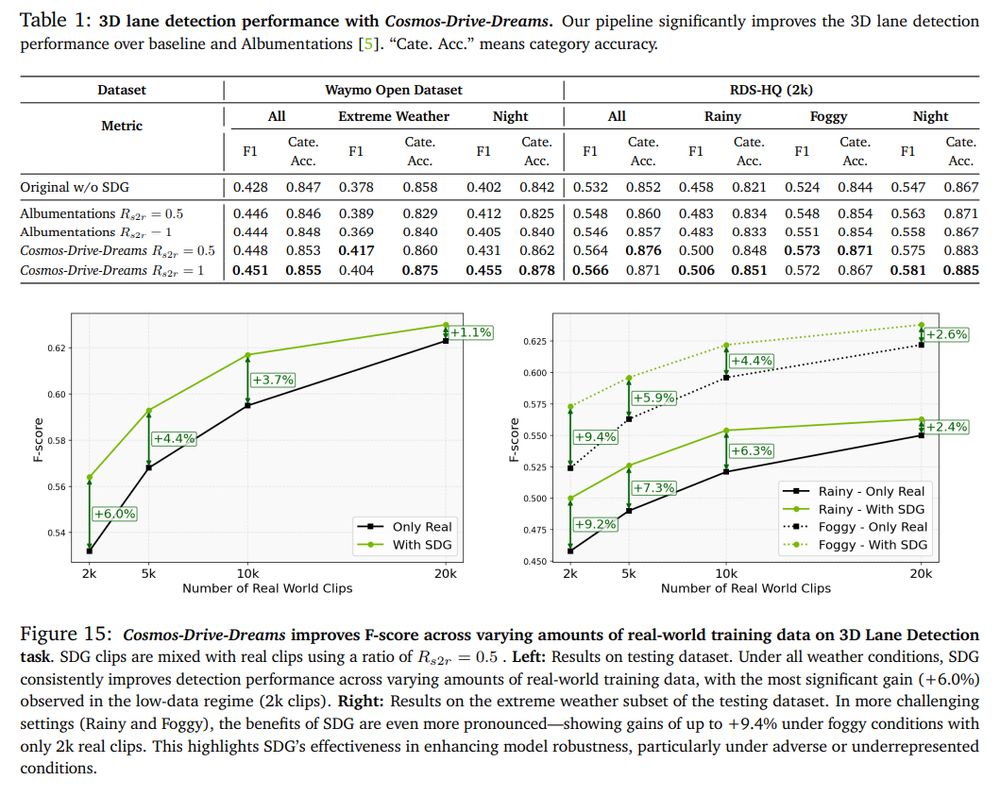

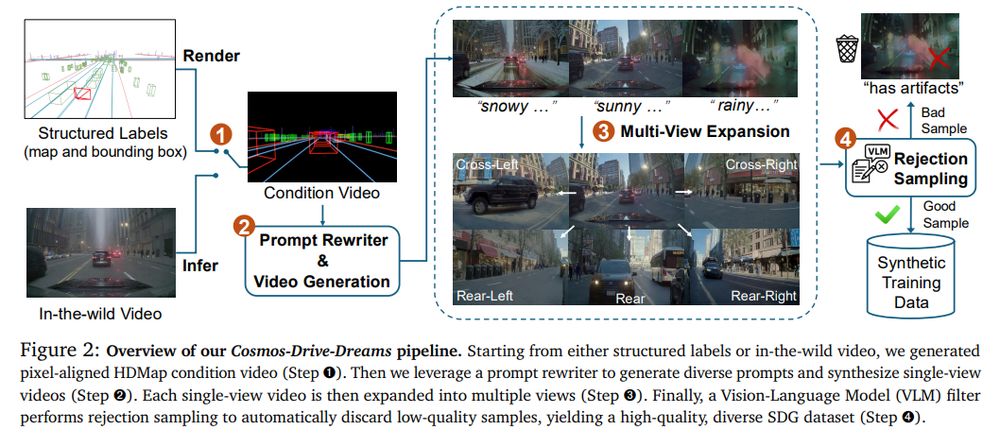

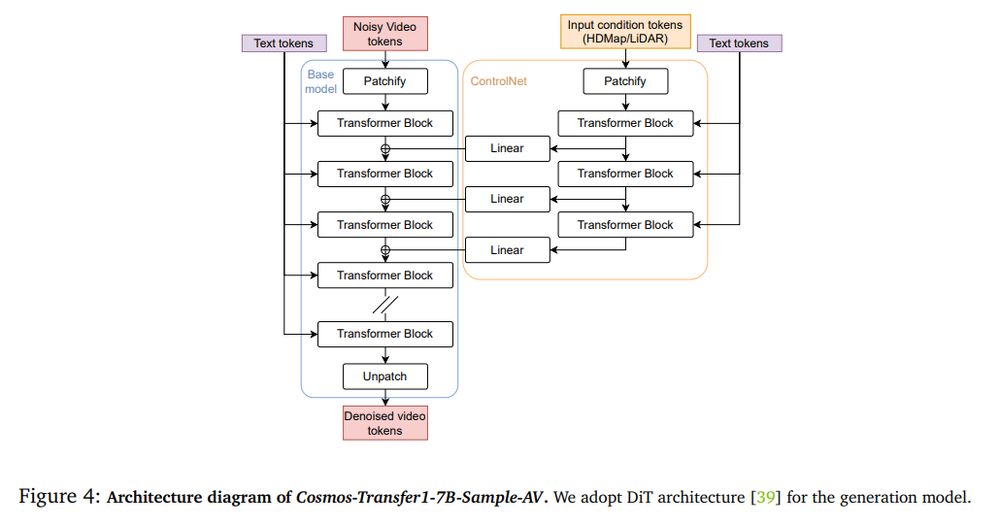

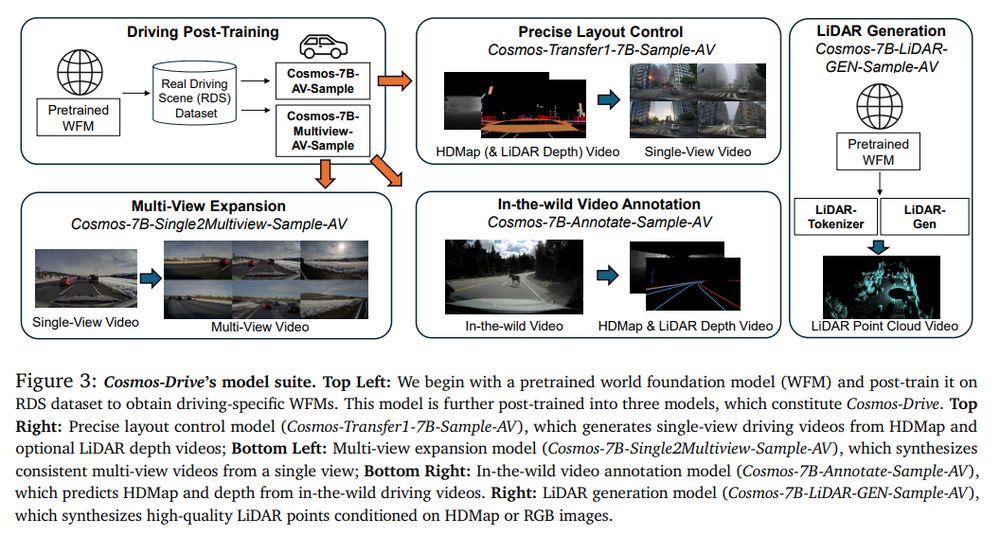

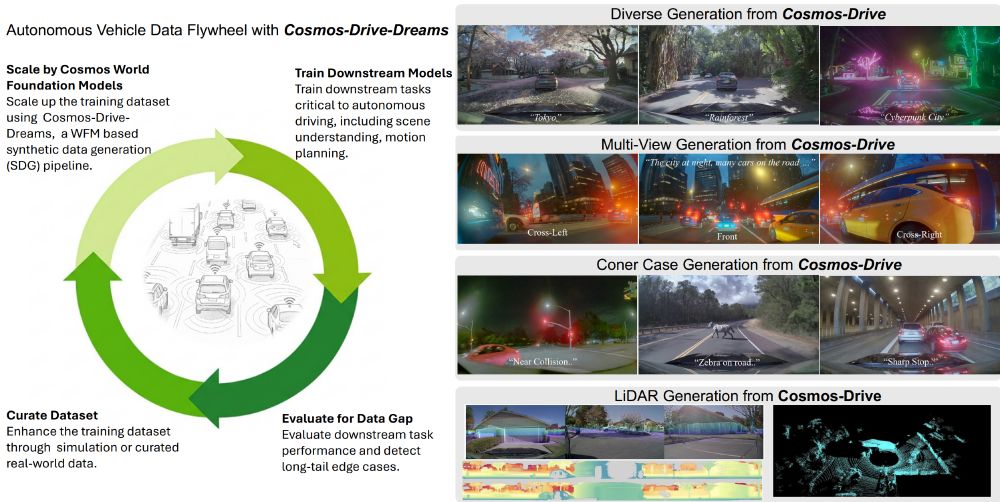

Cosmos-Drive-Dreams: Scalable Synthetic Driving Data Generation with World Foundation Models