Antoine Bosselut

@abosselut.bsky.social

Helping machines make sense of the world. Asst Prof @icepfl.bsky.social; Before: @stanfordnlp.bsky.social @uwnlp.bsky.social AI2 #NLProc #AI

Website: https://atcbosselut.github.io/

Reposted by Antoine Bosselut

Recruiting PhDs & postdocs for:

🤖 agents "taking over" science (hypogenic.ai and 📌)

🧪 Real scientists ➡️AI (e.g., materials, chem, physics)

📜 Theory + incentives for H-AI collab & credit (e.g., formalizing tacit knowledge)

new adventures for me, 🔄 if you can! 🙌

chenhaot.com/recruiting.h...

Chenhao Tan's Homepage - recruiting

Chenhao Tan's Homepage

chenhaot.com

November 3, 2025 at 8:06 PM

If you're interested in doing a postdoc at @icepfl.bsky.social, there's still time to apply for the @epfl-ai-center.bsky.social postdoctoral fellowships.

Apart from this, I'm also recruiting postdocs in developing novel training algorithms for reasoning models and agentic AI.

October 14, 2025 at 5:56 PM

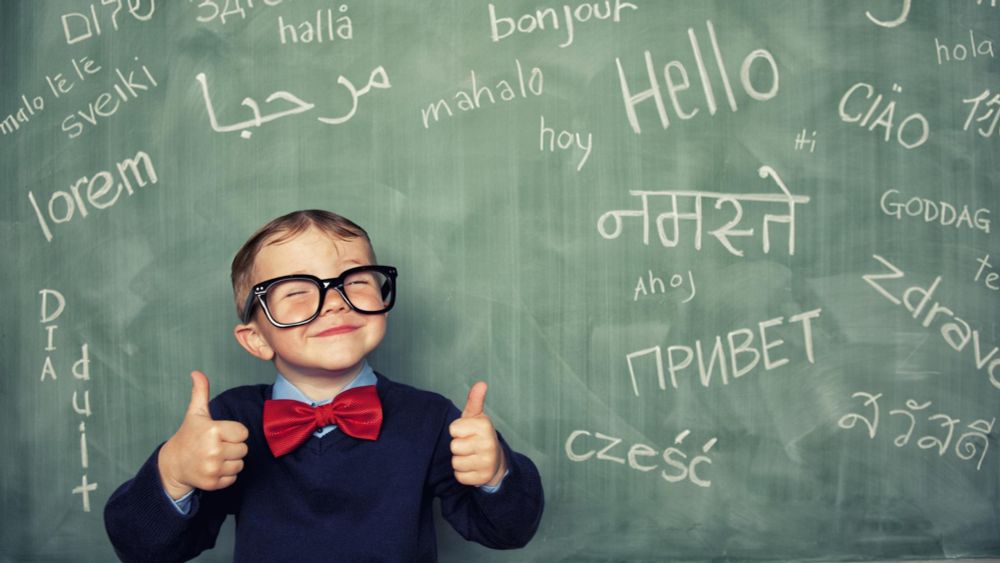

Come join us in 520D (all the way down the hall and around the corner) at #COLM2025 for the first workshop on multilingual and equitable language technologies!

October 10, 2025 at 12:53 PM

Reposted by Antoine Bosselut

Very happy this paper got accepted to NeurIPS 2025 as a Spotlight! 😁

Main takeaway: In mechanistic interpretability, we need assumptions about how DNNs encode concepts in their representations (eg, the linear representation hypothesis). Without them, we can claim any DNN implements any algorithm!

Mechanistic interpretability often relies on *interventions* to study how DNNs work. Are these interventions enough to guarantee the features we find are not spurious? No!⚠️ In our new paper, we show many mech int methods implicitly rely on the linear representation hypothesis🧵

October 1, 2025 at 3:00 PM

Reposted by Antoine Bosselut

What's the right unit of analysis for understanding LLM internals? We explore in our mech interp survey (a major update from our 2024 ms).

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

October 1, 2025 at 2:03 PM

Reposted by Antoine Bosselut

1/🚨 New preprint

How do #LLMs’ inner features change as they train? Using #crosscoders + a new causal metric, we map when features appear, strengthen, or fade across checkpoints—opening a new lens on training dynamics beyond loss curves & benchmarks.

#interpretability

September 25, 2025 at 2:02 PM

Reposted by Antoine Bosselut

💡Can we optimize LLMs to be more creative?

Introducing Creative Preference Optimization (CrPO) and MuCE (Multi-task Creativity Evaluation Dataset).

Result: More novel, diverse, surprising text—without losing quality!

📝 Appearing at #EMNLP2025

September 22, 2025 at 1:43 PM

The next generation of open LLMs should be inclusive, compliant, and multilingual by design. That’s why we (@icepfl.bsky.social, @ethz.ch, @cscsch.bsky.social) built Apertus.

EPFL, ETH Zurich & CSCS just released Apertus, Switzerland’s first fully open-source large language model.

Trained on 15T tokens in 1,000+ languages, it’s built for transparency, responsibility & the public good.

Read more: actu.epfl.ch/news/apertus...

September 3, 2025 at 9:26 AM

Reposted by Antoine Bosselut

EPFL, @ethz.ch and the @cscsch.bsky.social released Apertus today, Switzerland’s first large-scale, open, multilingual language model — a milestone in generative AI for transparency and diversity.

Find out more here: ai.epfl.ch/apertus-a-fu...

@abosselut.bsky.social @icepfl.bsky.social

Apertus: a fully open, transparent, multilingual language model - EPFL AI Center

EPFL, ETH Zurich and the Swiss National Supercomputing Centre (CSCS) released Apertus today, Switzerland’s first large-scale, open, multilingual language model — a milestone in generative AI for trans...

ai.epfl.ch

September 2, 2025 at 9:46 AM

Reposted by Antoine Bosselut

EPFL, ETH Zurich & CSCS just released Apertus, Switzerland’s first fully open-source large language model.

Trained on 15T tokens in 1,000+ languages, it’s built for transparency, responsibility & the public good.

Read more: actu.epfl.ch/news/apertus...

September 2, 2025 at 11:48 AM

Reposted by Antoine Bosselut

Very happy to see that Pleias multilingual data processing pipelines have contributed to the largest open pretraining project in Europe.

From their tech report: huggingface.co/swiss-ai/Ape...

September 2, 2025 at 4:46 PM

Reposted by Antoine Bosselut

Switzerland is entering the race for large language models. Under the name #Apertus, @ethz.ch, @icepfl.bsky.social and @cscsch.bsky.social are releasing the country's first fully open, multilingual #LLM.

I wrote a short analysis of Apertus for MAZ:

www.maz.ch/news/apertus...

Apertus: a new language model for Switzerland

www.maz.ch

September 2, 2025 at 8:33 AM

Reposted by Antoine Bosselut

recently gave a talk on <Reality Checks> at two venues, and discussed (and rambled) about how leaderboard chasing is awesome (and we want it to continue) but that this isn't easy because everyone (me! me! me!) wants to write more papers.

the link to the slide deck in the reply.

August 12, 2025 at 2:04 AM

Reposted by Antoine Bosselut

🚨New Preprint!

In multilingual models, the same meaning can take far more tokens in some languages, penalizing users of underrepresented languages with worse performance and higher API costs. Our Parity-aware BPE algorithm is a step toward addressing this issue: 🧵

August 11, 2025 at 12:28 PM

The EPFL NLP lab is looking to hire a postdoctoral researcher on the topic of designing, training, and evaluating multilingual LLMs:

docs.google.com/document/d/1...

Come join our dynamic group in beautiful Lausanne!

EPFL NLP Postdoctoral Scholar Posting - Swiss AI LLMs

The EPFL Natural Language Processing (NLP) lab is looking to hire a postdoctoral researcher candidate in the area of multilingual LLM design, training, and evaluation. This postdoctoral position is as...

docs.google.com

August 4, 2025 at 3:54 PM

Reposted by Antoine Bosselut

📣 Life update: Thrilled to announce that I’ll be starting as faculty at the Max Planck Institute for Software Systems this Fall!

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

July 22, 2025 at 4:12 AM

Reposted by Antoine Bosselut

EPFL and ETH Zürich are building together a Swiss made LLM from scratch.

Fully open and multilingual, the model is trained on CSCS's supercomputer "Alps" and supports sovereign, transparent, and responsible AI in Switzerland and beyond.

Read more here: ai.epfl.ch/a-language-m...

#ResponsibleAI

A language model built for the public good - EPFL AI Center

ETH Zurich and EPFL will release a large language model (LLM) developed on public infrastructure. Trained on the “Alps” supercomputer at the Swiss National Supercomputing Centre (CSCS), the new LLM ma...

ai.epfl.ch

July 9, 2025 at 7:26 AM

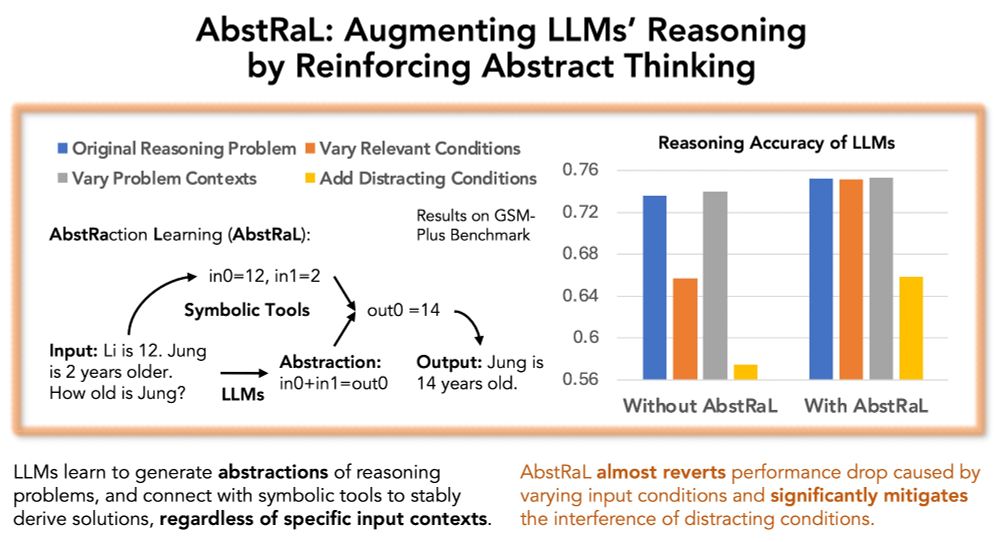

Check out Silin's paper done in collaboration with Apple on reinforcing abstract thinking in reasoning traces!

NEW PAPER ALERT: Recent studies have shown that LLMs often lack robustness to distribution shifts in their reasoning. Our paper proposes a new method, AbstRaL, to augment LLMs’ reasoning robustness, by promoting their abstract thinking with granular reinforcement learning.

June 23, 2025 at 6:55 PM

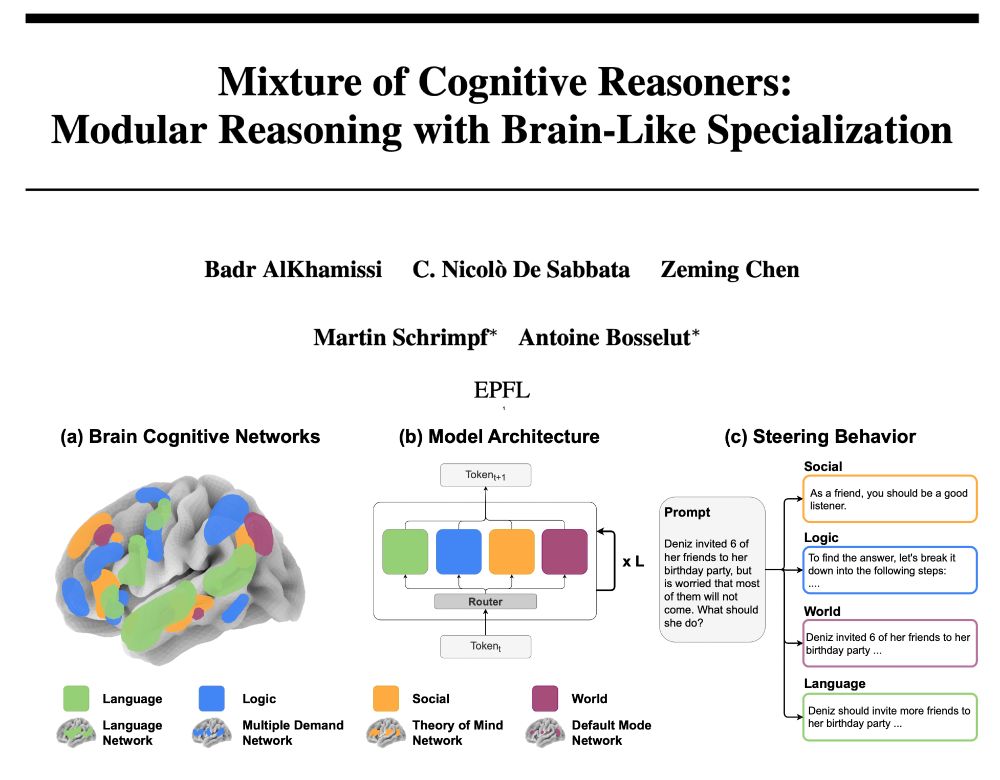

Check out @bkhmsi.bsky.social's great work on mixture-of-experts models that are specialized to represent the behavior of known brain networks.

🚨 New Preprint!!

Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architecture inspired by the brain’s functional networks: language, logic, social reasoning, and world knowledge.

1/ 🧵👇

June 18, 2025 at 10:46 AM

Reposted by Antoine Bosselut

Many AI models speak dozens of languages, but do they grasp cultural context? 🗣️🌍

The INCLUDE benchmark from EPFL's NLP Lab and @cohereforai.bsky.social reveals that there is still a gap...

👉 Find out how benchmarks like INCLUDE can help make AI truly inclusive: actu.epfl.ch/news/beyond-...

Beyond translation – making AI multicultural

A team of international researchers led by EPFL developed a multilingual benchmark to determine large language models' ability to grasp cultural context.

actu.epfl.ch

June 2, 2025 at 1:20 PM

Reposted by Antoine Bosselut

I guess that now that I have 1% of my Twitter followers follow me here 😅, I should announce it here too for those of you no longer checking Twitter: my nonfiction book, "Lost in Automatic Translation" is coming out this July: lostinautomatictranslation.com. I'm very excited to share it with you!

May 27, 2025 at 7:16 PM

Reposted by Antoine Bosselut

Super excited to share that our paper "A Logical Fallacy-Informed Framework for Argument Generation" has received the Outstanding Paper Award 🎉🎉 at NAACL 2025!

Paper: aclanthology.org/2025.naacl-l...

Code: github.com/lucamouchel/...

#NAACL2025

May 1, 2025 at 1:41 PM

Reposted by Antoine Bosselut

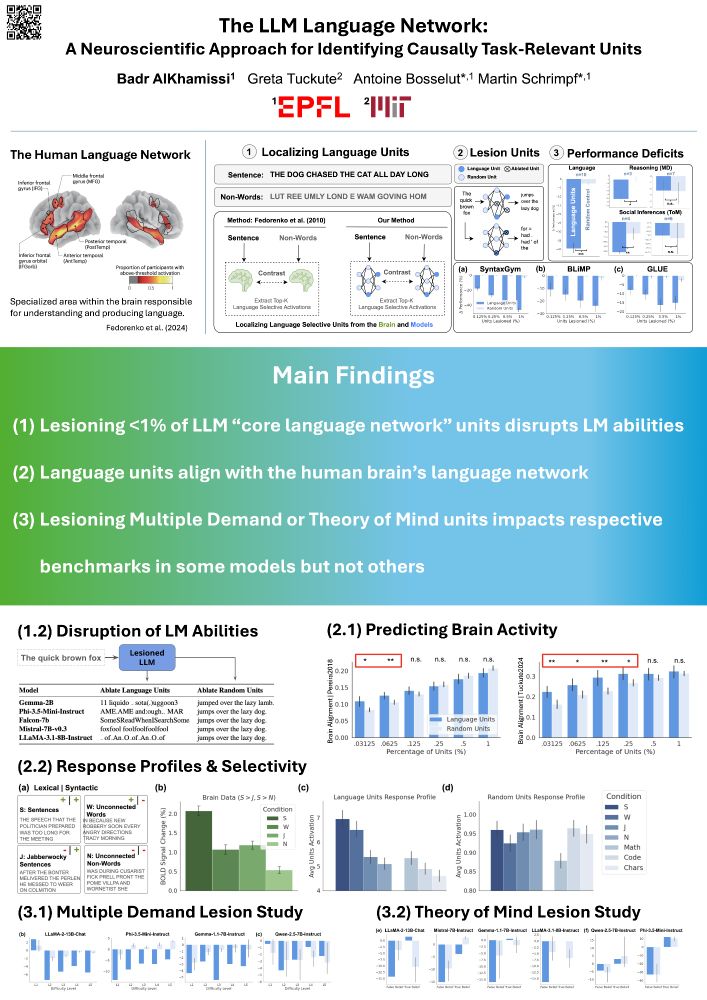

Excited to be at #NAACL2025 in Albuquerque! I’ll be presenting our paper “The LLM Language Network” as an Oral tomorrow at 2:00 PM in Ballroom C, hope to see you there!

Looking forward to all the discussions! 🎤 🧠

April 30, 2025 at 12:38 AM
