Statistical ML, Generalization, Uncertainty, Empirical Bayes

https://yulisl.github.io/

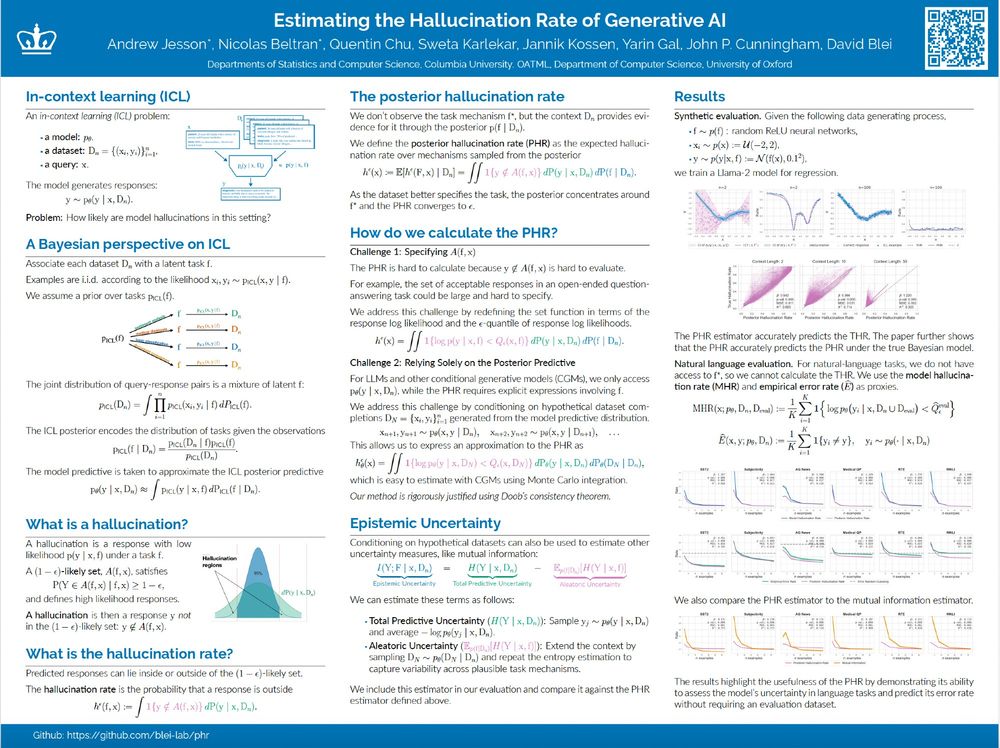

We will be presenting "Estimating the Hallucination Rate of Generative AI" at NeurIPS. Come if you'd like to chat about epistemic uncertainty for In-Context Learning, or uncertainty more generally. :)

Location: East Exhibit Hall A-C #2703

Time: Friday @ 4:30

Paper: arxiv.org/abs/2406.07457

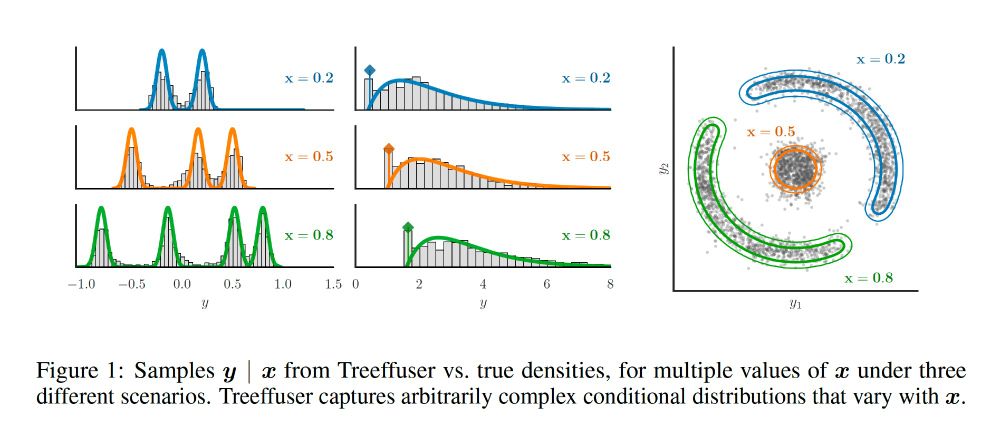

joint work with @velezbeltran.bsky.social @maggiemakar.bsky.social @anndvision.bsky.social @bleilab.bsky.social Adria @far.ai Achille and Caro

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)
