Work on unsupervised learning, #DeepLearning, and #Neuroscience in a vibrant research environment.

👇 Details below

🧵

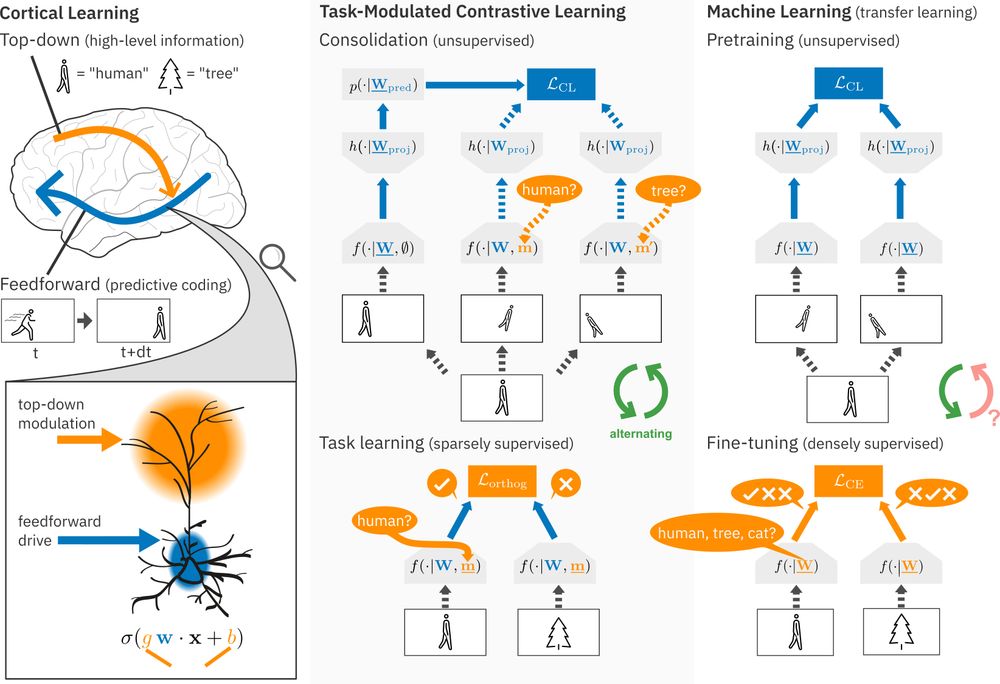

Continual learning methods struggle in mostly unsupervised environments with sparse labels (e.g., a parent telling their child that an object is an 'apple').

We propose that in the cortex, predictive coding of high-level top-down modulations solves this! (1/6)

Vision models often struggle to learn transformation-invariant and transformation-equivariant representations at the same time.

@hafezghm.bsky.social shows that self-supervised prediction with proper inductive biases achieves both simultaneously. (1/4)

#MLSky #NeuroAI

Can we simultaneously learn transformation-invariant and transformation-equivariant representations with self-supervised learning?

TL;DR Yes! This is possible via simple predictive learning & architectural inductive biases – without extra loss terms and predictors!

🧵 (1/10)

www.nature.com/articles/s42...

maxwellforbes.com/posts/how-to...

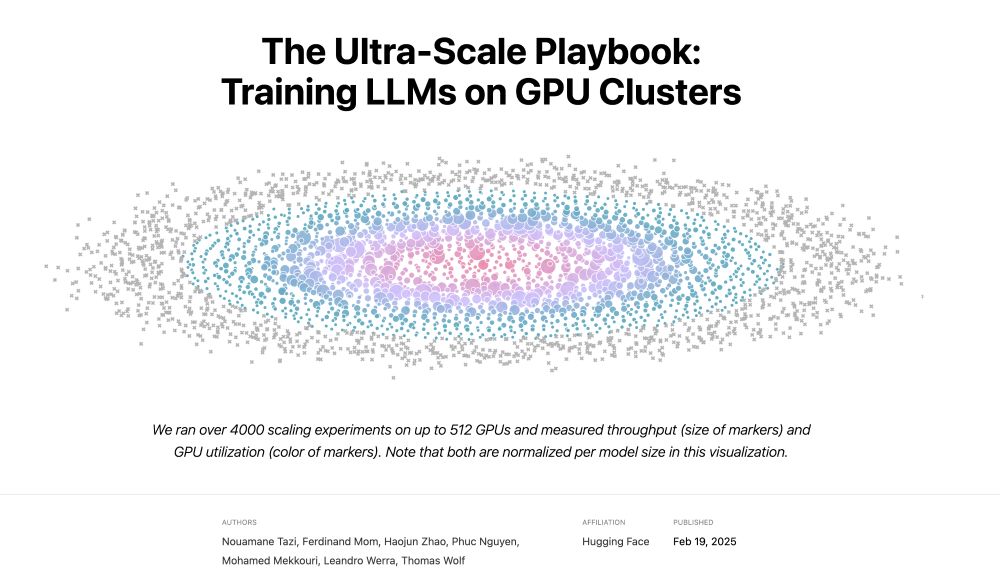

A book to learn all about 5D parallelism, ZeRO, and CUDA kernels, plus how and why to overlap compute and communication, with theory, motivation, interactive plots, and 4000+ experiments!