🔍 We show that given access to a reference, BLEU can match reward models in human preference agreement, and even train LLMs competitively with them using GRPO.

🫐 Introducing BLEUBERI:

✅ Humans achieve 85% accuracy

❌ OpenAI Operator: 24%

❌ Anthropic Computer Use: 14%

❌ Convergence AI Proxy: 13%

We create ONERULER 💍, a multilingual long-context benchmark that allows for nonexistent needles. Turns out needle-in-a-haystack (NIAH) isn't so easy after all!

Our analysis across 26 languages 🧵👇

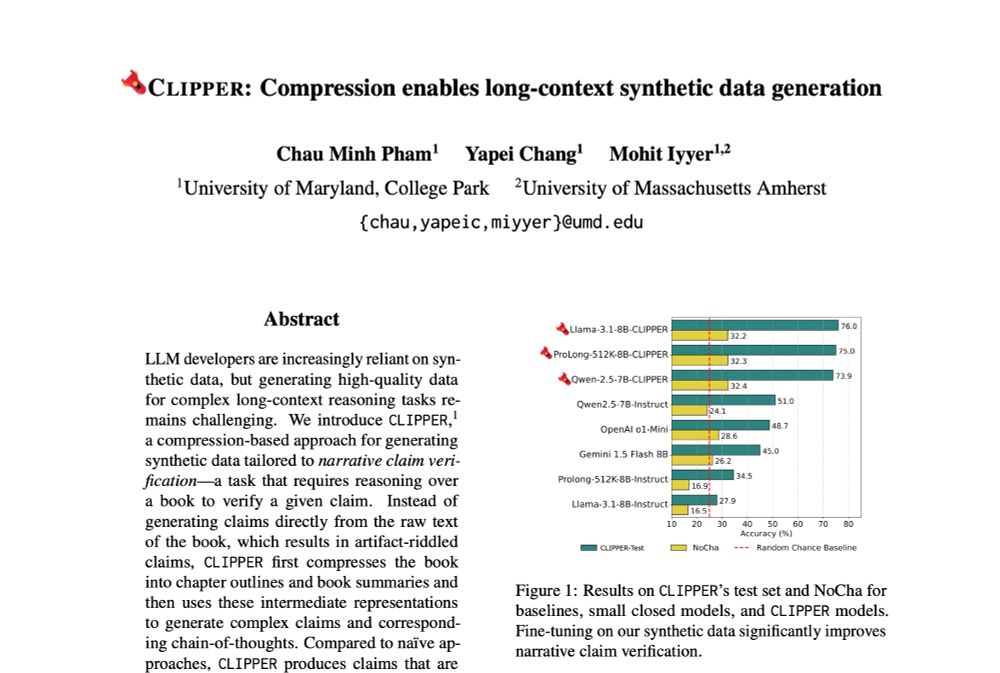

💽 Check out CLIPPER, a pipeline that generates data by conditioning on compressed forms of long input documents!

We present CLIPPER ✂️, a compression-based pipeline that produces grounded instructions for ~$0.50 each, 34x cheaper than human annotations.

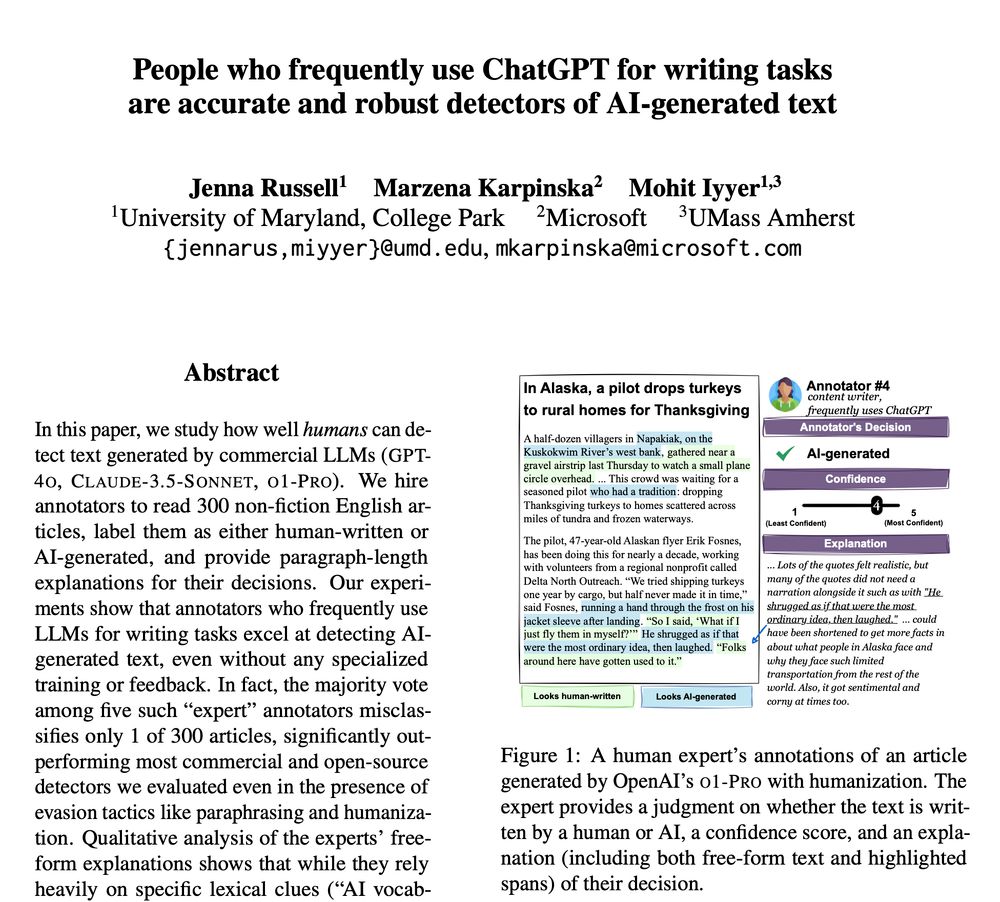

Turns out that while the general population is unreliable, people who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy 🎯

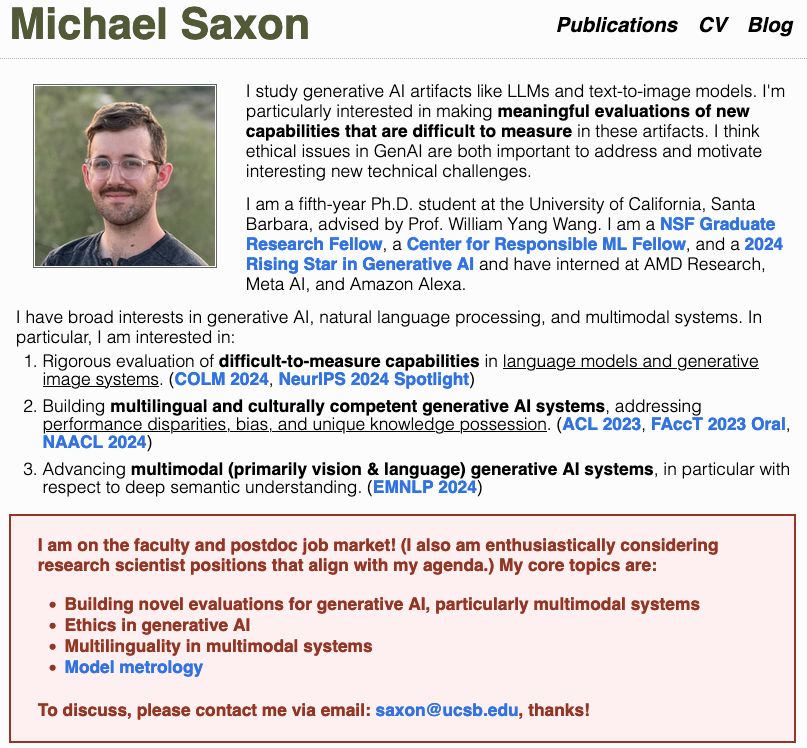

I'm searching for faculty positions/postdocs in multilingual/multicultural NLP, vision+language models, and eval for genAI!

I'll be at #NeurIPS2024 presenting our work on meta-evaluation for text-to-image faithfulness! Let's chat there!

Papers in 🧵, see more: saxon.me

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

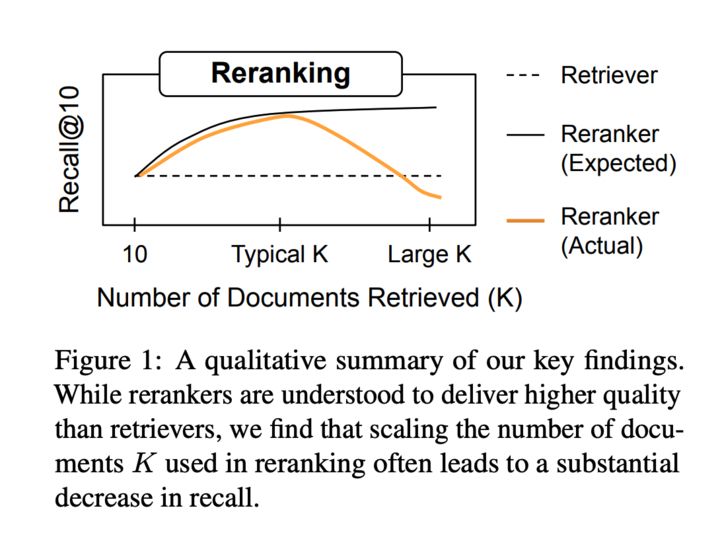

It's time to revisit common assumptions in IR! Embeddings have improved drastically, but mainstream IR evals have stagnated since MS MARCO + BEIR.

We ask: on private or tricky IR tasks, are rerankers better? Surely, reranking many docs is best?

My research centers on advancing Responsible AI, specifically enhancing factuality, robustness, and transparency in AI systems.

If you have relevant positions, let me know! lasharavichander.github.io Please share/RT!

We present Suri 🦙: a dataset of 20K long-form texts & LLM-generated, backtranslated instructions with complex constraints.

📎 arxiv.org/abs/2406.19371

- 6k reasoning tokens are often not enough to get an answer, and allowing more means only short books can be processed

- OpenAI adds something to the prompt: ~8k extra tokens -> less room for book + reasoning + generation!

Aside from this, I'd love to chat about:

• long-context training

• realistic & hard eval

• synthetic data

• tbh any cool projects people are working on

Also, I'm on the lookout for a summer 2025 internship!

I’m on the academic job market so please reach out if you would like to chat 🙏

And come talk to me, @rnv.bsky.social and @iaugenstein.bsky.social on Thu (Nov 14) at poster session G from 2-3:30PM about LLM tropes!

We introduce tropes: repeated and consistent phrases which LLMs generate to argue for political stances.

Read the paper to learn more! arxiv.org/abs/2406.19238

Work done at Uni Copenhagen + Pioneer Center for AI

To counteract, I started collecting this list. Who else should I be following & add? 👇 go.bsky.app/LaGDpqg

🍝 Secret sauce? A really, really high-quality set of image+text pairs, which we'll release openly

🕹️ try it out: molmo.allenai.org

📎 read more about it: molmo.allenai.org/blog

🤗 download models: huggingface.co/collections/...