We also have another oral presentation for DepthFM on March 1, 2:30 pm-3:45 pm.

🤨Interested? Check out our latest work at #AAAI25:

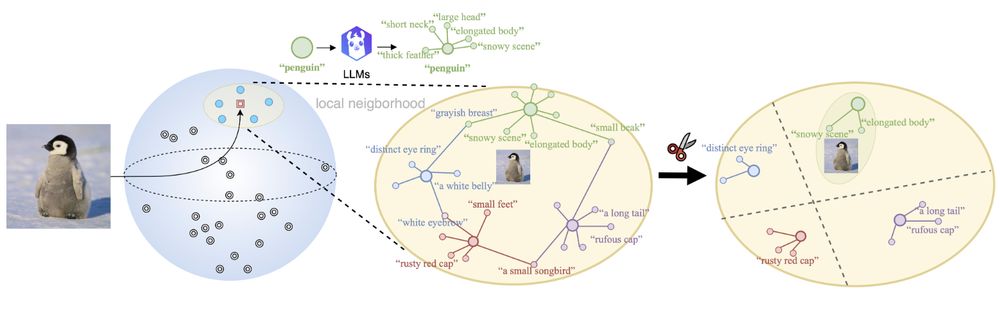

💻Code and 📝Paper at: github.com/CompVis/DisCLIP

🧵👇

We showed exactly that for a fundamental task:

semantic correspondence📍

A thread 🧵👇

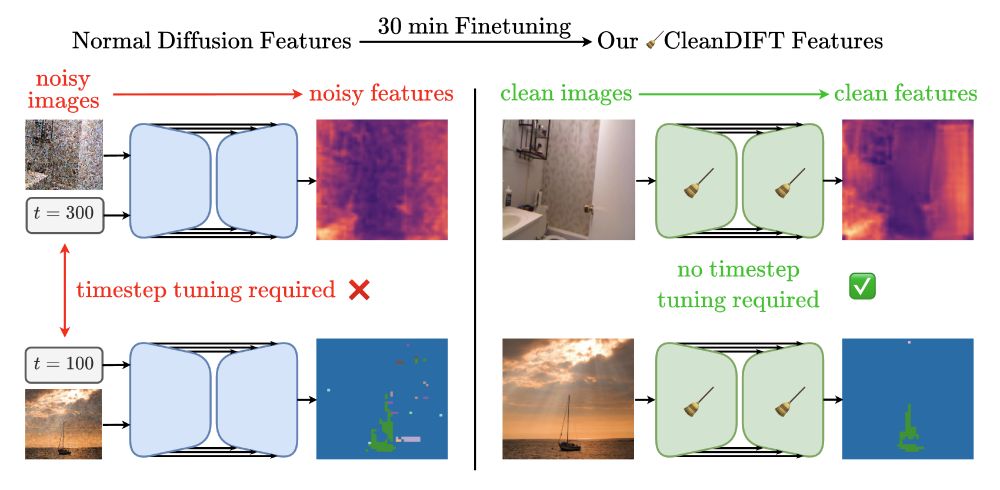

Yes, it is, but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

vladimiryugay.github.io/magic_slam/i...

1/7