📝 C-ratings are used in research across Cognitive Science

💶 They take time and money to collect

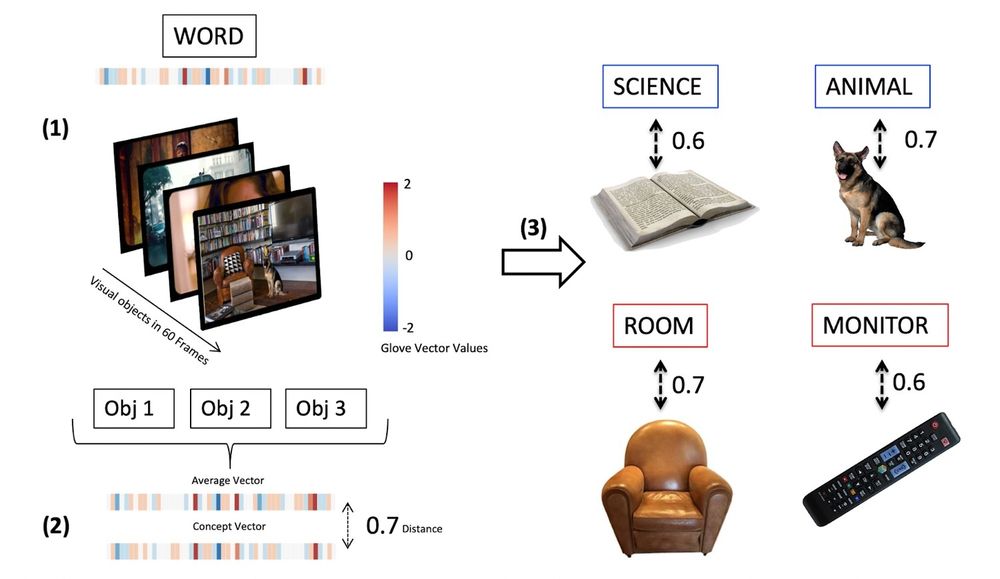

⚙️ Automation solves this + we get in-context ratings for free!

www.nature.com/articles/s44...

@viktorkewenig.bsky.social shows that, while concepts generally encode habitual experiences, the underlying neurobiological organisation is not fixed but depends dynamically on available contextual information. 👏🍾🐐

elifesciences.org/articles/91522