We want to provide support for onboarding users and contributors and have planned a `meet the devs` session on the 21st of November 2025 from 16:00–18:00.

This will be hosted on Zoom. Contact us via Bluesky or email for the link.

See you there?

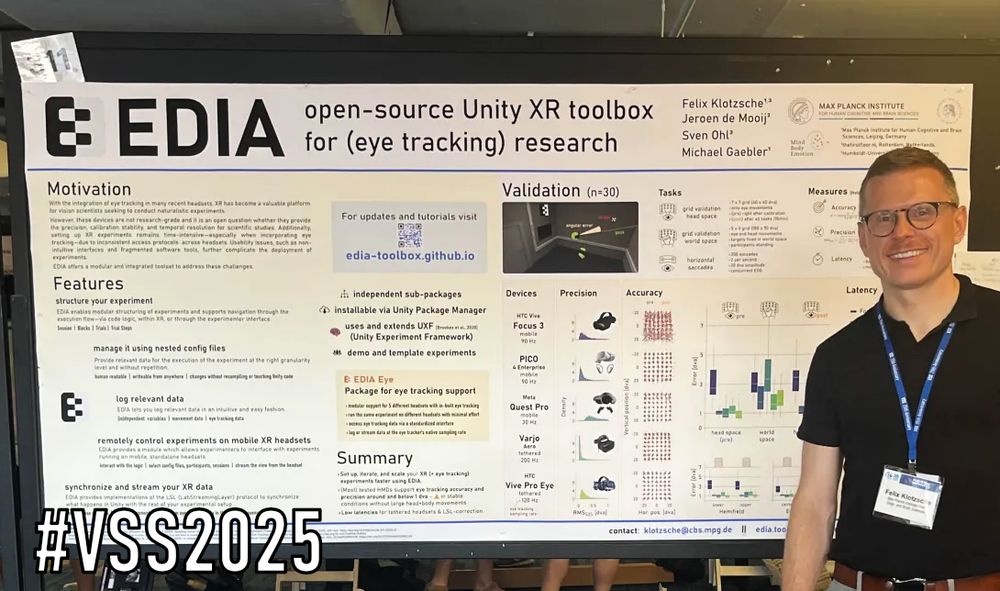

EDIA XR Toolbox for research is now publicly available online.

Check out edia-toolbox.github.io for more details.

Minor updates are to be expected in the coming weeks.

We are excited and are planning an online Zoom session for interested parties soon.

@fra-malandrone.bsky.social

@lucyroe.bsky.social

A. Ciston

@thefirstfloor.bsky.social

A. Villringer

S. Carletto

@michaelgaebler.com

#neuroskyence #vr #emotion #affect #selfreports

#tradeshow #interactive #unity #gamification

Have a look at: www.behance.net/gallery/2263...

We had a packed 4.5 hours of great discussions on XR research tools & eye tracking.

✨ Stay tuned, open-source release coming soon!

🔗 edia-toolbox.github.io

#XR #Research #Academic #OpenSource

Built in Unity + tested in real labs.

👁️ Bonus: we assess accuracy, precision & latency of eye tracking in 5 VR headsets using EDIA.

📍Poster 311 – Tue, 8:30–12:30, Banyan Breezeway.

#XR #OpenSource #Unity3D

We developed, empirically evaluated and openly share **AffectTracker**, a new tool to collect continuous ratings of two-dimensional (valence and arousal) affective experience **during** dynamic emotional stimulation (e.g., 360° videos) in immersive VR! 🥽🧠🟦

📆 March 10-12, 2025

📍 Berlin & online

🔎 mindbrainbody.de

Keynotes:

- Ivan de Araujo

- Nadine Gogolla

- Maria Ribeiro @ribeironeuro.bsky.social

- Markus Ullsperger

- Tor Wager

- Veronica Witte @veronicawitte.bsky.social

#interoception
