Check out the exciting agenda! 👉 srcc.stanford.edu/lug2025/agenda

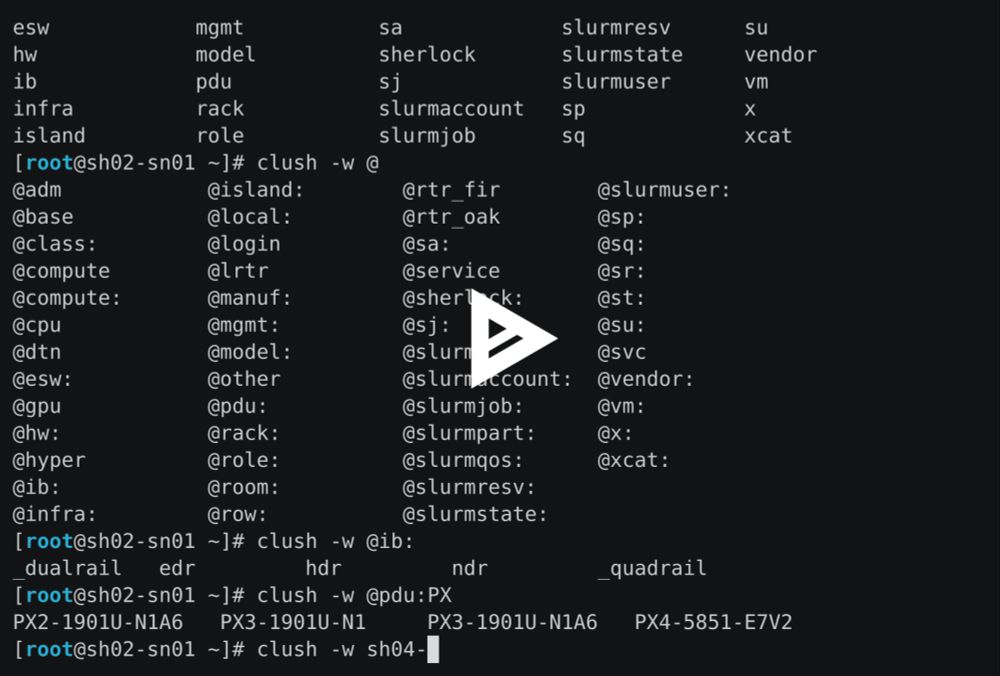

asciinema.org/a/699526

Check out my LAD'24 presentation:

www.eofs.eu/wp-content/u...

github.com/stanford-rc/...

github.com/stanford-rc/...

The scratch file system of Sherlock, Stanford's HPC cluster, has been revamped to provide 10 PB of fast flash storage on Lustre

news.sherlock.stanford.edu/publications...
