Computer Vision & Deep Learning

www.career.tu-darmstadt.de/tu-darmstadt...

🌍 visinf.github.io/recover

Talk: Tue 09:30 AM, Kalakaua Ballroom

Poster: Tue 11:45 AM, Exhibit Hall I #76

Work by Jannik Endres, @olvrhhn.bsky.social, Charles Cobière, @simoneschaub.bsky.social, @stefanroth.bsky.social and Alexandre Alahi.

@ellis.eu @tuda.bsky.social

ellis.eu/news/ellis-p...

by @dustin-carrion.bsky.social, @stefanroth.bsky.social, and @simoneschaub.bsky.social

🌍: visinf.github.io/emat

Poster: Wednesday, 03:30 PM, Poster 8

by @skiefhaber.de, @stefanroth.bsky.social, and @simoneschaub.bsky.social

🌍: visinf.github.io/recover

Poster: Friday, 10:30 AM, Poster 14

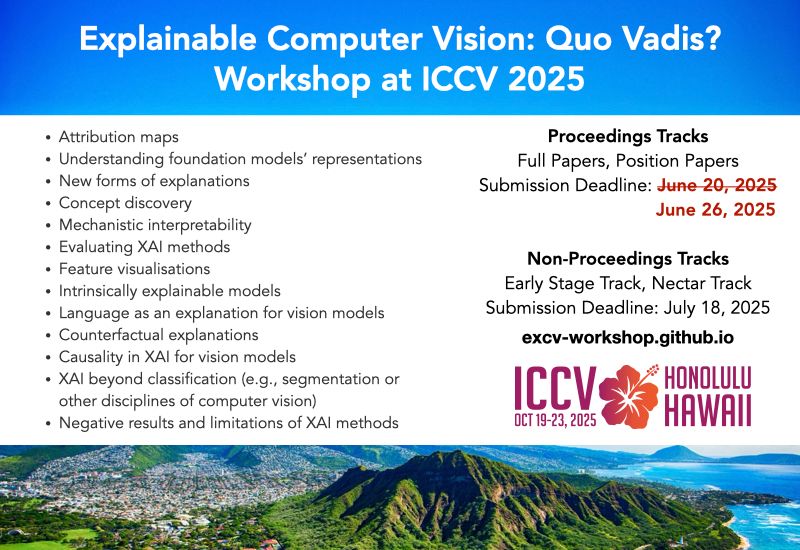

We welcome you to voice your opinion on the state of XAI. You get 5 minutes to speak (in-person only) during the workshop.

📷 Submit your proposals here: lnkd.in/d7_EWKXp

For more details: lnkd.in/dpYWVYXS

@iccv.bsky.social #ICCV2025 #eXCV

Non-Proceedings track closes in 2 days!

Be sure to submit on time!

We are awaiting your submissions!

More info at: excv-workshop.github.io

@iccv.bsky.social #ICCV2025 #eXCV

🗓️ Submissions open until June 26 AoE.

📄 Got accepted to ICCV? Congrats! Consider our non-proceedings track.

#ICCV2025 @iccv.bsky.social

@iccv.bsky.social

Overwhelmingly happy to be part of RAI & continue working with the smart minds at TU Darmstadt & hessian.AI, while also seeing my new home at Uni Bremen achieve a historic success in the excellence strategy!

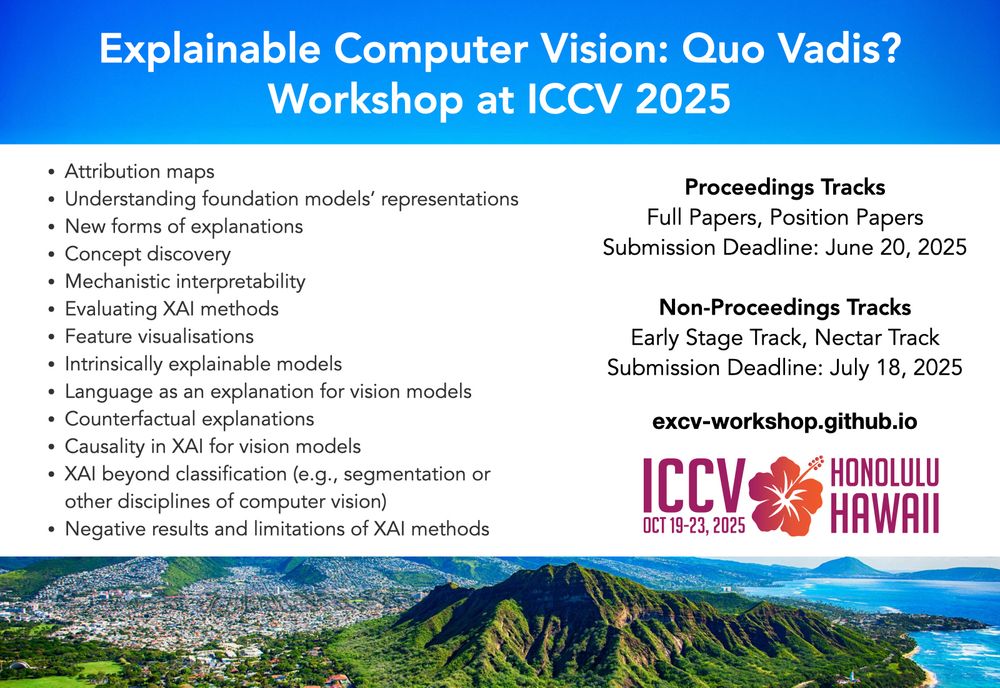

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

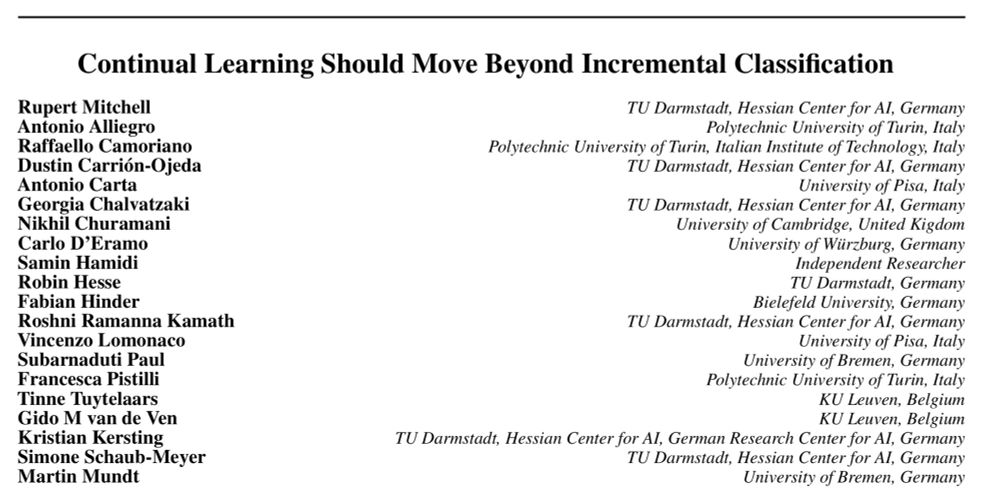

In our new collaborative paper w/ many amazing authors, we argue that “Continual Learning Should Move Beyond Incremental Classification”!

We highlight 5 examples to show where CL algos can fail & pinpoint 3 key challenges

arxiv.org/abs/2502.11927

"RAI" is one of the projects with which TU Darmstadt (TUDa) is applying for a Cluster of Excellence.

www.youtube.com/watch?v=2VAm...

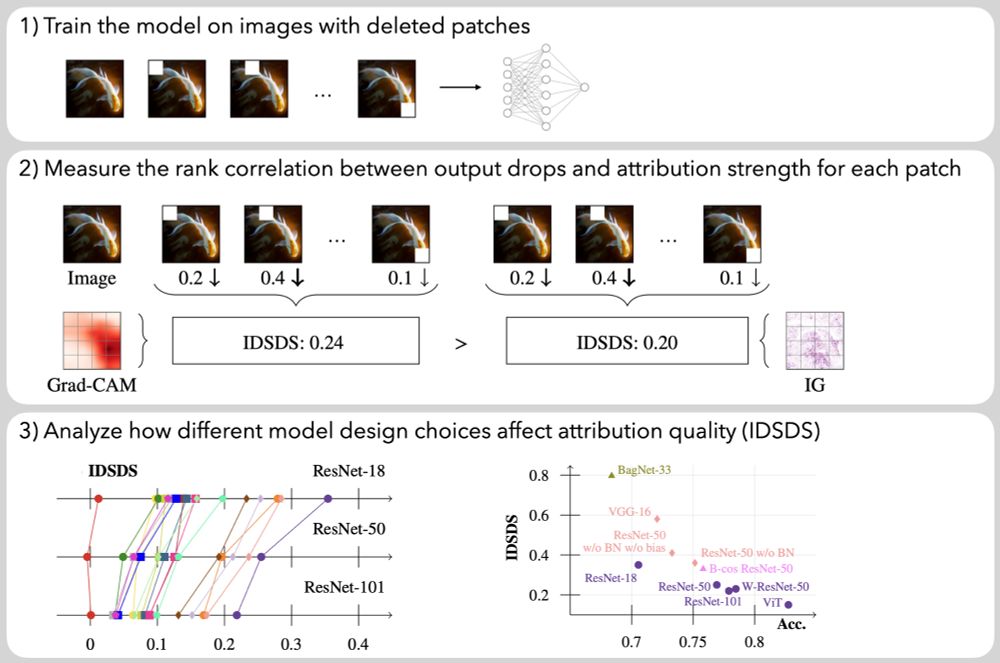

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds

visinf.github.io/primaps/

PriMaPs generate masks from self-supervised features, making it possible to boost unsupervised semantic segmentation via stochastic EM.
