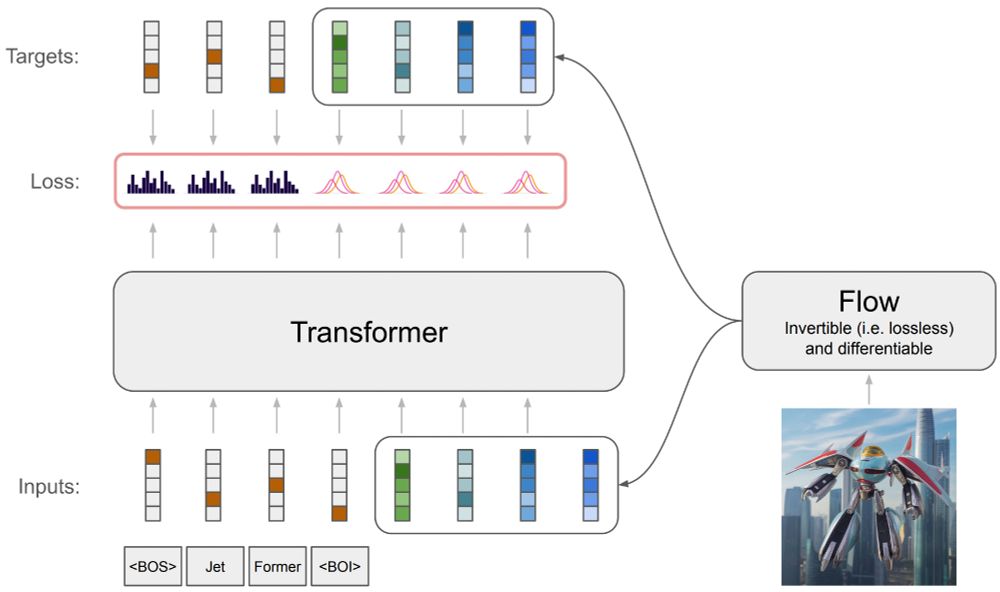

We pondered this over the summer and developed a new model: JetFormer 🌊🤖

arxiv.org/abs/2411.19722

A thread 👇

1/

Excited to be here! To get started, here is our latest work:

RoMo: Robust Motion Segmentation Improves Structure from Motion

romosfm.github.io

Boost the performance of your SfM pipeline on dynamic scenes! 🚀 RoMo masks dynamic objects in a video, in a zero-shot manner.

🧵👇