- Update to Gemini 2.0 Flash Thinking (experimental) in Gemini

- Deep Research is now powered by Gemini 2.0 Flash Thinking (experimental) and available to more Gemini users at no cost

A huge jump on SWE-bench 🔥

It seems you will be able to try the thinking experience only once, though.

1) It echoes the story from multiple labs about the confidence of scaling up to AGI fast (but you don't have to believe them)

2) There is no clear vision of what that world looks like

3) The labs are placing the burden on policymakers to decide what to do with what they make

If you want to learn more, see this awesome book "How to Scale Your Model":

jax-ml.github.io/scaling-book/

Put together by several of my Google DeepMind colleagues listed below 🎉.

They are building the best reasoning datasets out in the open.

Building off their work with Stratos, today they are releasing OpenThoughts-114k and OpenThinker-7B.

Repo: github.com/open-thought...

Explore Agent Recipes: Explore common agent recipes with ready-to-copy code to improve your LLM applications. These agent recipes are largely inspired by Anthropic's article.

www.agentrecipes.com

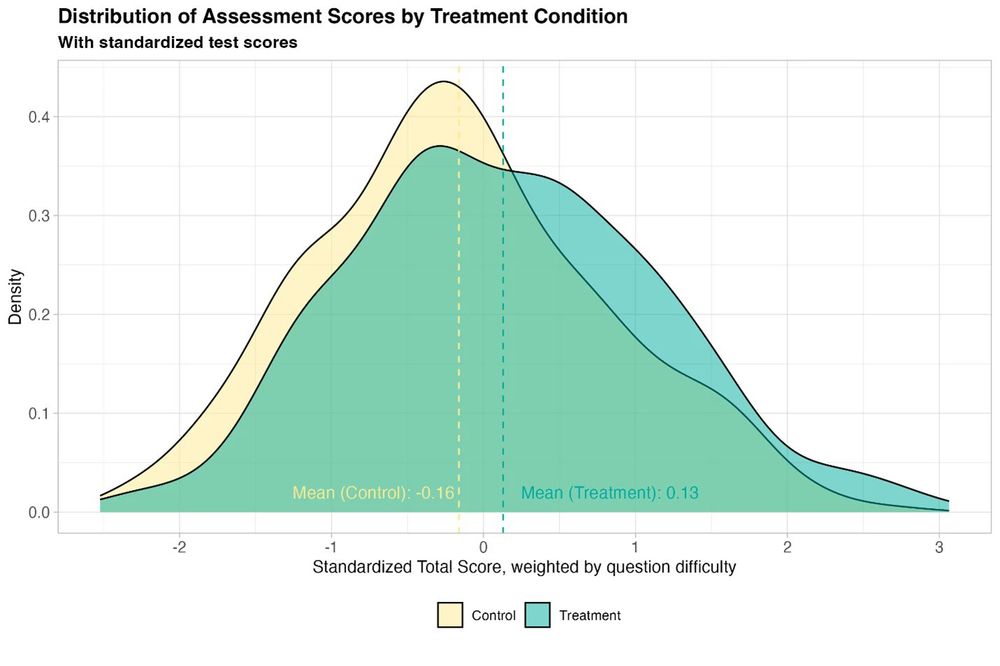

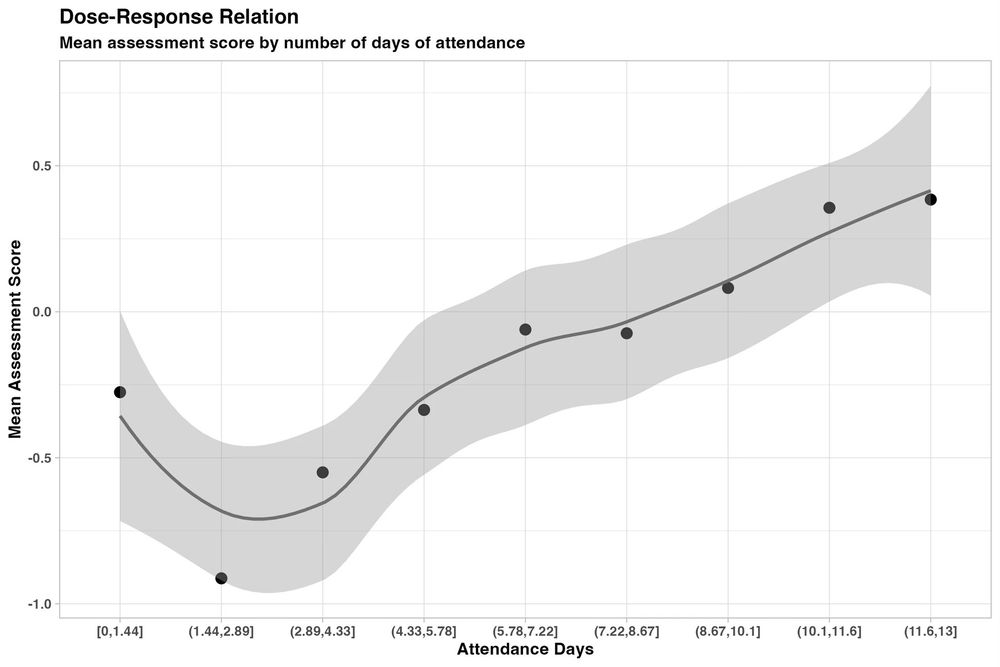

And it helped all students, especially girls who were initially behind.

Add `from:me` to find your own posts

Add `to:me` to find replies/posts that mention you

Add `since:YYYY-MM-DD` and/or `until:YYYY-MM-DD` to specify a date range

Add `domain:theonion.com` to find posts linking to The Onion

See more at

bsky.social/about/blog/0...
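
As a rough illustration of how the operators above combine, here is a minimal Python sketch that assembles a search string from them. The function name `build_search_query` and its parameters are hypothetical helpers made up for this example; only the operator syntax (`from:`, `to:`, `since:`, `until:`, `domain:`) comes from the post, and the sketch assumes operators are simply joined with spaces.

```python
from datetime import date
from typing import Optional


def build_search_query(
    terms: str = "",
    from_user: Optional[str] = None,
    to_user: Optional[str] = None,
    since: Optional[date] = None,
    until: Optional[date] = None,
    domain: Optional[str] = None,
) -> str:
    """Join free-text terms and search operators into one query string.

    Hypothetical helper: it only formats text and does not call any API.
    """
    parts = []
    if terms.strip():
        parts.append(terms.strip())
    if from_user:
        parts.append(f"from:{from_user}")      # e.g. from:me
    if to_user:
        parts.append(f"to:{to_user}")          # e.g. to:me
    if since:
        parts.append(f"since:{since.isoformat()}")  # YYYY-MM-DD
    if until:
        parts.append(f"until:{until.isoformat()}")  # YYYY-MM-DD
    if domain:
        parts.append(f"domain:{domain}")       # e.g. domain:theonion.com
    return " ".join(parts)


if __name__ == "__main__":
    query = build_search_query(
        "satire",
        from_user="me",
        since=date(2025, 1, 1),
        domain="theonion.com",
    )
    print(query)
```

The resulting string can be pasted into the search box as-is; whether the order of operators matters is not stated in the post, so the order used here is arbitrary.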

- Better memory efficiency and improved performance.

- Perfect for handling longer context windows

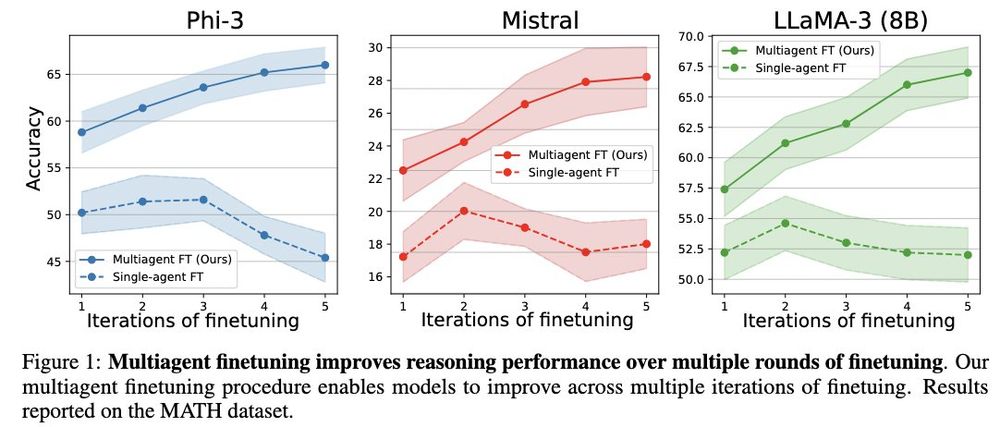

Instead of self-improving a single LLM, they self-improve a population of LLMs initialized from a base model. This enables consistent self-improvement over multiple rounds.

Project: llm-multiagent-ft.github.io

I pasted those into Veo 2 verbatim & overlaid the poem for reference (I also asked for one additional scene revealing the Lady, again using the verbatim prompt)

"Generate an interactive mind map for all the sources in the notebook"

"Optimizing LLM Test-Time Compute Involves Solving a Meta-RL Problem"

blog.ml.cmu.edu/2025/01/08/o...

arxiv.org/abs/2405.06161

How soon will we be getting isomorphic code that changes as you speak? 👀
