He/him.

bsky.app/profile/did:...

Be smart, do NOT trust fanxiang with your data.

bsky.app/profile/did:...

Already using Zed? Share this with someone who needs a nudge.

Me: Oh don't mind me, I'm just downloading some models from HuggingFace.

The webmail UI is a slight downgrade, but the knobs you get for spam control, etc. are wonderful. It's also cheaper. And my main reason for switching: up to 25 custom domains.

Based on the number of tokens claude-code has gone through in a month, I'm expecting to use up the allocated token quota fairly quickly with Junie.

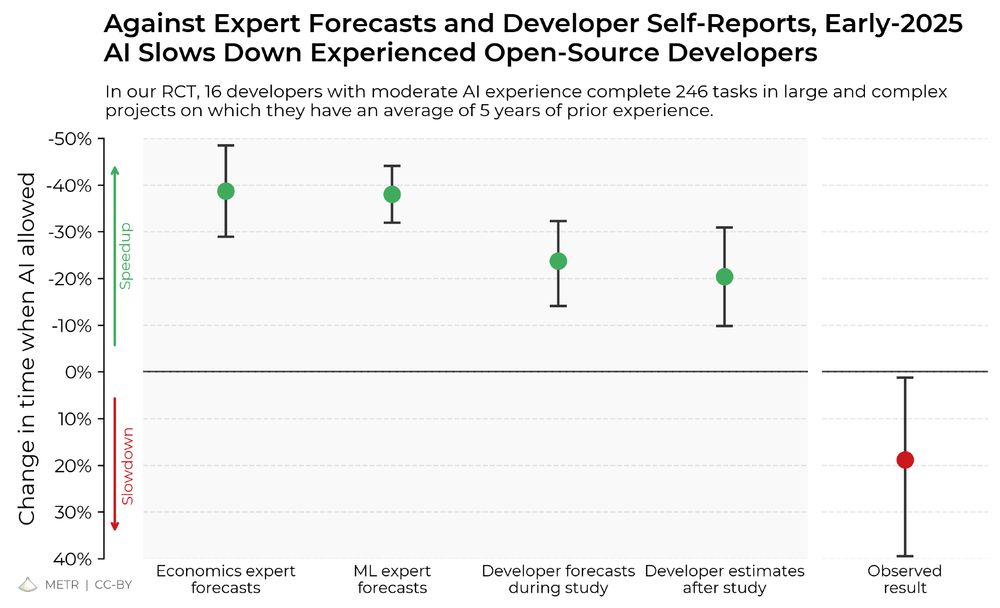

The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.