Our method generates large sets of images using significantly less compute than standard diffusion.

📎https://ddecatur.github.io/hierarchical-diffusion/

1/

Check out our paper: arxiv.org/abs/2508.08228

We’re still actively developing LL3M, and we’d love to hear your thoughts! 7/

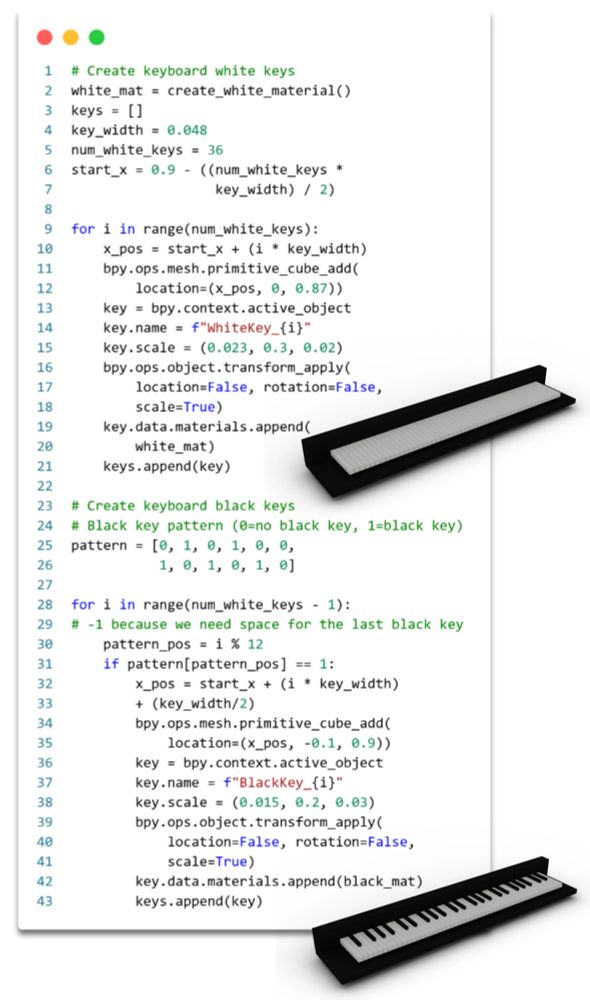

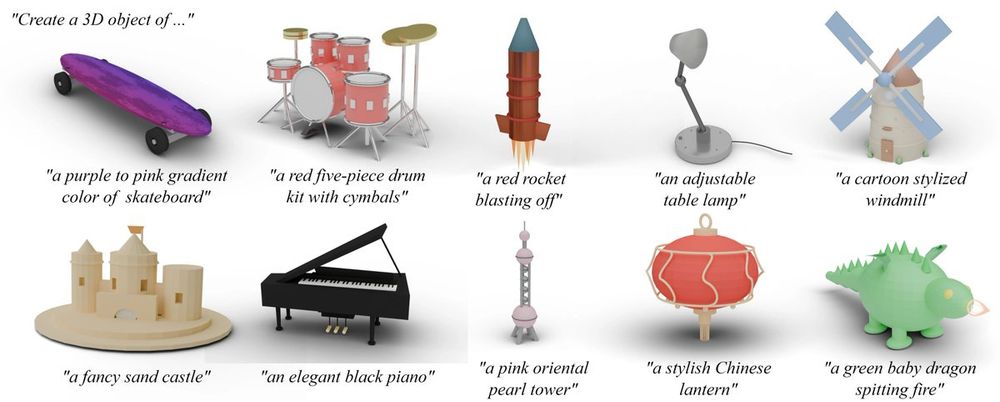

1️⃣ Initial Creation → break prompt into subtasks, retrieve relevant code snippets (BlenderRAG)

2️⃣ Auto-refine → critic spots issues, verification checks fixes

3️⃣ User-guided → iterative edits via user feedback

5/

Our technique performs expressive text-driven deformations that preserve the identity of the input shape.

1/

📝 Papers and schedule are now up!

Our Speakers:

Richard Newcombe (Meta)

Anjul Patney (NVIDIA)

Rana Hanocka (UChicago)

Laura Leal-Taixe (NVIDIA)

Margarita Grinvald (Meta)

📅 June 11, 8AM 📍Room 109.

cv4mr.github.io

Brian (hywkim-brian.github.io/site/) is going to start his PhD next year at Columbia with @silviasellan.bsky.social. Keep an eye out for their awesome work 📈📈📈
