Website: https://oussamazekri.fr

Blog: https://logb-research.github.io/

The results are pretty exciting! 😄

Adding to the discussion on least squares vs. cross-entropy, i.e., regression vs. classification formulations of supervised problems!

A thread on how to bridge these problems:

But what happens when we swap autoregressive generation for discrete diffusion, a rising architecture promising faster & more controllable LLMs?

Introducing SEPO!

📑 arxiv.org/pdf/2502.01384

🧵👇

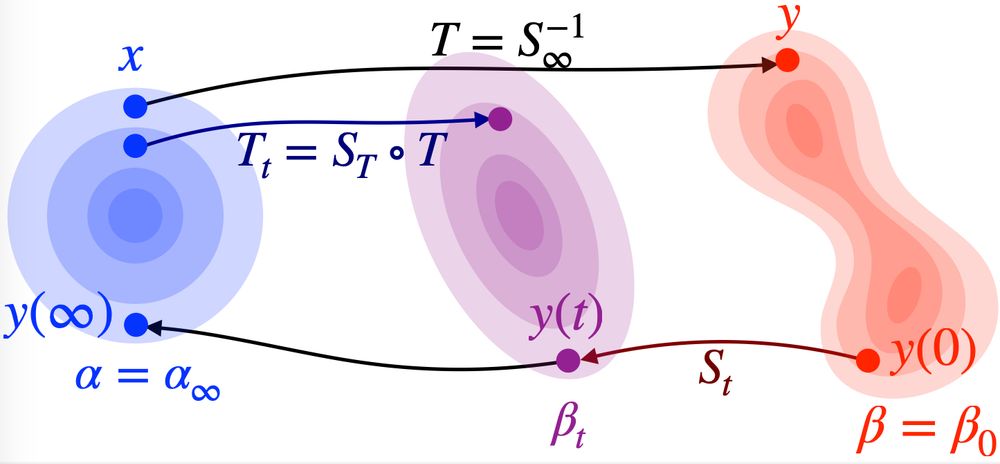

📝 Easing Optimization Paths: arxiv.org/pdf/2501.02362 (accepted at ICASSP 2025 🥳)

📝 Clustering Heads 🔥: https://arxiv.org/pdf/2410.24050

🖥️ github.com/facebookrese...

1/🧵

Make zero-shot reinforcement learning with LLMs go brrr 🚀

🖥️ github.com/abenechehab/...

📜 arxiv.org/pdf/2410.11711

Congrats to Abdelhakim (abenechehab.github.io) for leading it; it's always fun working with such nice and strong people 🤗

Recordings are also available: www.youtube.com/watch?v=5zFh...

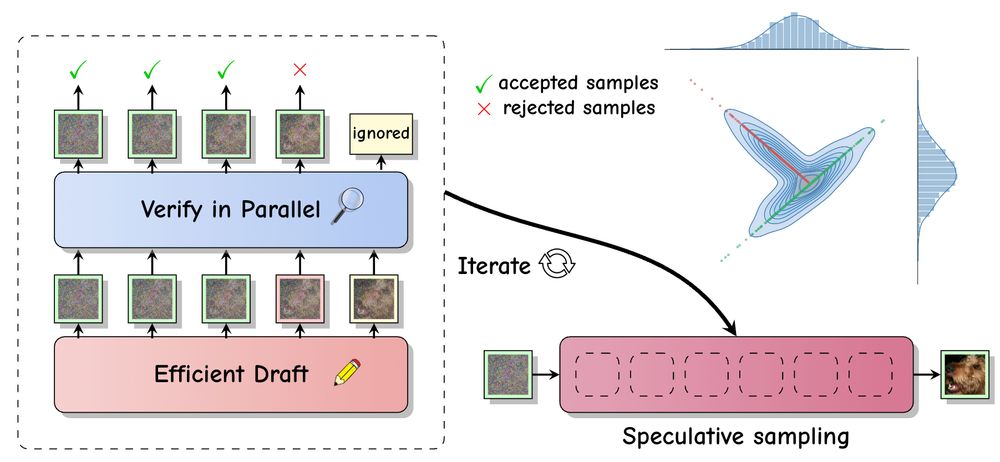

💡Our NeurIPS 2024 paper proposes 𝐌𝐚𝐍𝐨, a training-free and SOTA approach!

📑 arxiv.org/pdf/2405.18979

🖥️ https://github.com/Renchunzi-Xie/MaNo

1/🧵 (A surprise at the end!)
