arxiv.org/abs/2601.17036

We also announce star investors cap.

Watch the announcement video to learn about our plans.

If you are an LLM guru in research or product building, join us to make these plans a reality!

youtu.be/cA8-XJeoZAQ?...

LLMs as Span Annotators: A Comparative Study of LLMs and Humans has been accepted to multilingual-multicultural-evaluation.github.io 🎉

See the paper: arxiv.org/abs/2504.08697

Avoiding computation is a pretty obvious way to be more efficient.

The question is how to maintain quality. MoE with a router is an efficient approach, but it suffers from exposure bias and fixed thresholds.

arxiv.org/abs/2510.12773
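For anyone new to MoE routing, here is a minimal sketch of a generic top-k softmax router, the usual baseline, and not the method proposed in the linked paper. The function names and toy experts are hypothetical. Note that k is fixed: every token gets exactly k experts regardless of how hard it is, which is one example of the kind of hard-coded compute budget mentioned above.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their gate weights.

    router_logits: one score per expert for a single token (hypothetical input).
    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(router_logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(x, experts, router_logits, k=2):
    # Only the k selected experts are evaluated; the others are skipped,
    # which is where the compute savings come from.
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))
```

With four toy experts and logits `[0.1, 2.0, -1.0, 1.0]`, `top_k_route(..., 2)` selects experts 1 and 3, and only those two are ever called.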

But it is tricky to use.

Left padding confused a lot of early adopters.

However, it is clearly the future: reusing pre-trained LLMs for everything, including vector search, where BERT-like models used to rule.

1/N

arxiv.org/abs/2506.05176

huggingface.co/Qwen/Qwen3-E...
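Why does padding side matter? Decoder-only embedding models typically pool the hidden state of the last token. With right padding, position -1 of a shorter sequence is a padding token, so naive `states[-1]` pooling silently returns the wrong vector. Below is a minimal pure-Python sketch of padding-aware last-token pooling, assuming batch x seq_len x dim nested lists and contiguous masks. The helper name is hypothetical, and this is not the official Qwen3-Embedding code.

```python
def last_token_pool(hidden_states, attention_mask):
    """Return the hidden vector of the last real (non-padding) token per sequence.

    hidden_states: batch x seq_len x dim nested lists.
    attention_mask: batch x seq_len lists of 1 (real token) / 0 (padding),
    assumed contiguous (all padding on one side).
    """
    pooled = []
    for states, mask in zip(hidden_states, attention_mask):
        if mask[-1] == 1:
            # Left padding (or no padding): the final position is a real token.
            pooled.append(states[-1])
        else:
            # Right padding: the last real token sits at index (num_tokens - 1).
            pooled.append(states[sum(mask) - 1])
    return pooled
```

Left padding makes the first branch always apply, which is why it is the convenient choice for last-token pooling.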

I saw this in TTS models, where Guided Attention worked very well. The idea is simple: for speech conversion, guide the attention to focus on a narrow context. The model learns faster.

Looking forward to a deep dive on this one; I expect similarities.

arxiv.org/abs/2601.15165
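The Guided Attention I have in mind is the loss from DCTTS (Tachibana et al., 2017): penalize attention mass that lies far from the text/audio diagonal, using W[n][t] = 1 - exp(-(n/N - t/T)^2 / (2g^2)). Here is a minimal pure-Python sketch, assuming an attention matrix of shape n_text x n_frames. g = 0.2 is the value used in that paper and should be treated as tunable. Whether the linked paper does the same is exactly what the deep dive is for.

```python
import math

def guided_attention_weights(n_text, n_frames, g=0.2):
    """Penalty matrix W[n][t] = 1 - exp(-(n/N - t/T)^2 / (2 g^2)).

    Near the diagonal (n/N close to t/T) the penalty is ~0; far from it, ~1.
    """
    return [
        [1.0 - math.exp(-((n / n_text - t / n_frames) ** 2) / (2 * g * g))
         for t in range(n_frames)]
        for n in range(n_text)
    ]

def guided_attention_loss(attention, g=0.2):
    """Mean of attention * penalty; attention is an n_text x n_frames matrix."""
    n_text, n_frames = len(attention), len(attention[0])
    w = guided_attention_weights(n_text, n_frames, g)
    total = sum(attention[n][t] * w[n][t]
                for n in range(n_text) for t in range(n_frames))
    return total / (n_text * n_frames)
```

A perfectly diagonal alignment gets zero loss, while attention that jumps around the sequence is penalized. This pushes the model toward a narrow, monotonic context early in training.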

🚨NoCap test deadline 11/11/2025

🏆 $3K / $2K / $1K prizes

Our new team members shared the tips & tricks that helped them get through our test!

TRY IT NOW! 👉 github.com/BottleCapAI/...

youtu.be/d3wT84egi-g?...

We are now opening a challenge for those who want to help with that!

🏆 $3K / $2K / $1K prizes

⏰ Deadline: 11/11/2025

🖥️ 1 GPU. Your ideas.

👉 Join the NoCap Test:

github.com/BottleCapAI/...

This year:

👉5 language pairs: EN->{ES, RU, DE, ZH},

👉2 tracks - sentence-level and doc-level translation,

👉authentic data from 2 domains: finance and IT!

www2.statmt.org/wmt25/termin...

Don't miss the opportunity: we only do this once every two years 😏

It has 77k subscribers with just 7 videos.

Because the videos are just awesome!

I wish I could explain stuff that simply!

Does anybody know how long it takes to prepare a video like that?

www.youtube.com/@algorithmic...

👉️ Vilém @zouharvi.bsky.social

👉️ Patrícia @patuchen.bsky.social

👉️ Ivan @ivankartac.bsky.social

👉️ Kristýna Onderková

👉️ Ondřej P. @oplatek.bsky.social

👉️ Dimitra @dimitrag.bsky.social

👉️ Saad @saad.me.uk

👉️ Ondřej D. @tuetschek.bsky.social

👉️ Simone Balloccu

And how can span annotation help us evaluate texts?

Find out in our new paper: llm-span-annotators.github.io

Arxiv: arxiv.org/abs/2504.08697
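One simple way to compare two annotators' error spans (e.g. an LLM vs. a human) is character-level F1. The sketch below assumes spans are hypothetical (start, end) character offsets with an exclusive end and ignores error categories. It is an illustration only, not necessarily the metric used in the paper.

```python
def char_set(spans):
    """Expand (start, end) character spans (end exclusive) into a set of offsets."""
    return {i for start, end in spans for i in range(start, end)}

def span_f1(predicted, reference):
    """Character-level precision, recall and F1 between two span annotations."""
    pred, ref = char_set(predicted), char_set(reference)
    if not pred and not ref:
        # Both annotators marked no errors: count as perfect agreement.
        return 1.0, 1.0, 1.0
    overlap = len(pred & ref)
    precision = overlap / len(pred) if pred else 0.0
    recall = overlap / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

For example, an LLM span (0, 10) against a human span (5, 15) overlaps on 5 characters, giving precision, recall and F1 of 0.5 each.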

👉📺 @hajicjan.bsky.social talked about the new OpenEuroLLM project on Czech TV's Studio 6 www.ceskatelevize.cz/porady/10969...

👉📻 @tuetschek.bsky.social discussed #LLMs on Czech Radio radiozurnal.rozhlas.cz/proc-umela-i...

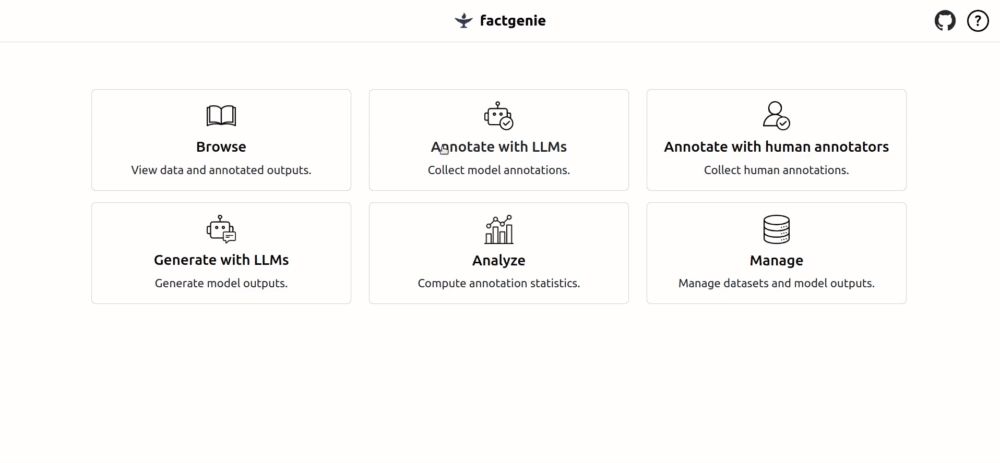

Let me introduce github.com/ufal/factgenie

A Span Annotation tool from @ufal-cuni.bsky.social

💡 Annotate errors in the outputs, even without any references

💪 Use many ready-to-use datasets for data-to-text. MT & NLP friends are coming...

🚀 You get your annotations in minutes.

When you can *see* the errors in the output: 🤯

Check out factgenie, our new error span annotation tool:

github.com/ufal/factgenie

(Just matured into v1.0.1! 🎂)

#nlp #nlg