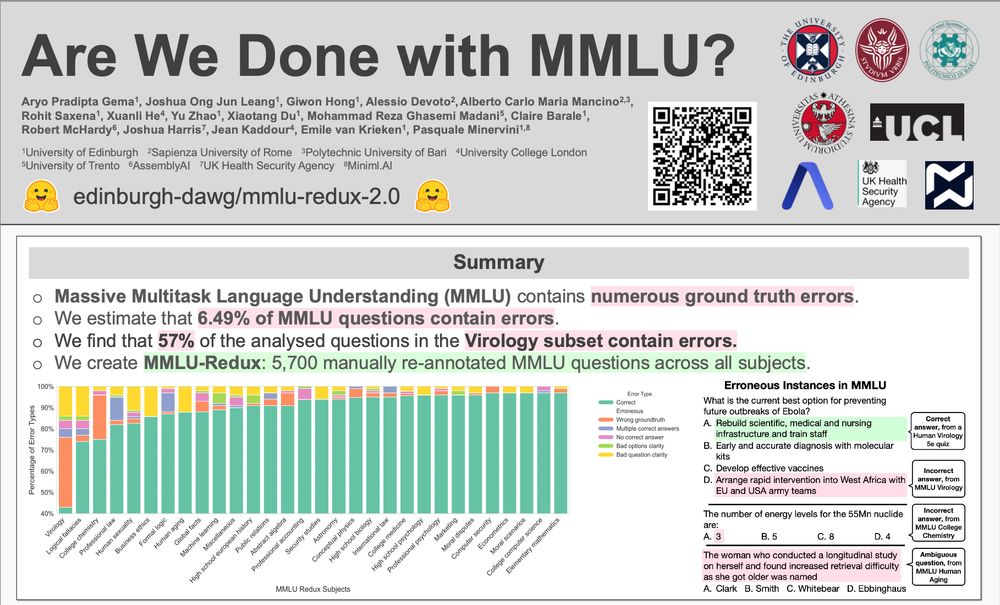

Wish I could be there for our "Are We Done with MMLU?" poster today (9:00-10:30am in Hall 3, Poster Session 7), but visa drama said nope 😅

If anyone's swinging by, give our research some love! Hit me up if you check it out! 👋

📅 Fri 11 Apr

⏰ 10am – 4pm | Free / Drop-In

📍@edfuturesinstitute.bsky.social

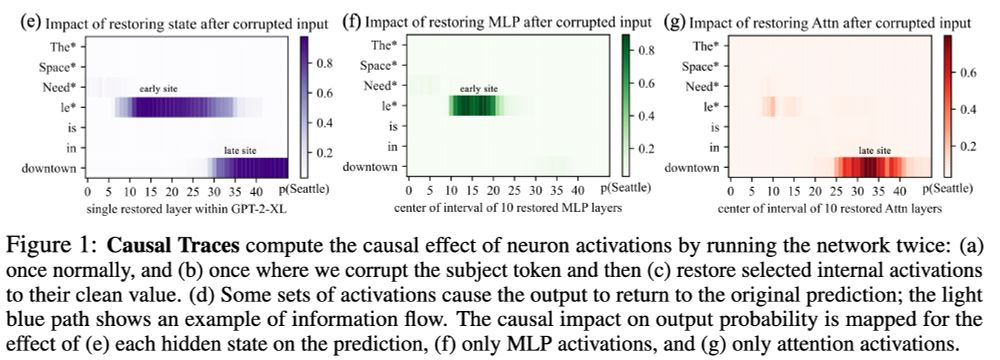

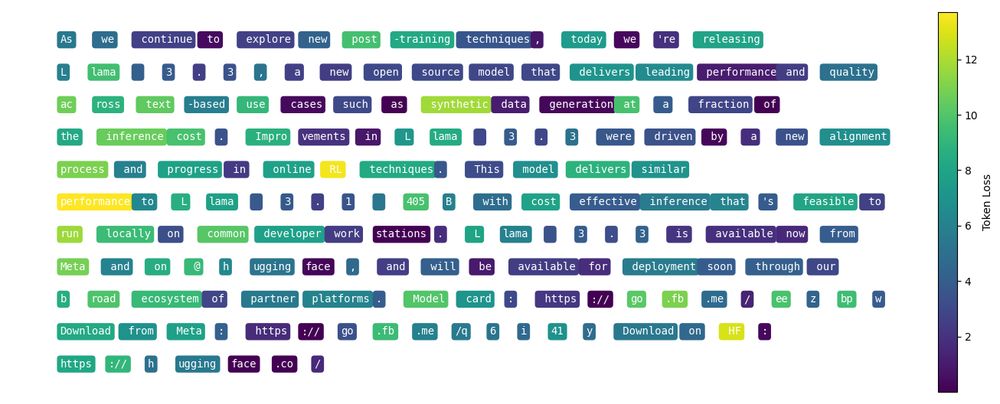

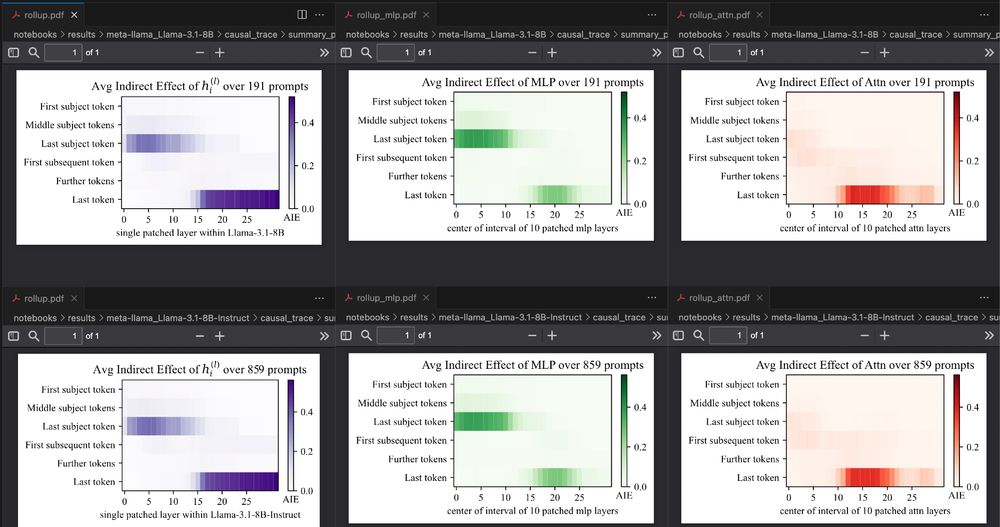

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️
