nbalepur.github.io

Our first paper designs a preference training method to boost LLM personalization 🎨

While the second outlines our position on why MCQA evals are terrible and how to make them better 🙏

Grateful for amazing collaborators!

We propose information-guided probes, a method to uncover memorization evidence in *completely black-box* models,

without requiring access to

🙅‍♀️ Model weights

🙅‍♀️ Training data

🙅‍♀️ Token probabilities 🧵 (1/5)

And loved visiting London+Edinburgh this week, hope to be back soon! 🙏

💰 $1 per question

🏆 Top-3 fastest + most accurate win $50

⏳ Questions take ~3 min => $20/hr+

Click here to sign up (please join, reposts appreciated 🙏): preferences.umiacs.umd.edu

Multiple choice evals for LLMs are simple and popular, but we know they are awful 😬

We complain they're full of errors, saturated, and test nothing meaningful, so why do we still use them? 🫠

Here's why MCQA evals are broken, and how to fix them 🧵

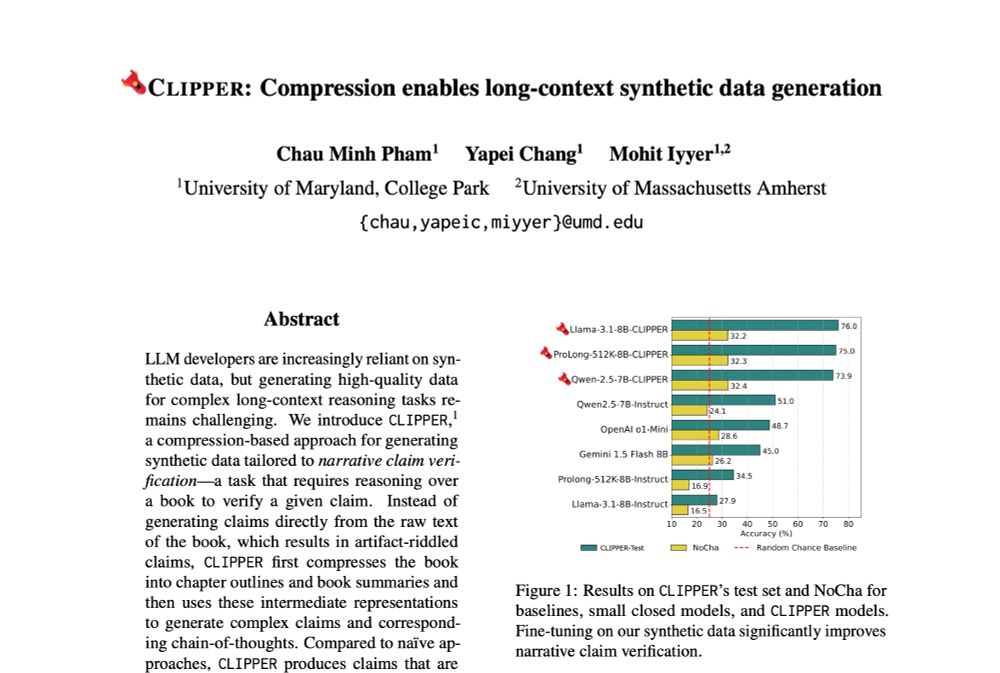

We present CLIPPER ✂️, a compression-based pipeline that produces grounded instructions for ~$0.5 each, 34x cheaper than human annotations.

📄✍️ MoDS: Multi-Doc Summarization for Debatable Queries (Adobe intern work, coming soon!)

🤔❓ Reverse QA: LLMs struggle with the simple task of giving questions for answers

Grateful for all my collaborators 😁

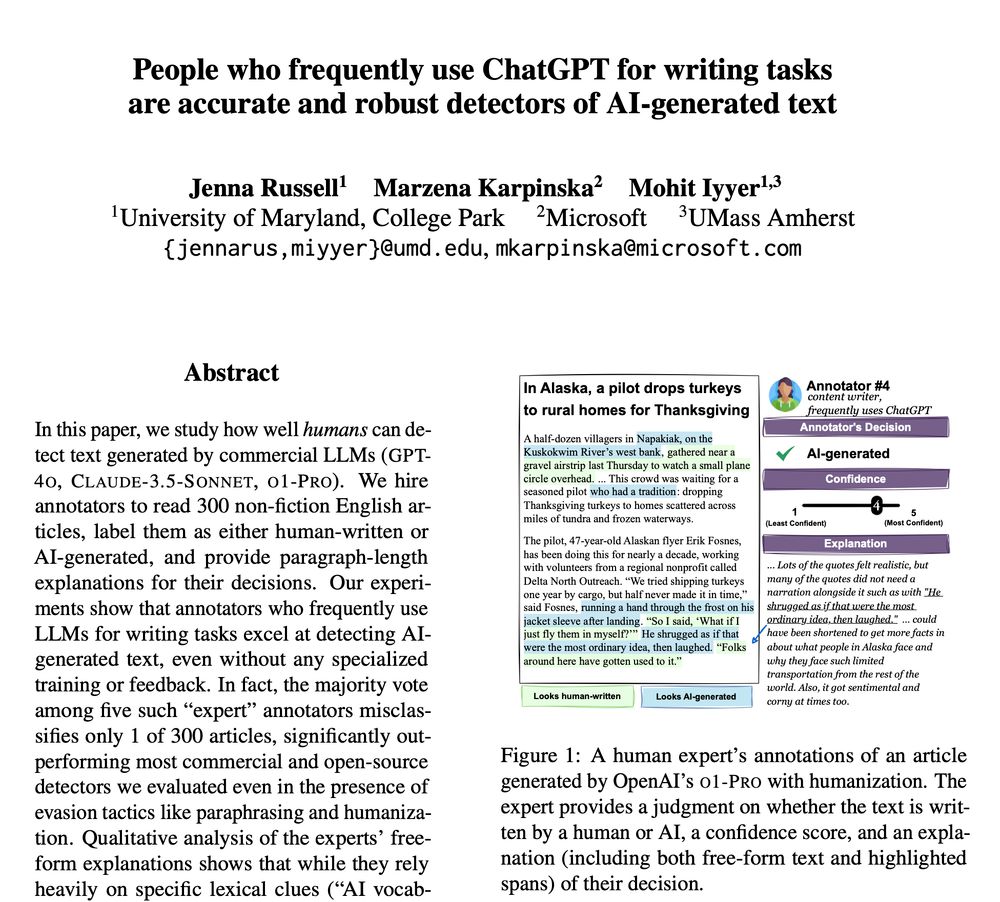

Turns out that while the general population is unreliable, those who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy 🎯

Best of luck to anyone submitting tmrw :)

Simply the best fully open models yet.

Really proud of the work & the amazing team at

@ai2.bsky.social
