We designed this monograph to be self-contained, covering: Grid, Random & Quasi-random search, Bayesian & Multi-fidelity optimization, Gradient-based methods, Meta-learning.

arxiv.org/abs/2410.22854

There's growing excitement around ML potentials trained on large datasets.

But do they deliver in simulations of biomolecular systems?

It’s not so clear. 🧵

1/

I’m recruiting for my new lab at NUS School of Computing, focusing on generative modeling, reasoning, and tractable inference.

💡 Interested? Learn more here: liuanji.github.io

🗓️ PhD application deadline: June 15, 2025

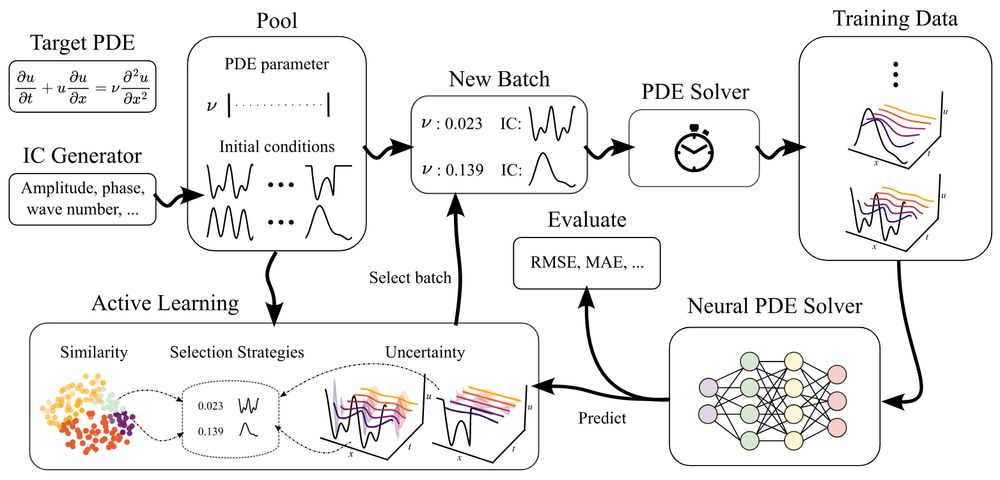

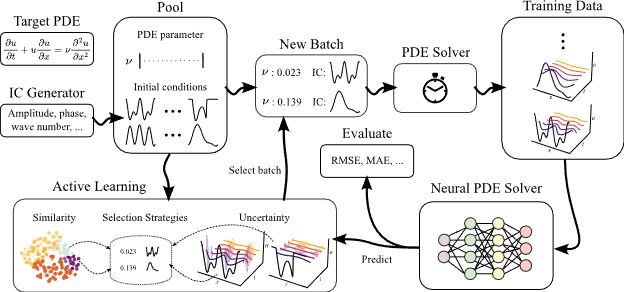

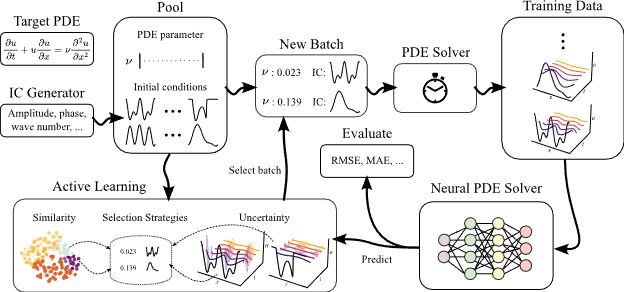

Can active learning help to generate better datasets for neural PDE solvers?

We introduce a new benchmark to find out!

Featuring 6 PDEs, 6 AL methods, 3 architectures, and many ablations (transferability, speed, etc.)!

Full text: openreview.net/forum?id=OCM...

blackhc.github.io/balitu/

and I'll try to add proper course notes over time 🤗

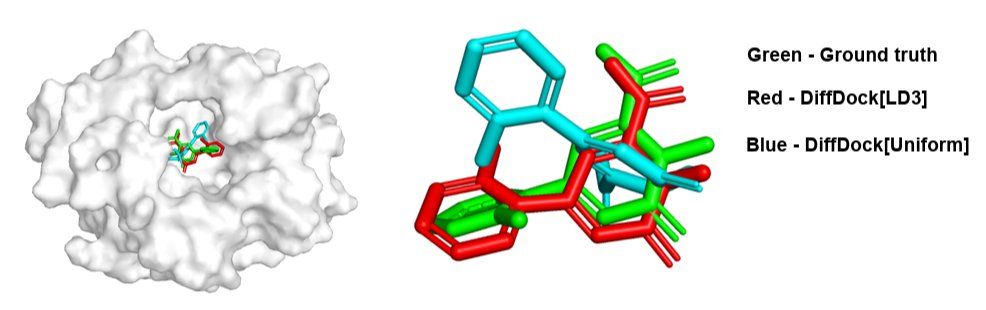

LD3 can be applied to diffusion models in other domains, such as molecular docking.

We propose LD3, a lightweight framework that learns the optimal time discretization for sampling from pre-trained Diffusion Probabilistic Models (DPMs).

Learn how to optimize your time discretization strategy in just ~10 minutes! ⏳✨

Check out how it's done in our Oral paper at ICLR 2025 👇

[1/n]

We're excited to announce ComBayNS workshop: Combining Bayesian & Neural Approaches for Structured Data 🌐

Submit your paper and join us in Rome for #IJCNN2025! 🇮🇹

📅 Papers Due: March 20th, 2025 📜

Webpage: combayns2025.github.io

Call for Papers is live!

2025.nesyconf.org/nesy-generat...

See you in Santa Cruz in Sep 2025!

@nesyconf.org

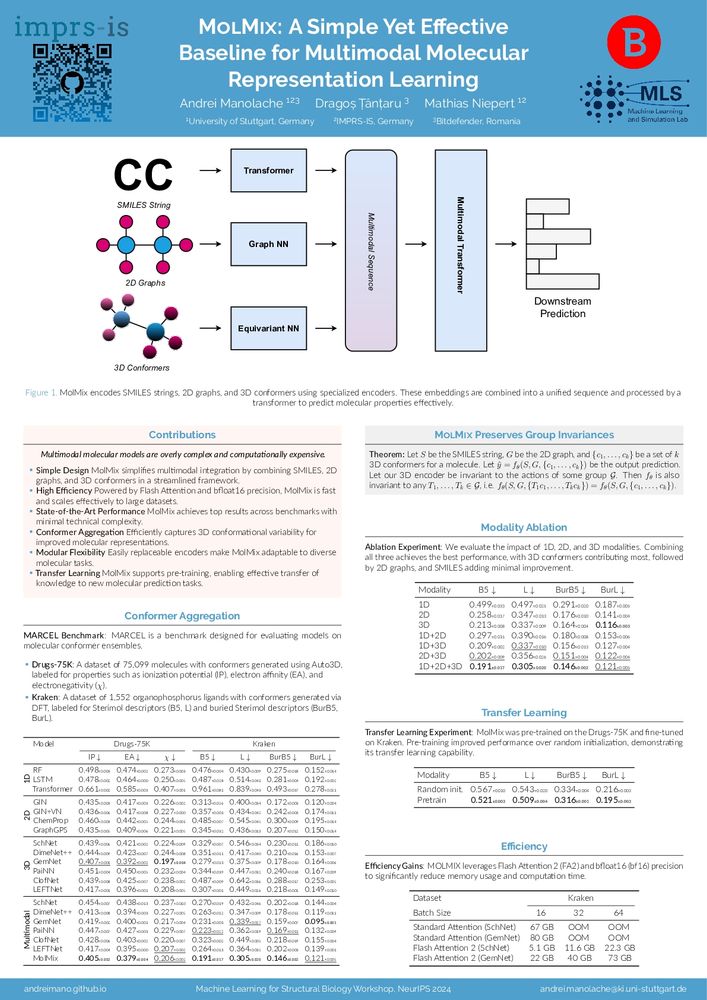

Interested in molecular representation learning? Let’s chat 👋!

In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters.

Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

Higher-Rank Irreducible Cartesian Tensors for Equivariant Message Passing

🗓️ When: Wed, Dec 11, 11 a.m. – 2 p.m. PST

📍 Where: East Exhibit Hall A-C, Poster #4107

#MachineLearning #InteratomicPotentials #Equivariance #GraphNeuralNetworks

Our #NeurIPS2024 paper explores higher-rank irreducible Cartesian tensors to design equivariant #MLIPs.

Paper: arxiv.org/abs/2405.14253

Code: github.com/nec-research...

iopscience.iop.org/article/10.1...

Please write below if you want to be added (and sorry if I missed you at first).

go.bsky.app/DhVNyz5

We are looking for a Tenure Track Prof for the 🇦🇹 #FWF Cluster of Excellence Bilateral AI (think #NeSy ++): www.bilateral-ai.net. A nice starter package with fully funded PhD positions is included.

jobs.tugraz.at/en/jobs/226f...

arxiv.org/abs/1909.12790

arxiv.org/abs/1909.12790