#MLSky

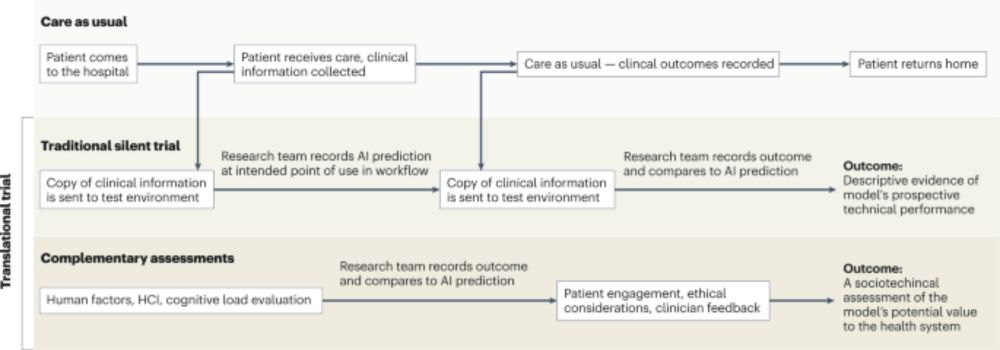

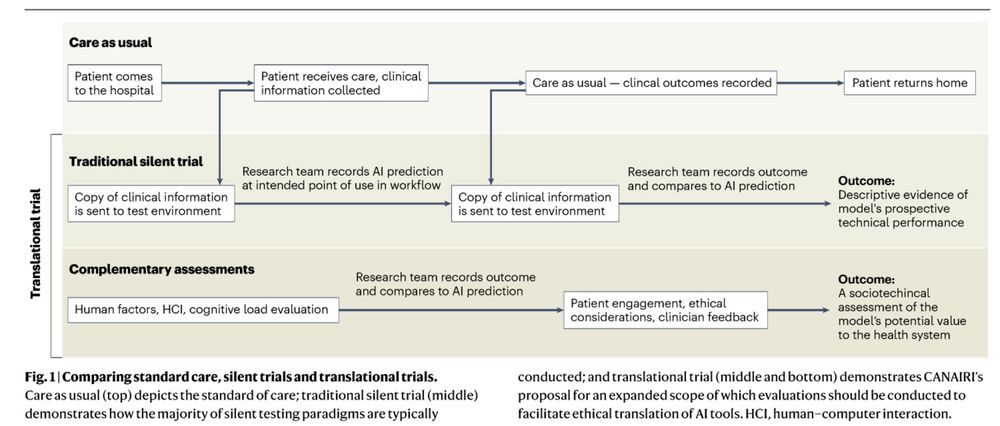

1. Most silent trials do not report model metrics outside of AUC (rarely reporting bias testing, failure modes, and data drift)

2. The evaluator of the silent trial is often unnamed or underspecified; human factors and stakeholder engagement are rare

@lanatikhomirov.bsky.social did an amazing job leading this work all the way through ❤️

osf.io/preprints/os...

Beautiful.

We will work together to define how to take a #sociotechnical approach to responsible #AI integration in healthcare. Spearheaded by my awesome co-lead @mdmccradden.bsky.social 🦜

nature.com/articles/s41...

Me: okay but actually you built it to rate how hot the women at your school were

See the conference website for themes and submission details

gidsov.com.au/abstracts

#IDSov #IDGov

@unityofvirtue.bsky.social Judy Gichoya Mark Sendak Lauren Erdman @istedman.bsky.social Lauren Oakden-Rayner Ismail Akrout James Anderson

It will be different for each tool, depending on many things, including how much work has been done before on similar tools, how different the local context is, etc.

How do we decide? That’s what we want to figure out, and we need your help!

Perhaps most important to AI translation is the local silent trial. Ethically, and from an evidentiary perspective, this is essential!

url.au.m.mimecastprotect.com/s/pQSsClx14m...

aiguide.substack.com/p/did-openai...
