Postdoc @ ITU of Copenhagen.

https://scholar.google.com/citations?user=QVN3iv8AAAAJ&hl=en

which will host a panel discussion with @blaiseaguera.bsky.social, @risi.bsky.social, @emilydolson.bsky.social & Sidney Pontes-Filho

Check out the full program: sites.google.com/view/soni-al...

See you in Kyoto ⛩️

every.to/thesis/knowl...

sakana.ai/dgm

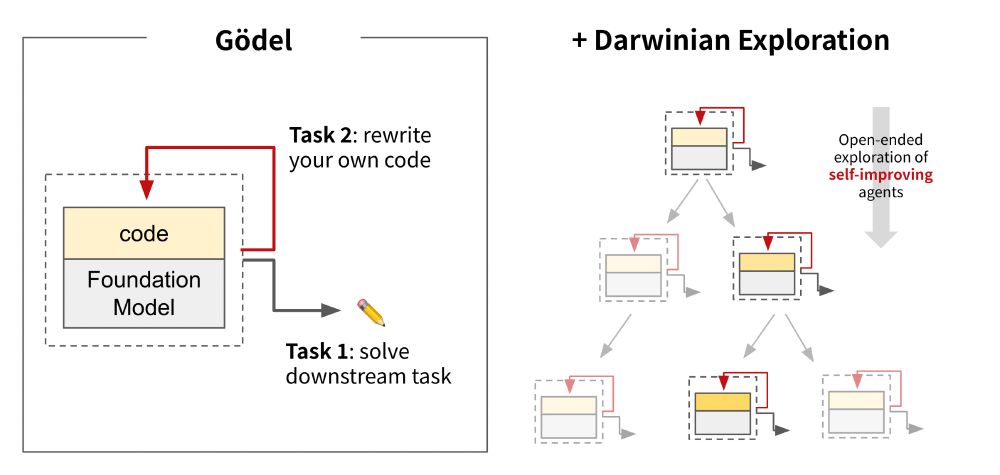

The Darwin Gödel Machine is a self-improving agent that can modify its own code. Inspired by evolution, we maintain an expanding lineage of agent variants, allowing for open-ended exploration of the vast design space of such self-improving agents.
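To make the idea of an expanding lineage concrete, here is a toy sketch of archive-based open-ended search. It is not the Darwin Gödel Machine itself: each "agent" is just a number, and `evaluate`, `mutate`, and `grow_lineage` are hypothetical placeholders for "score an agent on a benchmark" and "let the agent rewrite its own code". The exp-weighted parent choice is also an assumption made for illustration.

```python
import math
import random


def evaluate(agent: float) -> float:
    # Hypothetical benchmark score: higher is better, best at agent == 3.0.
    return -abs(agent - 3.0)


def mutate(agent: float, rng: random.Random) -> float:
    # Hypothetical stand-in for "the agent modifies its own code".
    return agent + rng.gauss(0.0, 0.5)


def grow_lineage(generations: int, children_per_gen: int, seed: int = 0):
    """Grow an archive of (agent, score, parent_index) entries; nothing is ever removed."""
    rng = random.Random(seed)
    archive = [(0.0, evaluate(0.0), None)]  # the initial agent has no parent
    for _ in range(generations):
        for _ in range(children_per_gen):
            # Any archived variant can be a parent, not only the current best;
            # better-scoring ones are sampled more often (assumed weighting).
            weights = [math.exp(score) for _, score, _ in archive]
            parent_idx = rng.choices(range(len(archive)), weights=weights, k=1)[0]
            child = mutate(archive[parent_idx][0], rng)
            archive.append((child, evaluate(child), parent_idx))
    return archive


if __name__ == "__main__":
    archive = grow_lineage(generations=20, children_per_gen=5)
    best = max(archive, key=lambda entry: entry[1])
    print(f"{len(archive)} variants, best score {best[1]:.3f}")
```

Because the archive is never pruned, a weak early variant can still be picked as a parent later and act as a stepping stone, which is what makes the search open-ended rather than pure hill climbing.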

Blog → sakana.ai/ctm

Modern AI is powerful, but it's still distinct from human-like flexible intelligence. We believe neural timing is key. Our Continuous Thought Machine is built from the ground up to use neural dynamics as a powerful representation for intelligence.

So there's still some time to put your interesting thoughts on Evolving Self-Organization together!

Also: We are very fortunate to have the great Risto Miikkulainen as the keynote speaker at the workshop!

Can't wait to see you all there! 🤩🙌

#Evolution #Gecco #ALife

Relevant for ALifers #ALife and anyone interested in #evolution, #self-organisation, and #ComplexSystems.

Submission deadline: March 26, 2025

More information: evolving-self-organisation-workshop.github.io

Bio-Inspired Plastic Neural Nets that continually adapt their own synaptic strengths can make for extremely robust locomotion policies!

Trained exclusively in simulation, the plastic networks transfer easily to the real world, even in various additional out-of-distribution (OOD) situations.
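One common way to let a network continually adapt its own synaptic strengths is a generalized Hebbian rule, where each weight changes according to its pre- and post-synaptic activity: Δw = η(A·pre·post + B·pre + C·post + D). The sketch below assumes this "ABCD" form; it is not necessarily the rule used in the locomotion work, and `HebbianLayer` is a hypothetical name. In evolved-plasticity setups the per-synapse coefficients would typically be optimized (e.g. by evolution) while the weights start random and change at every step; here the coefficients are random placeholders.

```python
import math
import random


class HebbianLayer:
    """Fully connected tanh layer whose weights are updated after every forward pass (sketch)."""

    def __init__(self, n_in: int, n_out: int, seed: int = 0, eta: float = 0.01):
        rng = random.Random(seed)
        # Initial weights are random; they are not what gets optimized.
        self.w = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        # Per-synapse (A, B, C, D) coefficients. In an evolved-plasticity setup
        # these would be found by evolution; here they are random placeholders.
        self.coef = [
            [tuple(rng.uniform(-1.0, 1.0) for _ in range(4)) for _ in range(n_in)]
            for _ in range(n_out)
        ]
        self.eta = eta

    def step(self, x: list[float]) -> list[float]:
        # Forward pass with the current weights.
        y = [math.tanh(sum(w_ji * x_i for w_ji, x_i in zip(row, x))) for row in self.w]
        # Hebbian ABCD update: dw_ji = eta * (A*pre*post + B*pre + C*post + D).
        for j, post in enumerate(y):
            for i, pre in enumerate(x):
                a, b, c, d = self.coef[j][i]
                self.w[j][i] += self.eta * (a * pre * post + b * pre + c * post + d)
        return y


if __name__ == "__main__":
    layer = HebbianLayer(n_in=3, n_out=2, seed=1)
    for t in range(5):
        # The same input gives different outputs over time as the weights adapt.
        print(t, layer.step([0.5, -0.2, 1.0]))
```

The layer acts with its current weights and only then applies the update, so the network keeps changing while it is being used. Practical implementations usually also normalize or clip the weights to keep them bounded, which this sketch omits.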

Also, does anyone know if #GECCO has an official 🦋 account? I cannot seem to find it...

We are excited to present our HIVE approach, a framework and benchmark for LLM-driven multi-agent control.

#LLM #ALife #ArtificialIntelligence

arxiv.org/abs/2501.06252

Check out the new paper from Sakana AI (@sakanaai.bsky.social)! We show the power of an LLM that can self-adapt its weights to its environment!

go.bsky.app/E8WJwXS

We are very happy to share this awesome collection of papers on the topic!

Each paper comes with a brief summary and a link to its code.

👉 github.com/DTU-PAS/awes...

then AGI ~= huge pile of sand

sakana.ai/namm/

Introducing Neural Attention Memory Models (NAMM), a new kind of neural memory system for Transformers that not only boosts their performance and efficiency but is also transferable to other foundation models without any additional training!

If you'd like to be added, or want to suggest someone else, message me or reply to this post.

I created a starter pack! Please comment on this if you work in this field and are not on the list 🙂

go.bsky.app/CscFTAr

“A type of system that deserves much more thorough investigation is the large system that is built of parts that have many states of equilibrium”

csis.pace.edu/~marchese/CS...

#ComplexSystems #Cybernetics

go.bsky.app/LgoJN2N
