jaydeepborkar.github.io

paper: arxiv.org/pdf/2502.15680

Our paper (co w/ Vinith Suriyakumar) on syntax-domain spurious correlations will appear at #NeurIPS2025 as a ✨spotlight!

+ @marzyehghassemi.bsky.social, @byron.bsky.social, Levent Sagun

1. Examples of statements of purpose (SOPs) for computer science PhD programs: cs-sop.org [1/4]

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social) we provide a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

"Talkin' 'Bout AI Generation: Copyright and the Generative-AI Supply Chain," is out in the 72nd volume of the Journal of the Copyright Society

copyrightsociety.org/journal-entr...

written with @katherinelee.bsky.social & @jtlg.bsky.social (2023)

If you would like to chat memorization/privacy/safety, please reach out :)

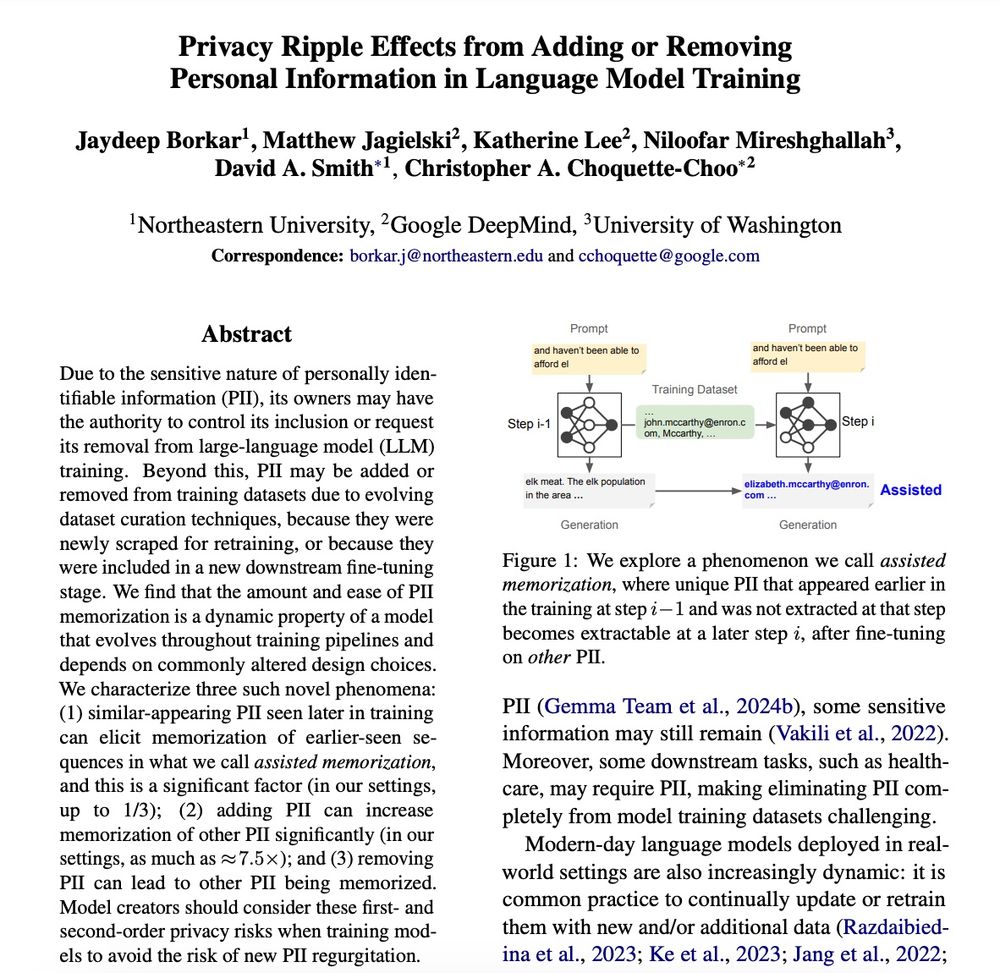

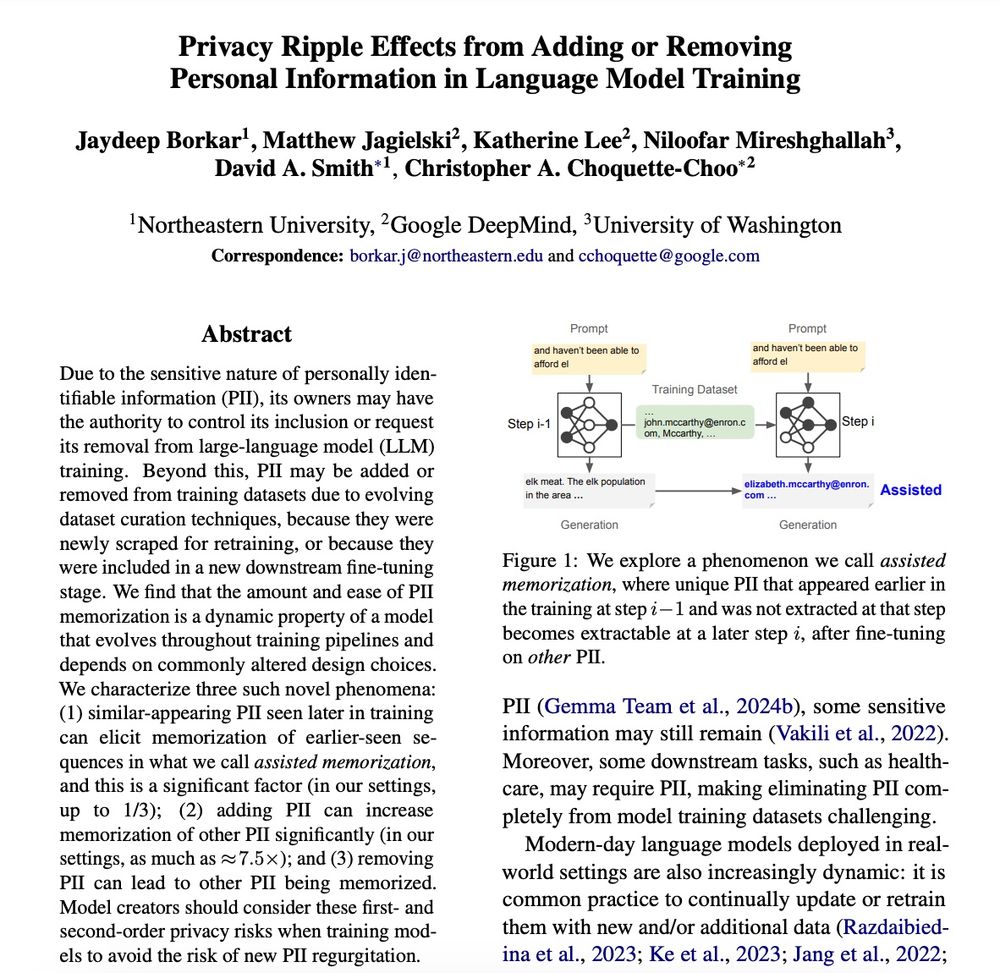

If you’re in NYC and would like to hang out, please message me :)

CHI Program Link: programs.sigchi.org/chi/2025/pro...

Looking forward to connecting with you all!

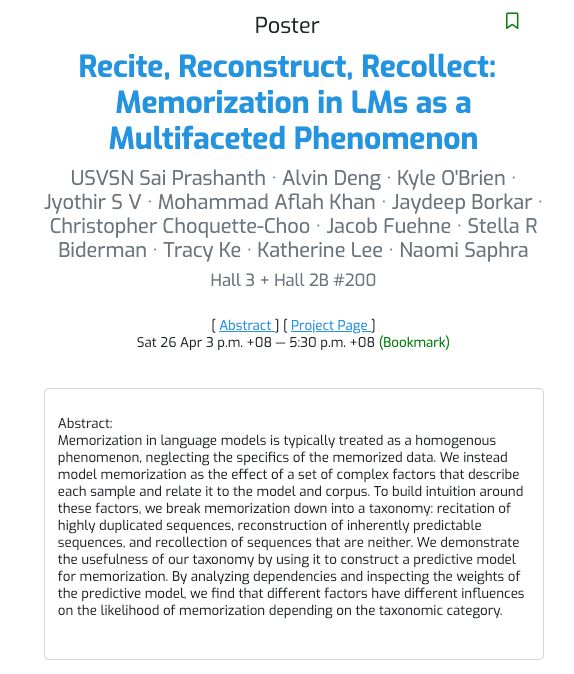

'Recite, Reconstruct, Recollect: Memorization in LMs as a Multifaceted Phenomenon'

USVSN Sai Prashanth · @nsaphra.bsky.social et al.

Submission: openreview.net/forum?id=3E8...

We propose a taxonomy for different types of memorization in LMs. Paper: openreview.net/pdf?id=3E8YN...

@bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

We propose information-guided probes, a method to uncover memorization evidence in *completely black-box* models,

without requiring access to

🙅‍♀️ Model weights

🙅‍♀️ Training data

🙅‍♀️ Token probabilities 🧵 (1/5)

I put together a Google Form that should take no longer than 10 minutes to complete: forms.gle/oWxsCScW3dJU...

If you can help, I'd appreciate your input! 🙏

We wanted to let you know that we chose not to submit a workshop proposal this year (we need a break!!). We’ll be at ICML though and look forward to catching up there!

You can watch our prior videos!

More info (including about registration) on the website: computersciencelaw.org/2025
