indiiigo.github.io/

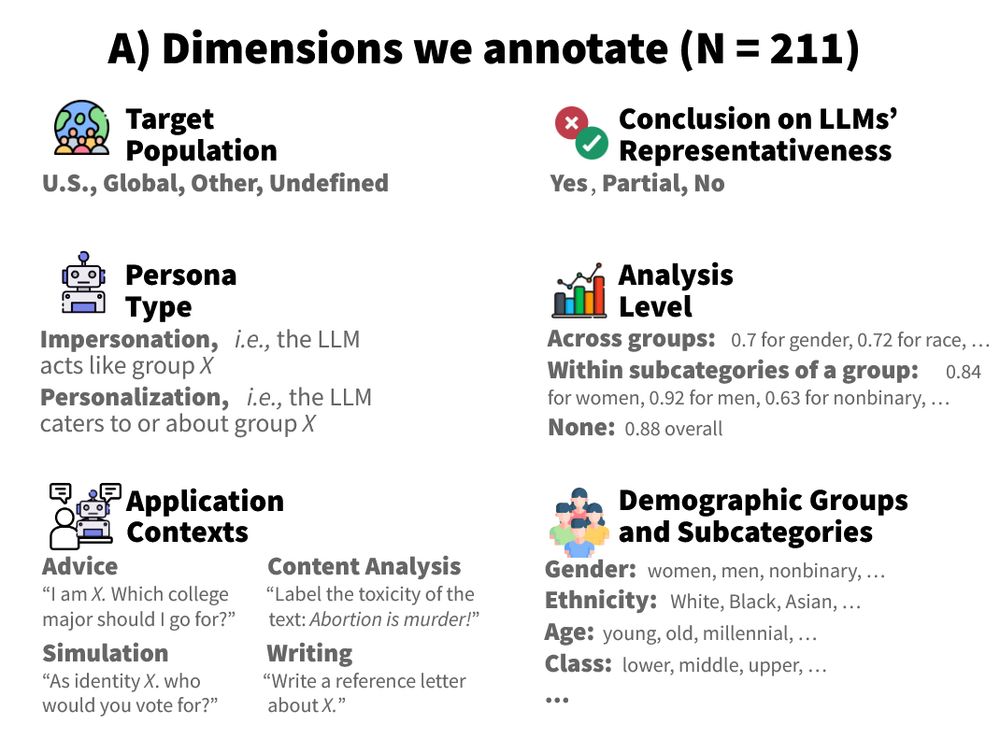

We review the current literature in our #ACL2025 Findings paper and investigate what researchers conclude about the demographic representativeness of LLMs:

osf.io/preprints/so...

1/

7 days of hands-on advanced methods + networking for PhDs, postdocs & early-career researchers.

Free of charge (limited travel support).

Deadline 1 March 2026: summerschoolwpm.org

#methodsky #polisky

We invite Ph.D. students and early career postdocs to come to GESIS. Visiting researchers in the Junior Research Program take part in our research, publish with GESIS staff, and develop research ideas and joint projects.

@taniseceron.bsky.social, Sebastian Padó and I test multilingual LLMs before and after English-only fine-tuning and find strong cross-lingual political opinion transfer across five Western languages.

www.arxiv.org/abs/2508.05553

🚨 We show they are not: different cues yield different model behavior for the same group and different conclusions on LLM bias. 🧵👇

1/

www.science.org/doi/10.1126/...

Big thank you to my coauthors @small-schulz.bsky.social and @lorenzspreen.bsky.social, and to all participants who discussed 20 political issues over 4 weeks in 6 subreddits and 3 experimental conditions, and let us observe.

puwebp.princeton.edu/AcadHire/app...

Please apply before Sunday, the 13th of December!

In a platform-independent field experiment, we show that reranking content expressing antidemocratic attitudes and partisan animosity in social media feeds alters affective polarization.

🧵

How are social bias and CSS interconnected? 🤔

@aytalina.bsky.social, @janabernhard.bsky.social, @valeriehase.bsky.social, and I argue that social bias shapes CSS as a field and as a methodology. Progress in CSS depends on engaging with both dimensions! osf.io/preprints/so...

and based in a low- or middle-income country?

💡 Submit a project idea, get matched with a mentor, present virtually at ICWSM'26, and prepare a submission for Sept 2026!

📢 Call icwsm.org/2026/submit....

🚀 Apply by Jan 15 forms.gle/A9GkJboP7qi3...

More info: piccardi.me

Answers to male agent include more topics, but no evidence of social desirability.

👉 New #OpenAccess paper with @jkhoehne.bsky.social #cneuert in #IJMR.

🌐 doi.org/10.1177/1470...

Please note the updated application link (due to a recent university webpage update):

👉 PhD Candidate in Emotionally and Socially Aware Natural Language Processing

careers.universiteitleiden.nl/job/PhD-Cand...

Apply to Wisconsin CS to research

- Societal impact of AI

- NLP ←→ CSS and cultural analytics

- Computational sociolinguistics

- Human-AI interaction

- Culturally competent and inclusive NLP

with me!

lucy3.github.io/prospective-...

We used causal inference on 9.9M tweets, quantifying effects in the wild while blocking backdoor paths.

Does misinfo get higher engagement? Are the discussions that follow more emotional? 🧵

Mohammad Taher Pilehvar on multilingual misinformation and online harms in #NLP.

(the position is open to UK and international students.)

Details and contact information 👇:

www.findaphd.com/phds/project...

But... can they? We don’t actually know.

In our new study, we develop a Computational Turing Test.

And our findings are striking:

LLMs may be far less human-like than we think.🧵

New recording and workshop materials published!

➡️ Large Language Models for Social Research: Potentials and Challenges

👤 @indiiigo.bsky.social (University of Mannheim)

📺 youtu.be/p5wPJHK-74M

🗒️ github.com/SocialScienc...

🕑 Thu. Nov 6, 12:30 - 13:30

📍 Findings Session 2, Hall C3

🤔 Ever wondered how the way you write a persona prompt affects how well an LLM simulates people?

In our #EMNLP2025 paper, we find that using interview-style persona prompts makes LLM social simulations less biased and more aligned with human opinions.

🧵1/7
