Researchers find preferences for verbosity, listicles, vagueness, and jargon even higher among LLM-based reward models (synthetic data) than among us humans.

#AI #AIalignment

arxiv.org/abs/2506.05339

www.youtube.com/watch?v=y1Wn...

#AI #LLM

www.arxiv.org/abs/2505.11423

arxiv.org/abs/2505.10185

But I wonder if this changes when #agents are personal assistants, & are more personal & more aware.

#UX #AI #Design

arxiv.org/abs/2402.01934

"backtracing ... retrieve the cause of the query from a corpus. ... targets the information need of content creators who wish to improve their content in light of questions from information seekers."

arxiv.org/abs/2403.03956

arxiv.org/abs/2402.01618

arxiv.org/abs/2505.06120

www.arxiv.org/abs/2505.02130

LLMs were fed "blended" prompts, impossible conceptual combinations, meant to elicit hallucinations. Models did not trip, but instead tried to reason their way through their responses.

arxiv.org/abs/2505.00557

Whilst this episode has its tech explanations, the social interaction aspects are unsolved & will rise as models are personalized, as mirroring is an effective design feature, & we're not good at distinguishing affirmation from agreement.

Borderline self-indulgent to get to write about the messiness of RLHF to this extent, but simultaneously essential. A must-read. GPT-4o-simp is a sign of things to come.

www.interconnects.ai/p/sycophancy...

www.arxiv.org/abs/2504.20708

Anthropic's discovery of an influence bot network shows how conversation can be weaponized. This isn't Cambridge Analytica-style targeting of user preferences; it works through language and conversation instead. #AIethics

www.anthropic.com/news/detecti...

"ChatGPT has distinct preferences for simpler syntactic constructions...and relies heavily on anaphoric references, whereas students demonstrate more balanced syntactic distribution..."

www.sciencedirect.com/science/arti...

Period models - Black Mirror Hotel Reverie episode?

If an LLM reads Deleuze's Logic of Sense, does Alice still become both smaller & larger at the same time? (No, because AI has neither temporality nor a "sense" for it) #philosophy #LLMs

covidianaesthetics.substack.com/p/from-apoph...

#LLM #AI

www.alphaxiv.org/abs/2504.13171

I'm reminded of Borges's Chinese encyclopedia of animals.

www.darioamodei.com/post/the-urg...

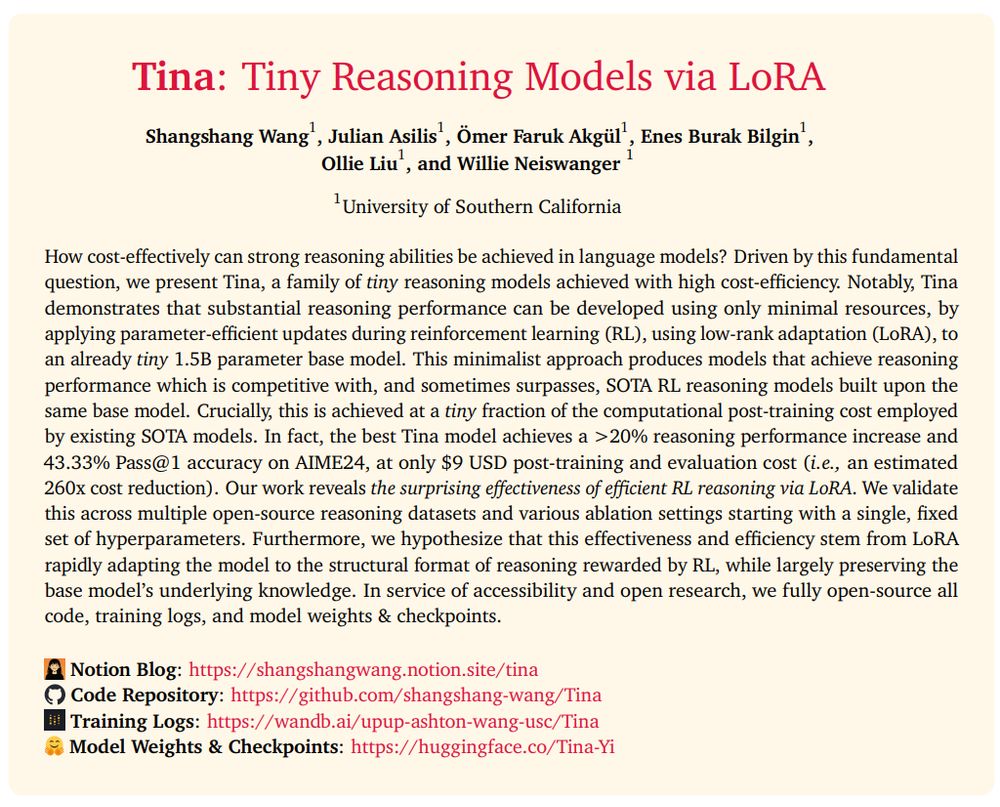

Tina model achieves a >20% reasoning performance increase and 43.33% Pass@1 accuracy on AIME24, at only $9 USD post-training and evaluation cost (i.e., an estimated 260x cost reduction). The surprising effectiveness of efficient RL reasoning via LoRA.

That's what ChatGPT gave me after a lengthy conversation about semiotics, McLuhan, and #LLMs

#AI #LLMs #CoT

arxiv.org/abs/2504.09762

Unlike search engines, they send no traffic back, so no new users and no new donors. Just rising costs and a shrinking audience.

A raw deal for a cornerstone of the free web.
