Carbohydrate enthusiast.

fabiotollon.wixsite.com/fabiotollon

Co-authored with the brilliant @sj-bennett.bsky.social and @ftollon.bsky.social, as part of @ludovico-rella.bsky.social and @fabio-iapaolo.bsky.social SI on ‘Where’s the Intelligence in AI? Mattering, Placing, and De-individuating AI’ link.springer.com/article/10.1...

🧵

Read: montrealethics.ai/saier-vol-7-...

We suggest that some AI chatbots might be answerable, thus rendering them capable of asserting. Strange stuff.

#philsky #AIethics

www.pt-ai.org/2025/program...

🗓️ 15 October 18.00 - 19.30 BST

📍 EFI & online

🎟️ edin.ac/3Kl8em6

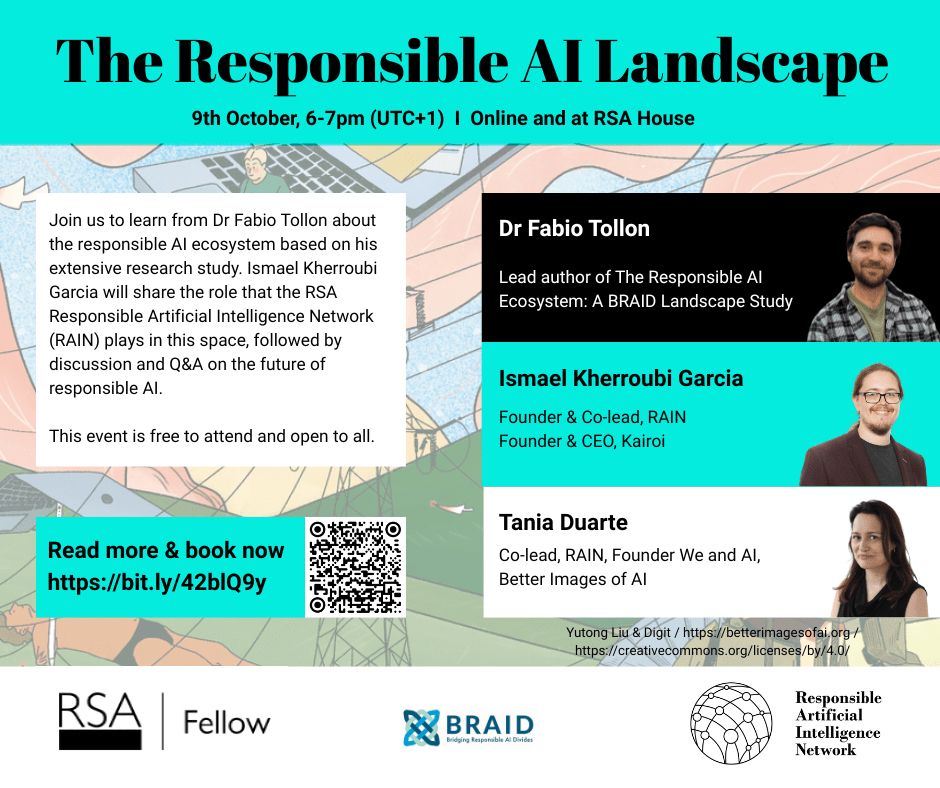

www.eventbrite.co.uk/e/the-respon...

Heartfelt thanks to our speakers, our attendees, and our community: your being there made it what it was.

#philsky #philAI

If you can't be bothered to read the whole thing, we even summarized it for you: braiduk.org/the-responsi...

@braiduk.bsky.social @technomoralfutures.bsky.social

@ftollon.bsky.social & @shannonvallor.bsky.social introduce us to the report in the latest BRAID blog braiduk.org/the-responsi...

@ftollon.bsky.social visits Northern Illinois University to talk about his research in #AI and Responsibility.

Lecture by @ftollon.bsky.social of @edinburghuni.bsky.social this Friday (21 March) at 1pm. Sponsored by the Dept. of Communication at Northern Illinois University. Free and open to all.

Long answer: https://www.taylorfrancis.com/books/oa-mono/10.4324/9781003498032/unfair-emotions-jonas-blatter

#philsky #philosophy

Read our blog post about the experience and key takeaways! ▶️ edin.ac/4hUL3Lh

www.404media.co/declassified...

www.theguardian.com/politics/202...