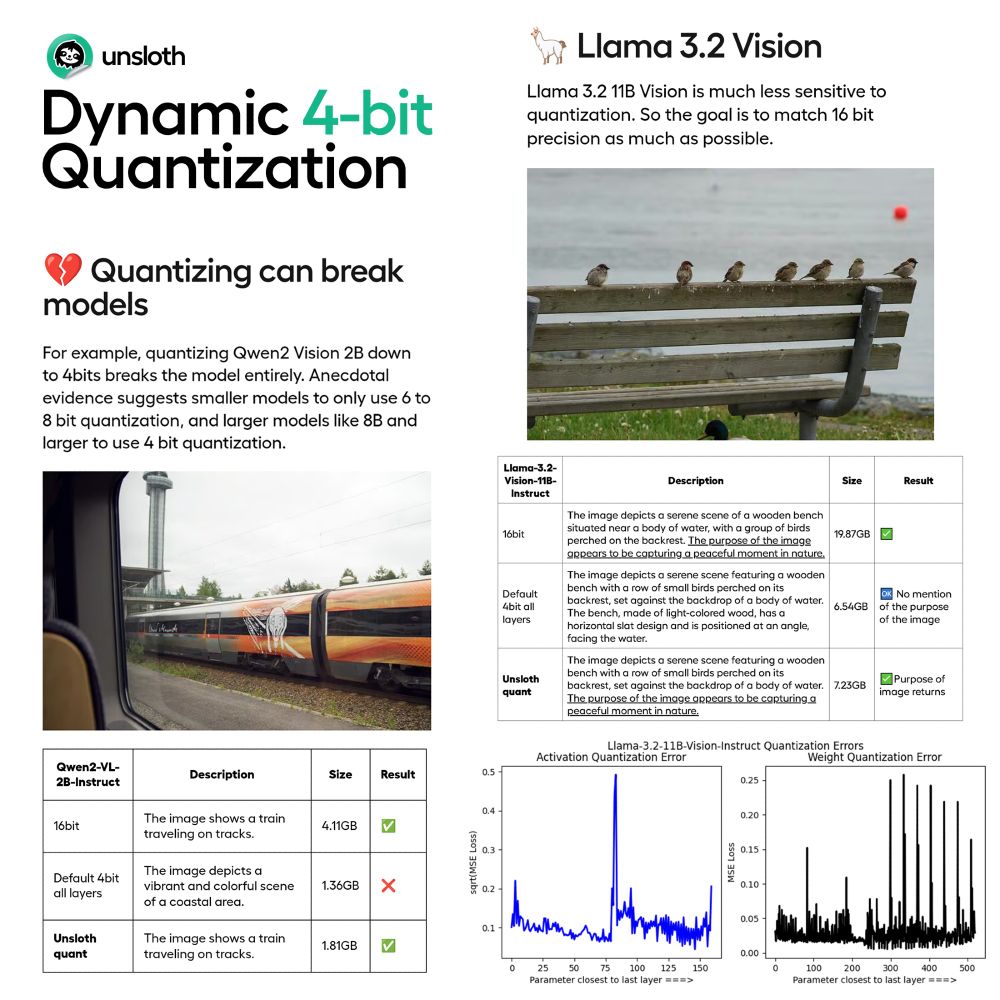

Naive quantization often harms accuracy, so we avoid quantizing certain parameters. This achieves higher accuracy while using <10% more VRAM than BnB 4-bit.

Read our Blog: unsloth.ai/blog/dynamic...

Quants on Hugging Face: huggingface.co/collections/...
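
A minimal sketch of the idea of skipping certain parameters during quantization, in plain Python. Everything here is an assumption for illustration: the parameter names, the absmax 4-bit scheme, and the hand-picked `skip` set are hypothetical and are not the actual selection criteria or quantizer behind these quants.

```python
import random

def quantize_absmax_4bit(values):
    """Round-trip floats through symmetric 4-bit absmax quantization."""
    # Signed 4-bit levels in [-7, 7]; fall back to scale 1.0 for all-zero input.
    scale = max(abs(v) for v in values) / 7 or 1.0
    return [max(-7, min(7, round(v / scale))) * scale for v in values]

def selective_quantize(params, skip):
    """Quantize every tensor except those named in `skip` (kept in full precision)."""
    return {
        name: list(vals) if name in skip else quantize_absmax_4bit(vals)
        for name, vals in params.items()
    }

def mean_abs_error(original, approx):
    """Average absolute difference between two equally sized lists."""
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)

random.seed(0)
params = {  # hypothetical parameter names and values
    "attn.weight": [random.gauss(0, 1) for _ in range(256)],
    # One large outlier stretches the scale and crushes the small values.
    "mlp.weight": [random.gauss(0, 1) for _ in range(255)] + [50.0],
}

naive = selective_quantize(params, skip=set())
selective = selective_quantize(params, skip={"mlp.weight"})

for name in params:
    print(
        name,
        round(mean_abs_error(params[name], naive[name]), 3),
        round(mean_abs_error(params[name], selective[name]), 3),
    )
```

The tensor with the outlier loses most of its small values under naive quantization, while leaving it unquantized removes that error at the cost of storing it in full precision.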

If you are:

* Driven

* Love OSS

* Interested in distributed PyTorch training/FSDPv2/DeepSpeed

Come work with me!

Fully remote. Details on how to apply are in the comments.

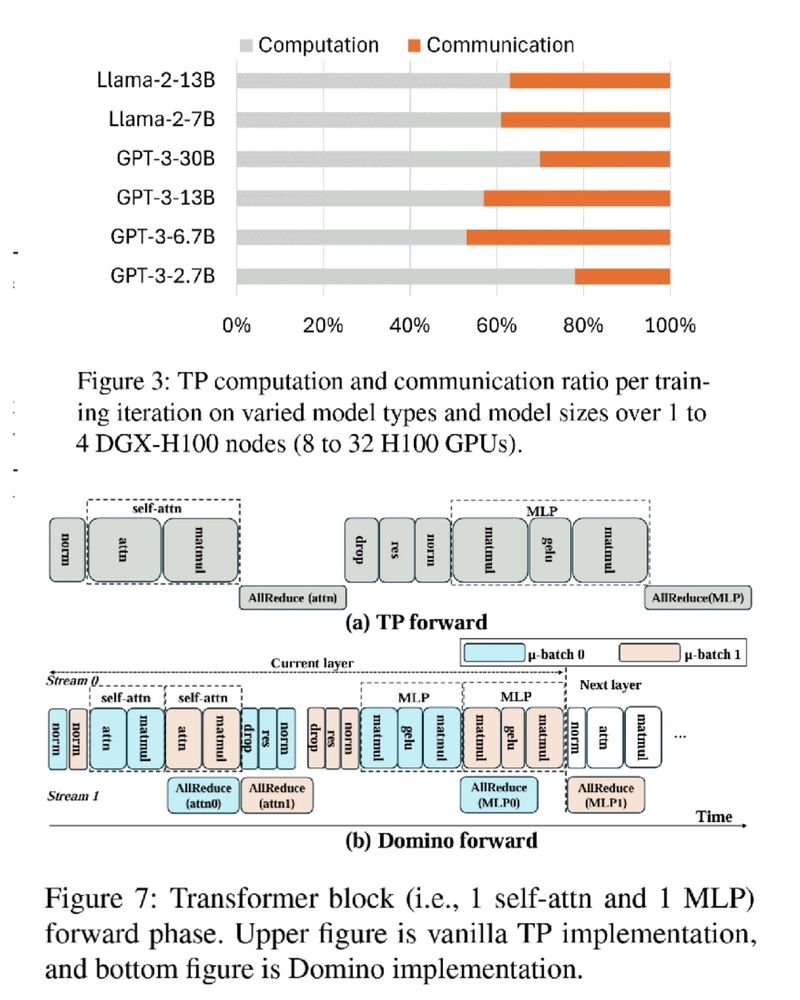

DeepSpeed Domino, with a new tensor parallelism engine, minimizes communication overhead for faster LLM training. 🚀

✅ Near-complete communication hiding

✅ Multi-node scalable solution

Blog: github.com/microsoft/De...
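
To make "communication hiding" concrete, here is a toy cost model, assuming work is split into independent chunks so that communication for one chunk can run while the next chunk computes. This is a generic pipeline-overlap estimate with hypothetical function names and timings, not Domino's actual scheduler.

```python
def sequential_time(n_chunks, compute, comm):
    """Each chunk computes, then waits for its communication to finish."""
    return n_chunks * (compute + comm)

def overlapped_time(n_chunks, compute, comm):
    """Communication for chunk i runs while chunk i+1 computes.

    The first compute and the last communication cannot be hidden;
    every step in between costs whichever of the two is slower.
    """
    return compute + (n_chunks - 1) * max(compute, comm) + comm

def exposed_comm_fraction(n_chunks, compute, comm):
    """Share of total communication time that still delays the step."""
    exposed = overlapped_time(n_chunks, compute, comm) - n_chunks * compute
    return exposed / (n_chunks * comm)

# Hypothetical timings: 4 chunks, 3 ms compute and 1 ms communication each.
print(sequential_time(4, 3.0, 1.0))        # 16.0
print(overlapped_time(4, 3.0, 1.0))        # 13.0
print(exposed_comm_fraction(4, 3.0, 1.0))  # 0.25
```

In this model, when compute per chunk is at least as long as communication, only the final chunk's communication stays exposed, so the exposed fraction shrinks as the number of chunks grows.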

Outperforms all models at similar GPU RAM usage and token throughput

Blog post: huggingface.co/blog/smolvlm

github.com/google-resea...

recently, @primeintellect.bsky.social announced that they finished their 10B distributed training run, carried out across the world.

what is it exactly?

🧵

arxiv.org/abs/2407.036...

* Add a small MLP + classifier that predicts a temperature per token

* They train the MLP with a variant of DPO (arxiv.org/abs/2305.18290) with the temperatures as latent variables

* low temp for math, high for creative tasks
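
A rough sketch of the inference side of the bullets above, assuming the classifier picks one of a few candidate temperatures from the token's hidden state. The candidate values, the hand-set weights, and the function names are hypothetical; the DPO-style training is not shown.

```python
import math

TEMPERATURES = [0.2, 0.7, 1.2]  # hypothetical candidate temperatures

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by the temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def predict_temperature(hidden, w1, w2):
    """Tiny MLP + classifier: hidden state -> ReLU layer -> one of TEMPERATURES."""
    h = [max(0.0, sum(w * x for w, x in zip(row, hidden))) for row in w1]
    class_logits = [sum(w * x for w, x in zip(row, h)) for row in w2]
    return TEMPERATURES[class_logits.index(max(class_logits))]

# Hand-set weights standing in for a trained MLP (illustration only).
w1 = [[1.0, 0.0], [0.0, 1.0]]
w2 = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]

math_like = [1.0, 0.0]      # hypothetical hidden state for a math token
creative_like = [0.0, 1.0]  # hypothetical hidden state for a creative token
token_logits = [2.0, 1.0, 0.5]

for hidden in (math_like, creative_like):
    t = predict_temperature(hidden, w1, w2)
    print(t, [round(p, 3) for p in softmax(token_logits, t)])
```

A low predicted temperature concentrates probability on the top token, while a high one spreads it out, which matches the math versus creative split in the last bullet.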

gist.github.com/hamelsmu/fb9...

github.com/xjdr-alt/ent...
